As we step into the world of LLM-powered agents, with multi-agent conversation frameworks such as AutoGen and autonomous agents such as AutoGPT and AgentGPT already in play, it should dawn on us that we are not looking at an incremental, evolutionary step but at a giant leap into hitherto unexplored territory.

Access to such tools, exemplified by AutoGen's features, opens an exciting new avenue for applying LLMs to data science with little friction. Imagine a world where your analytics workflows are no longer solo endeavors but collaborative symphonies, performed by a team of specialized virtual analysts, each contributing its own expertise to complex data challenges. This new paradigm is meant not to replace human insight but to augment it, forming a synergistic partnership between humans and machines that speeds up both the generation of insights and the delivery of data projects.

But what does this mean for everyone involved? Above all, an imperative to adapt: deepening domain knowledge and honing sense-making skills become critical as we navigate these new workflows, so that AI agents are integrated purposefully. A hybrid workforce of AI agents and humans working together also has organizational implications, opening the way to a more agile, innovative approach to data practice and problem-solving.

This journey, however, is by no means free of challenges, which range from the novelty of multi-agent AI frameworks to privacy and data-handling concerns. These challenges call for a visionary change in data strategy and a forward-looking approach to building the competencies needed to adapt and to genuinely embrace the change.

|Framework Options for creating Agents – A brief introduction

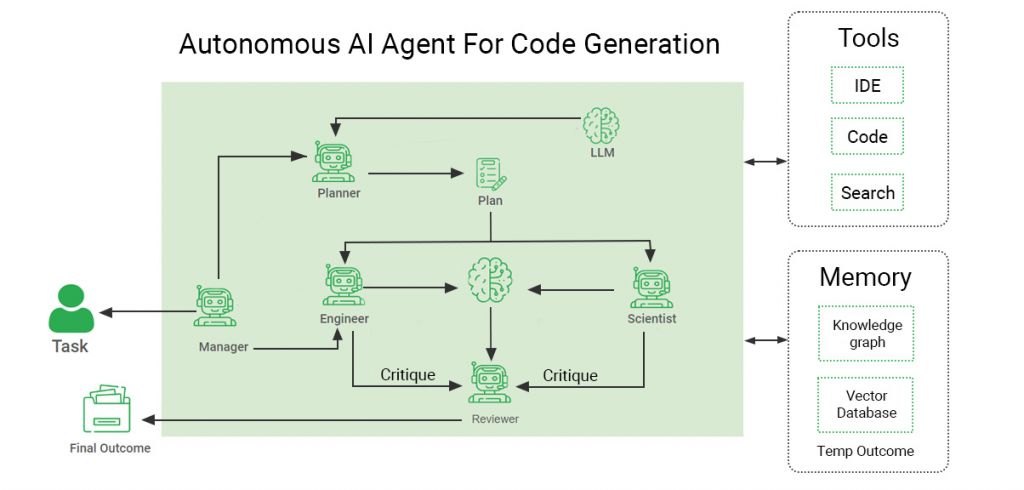

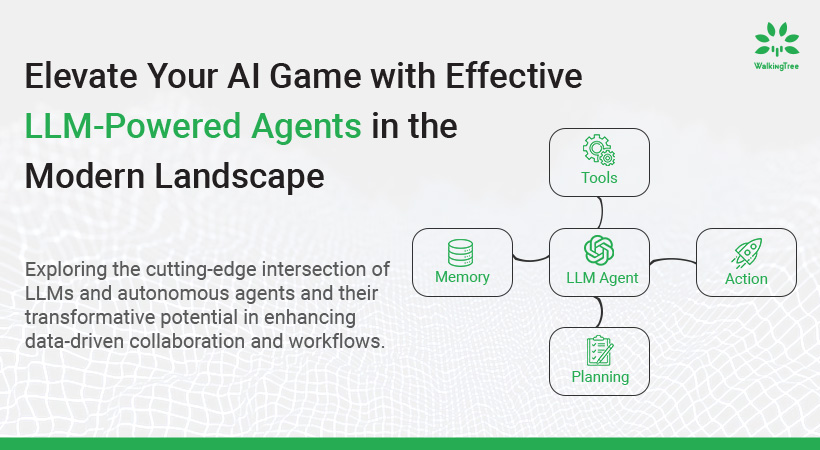

In this context, an “Autonomous AI Agent” is a specialized system that operates independently: it makes decisions and performs actions with minimal or no human guidance, and it observes its environment to learn from experience and from additional information.
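The observe-decide-act-learn cycle in this definition can be sketched without any framework. The following is a minimal Python sketch under simplifying assumptions: the environment is a toy number-guessing task, and a simple rule-based policy stands in for the LLM that would make decisions in a real agent. All class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GuessingEnvironment:
    """Toy environment (hypothetical): the agent must find a hidden integer."""
    target: int

    def respond(self, guess: int) -> str:
        # Feedback the agent can observe after acting.
        if guess < self.target:
            return "higher"
        if guess > self.target:
            return "lower"
        return "correct"


@dataclass
class AutonomousAgent:
    """Observe-decide-act-learn loop; the rule-based policy stands in for an LLM."""
    low: int
    high: int
    memory: list = field(default_factory=list)  # (action, observation) history

    def decide(self) -> int:
        # Decide: pick the midpoint of the range still consistent with experience.
        return (self.low + self.high) // 2

    def learn(self, guess: int, observation: str) -> None:
        # Learn: remember the experience and narrow the belief about the target.
        self.memory.append((guess, observation))
        if observation == "higher":
            self.low = guess + 1
        elif observation == "lower":
            self.high = guess - 1

    def run(self, env: GuessingEnvironment, max_steps: int = 20) -> bool:
        for _ in range(max_steps):
            guess = self.decide()             # decide
            observation = env.respond(guess)  # act on the environment and observe
            self.learn(guess, observation)    # learn from the outcome
            if observation == "correct":
                return True
        return False


agent = AutonomousAgent(low=1, high=100)
solved = agent.run(GuessingEnvironment(target=73))
print(solved, agent.memory)
```

Each iteration narrows the agent's belief using what it has observed so far, which is the "learning from experience" part of the definition in its simplest form.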

In particular, we will look at three frameworks, AutoGen, LangChain, and LlamaIndex, which provide a base for defining autonomous agent workflows powered by LLMs. These frameworks sit at the frontier of AI development, offering a backbone for building complex AI systems that can automate functions, analyze data, and communicate with users in more advanced ways.

AutoGen

AutoGen is a framework for building LLM applications in which multiple agents converse with one another to solve tasks, with strong support for generating and executing code as part of those workflows. It simplifies development by providing a high-level abstraction for defining multiple customizable agents that collaborate toward a common result. AutoGen offers capabilities such as retrieval-augmented agents, teachable agents, and agents backed by custom models, making it possible to bring different agent capabilities on board. It also provides enhanced LLM inference APIs aimed at improving inference performance and reducing cost.
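To make the idea of agents collaborating through conversation concrete, here is a framework-free sketch of a two-agent chat: an analyst agent answers, a reviewer agent checks the answer and ends the conversation with a `TERMINATE` keyword, and a turn limit guards against endless loops. This only mimics the conversational pattern; it is not AutoGen's API, the reply functions are rule-based stand-ins for LLM calls, and all names (including the termination keyword) are assumptions for this sketch.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (sender name, content)


@dataclass
class ChatAgent:
    """Hypothetical conversable agent: a name plus a reply function.
    In a real system, reply_fn would call an LLM."""
    name: str
    reply_fn: Callable[[List[Message]], str]


def run_two_agent_chat(initiator: ChatAgent, responder: ChatAgent,
                       task: str, max_turns: int = 6) -> List[Message]:
    """Alternate turns until a message contains TERMINATE or max_turns is reached."""
    history: List[Message] = [(initiator.name, task)]
    speakers = [responder, initiator]
    for turn in range(max_turns):
        speaker = speakers[turn % 2]
        content = speaker.reply_fn(history)
        history.append((speaker.name, content))
        if "TERMINATE" in content:
            break
    return history


DATA = [4.0, 8.0, None, 16.0]  # toy dataset with one missing value


def analyst_reply(history: List[Message]) -> str:
    # Drop missing values only once the reviewer has asked for it.
    if any("drop missing" in content for _, content in history):
        clean = [x for x in DATA if x is not None]
        return f"mean={sum(clean) / len(clean):.2f}"
    return "mean=unavailable (found None)"


def reviewer_reply(history: List[Message]) -> str:
    # Approve a numeric result; otherwise request a revision.
    last = history[-1][1]
    if last.startswith("mean=") and "unavailable" not in last:
        return f"Verified {last}. TERMINATE"
    return "Please drop missing values and recompute."


reviewer = ChatAgent("reviewer", reviewer_reply)
analyst = ChatAgent("analyst", analyst_reply)
transcript = run_two_agent_chat(reviewer, analyst, "Compute the mean of the dataset.")
for sender, content in transcript:
    print(f"{sender}: {content}")
```

The reviewer's first reply forces a revision, so the transcript contains one round of critique before the verified answer; the turn limit is the safety net if the agents never agree.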

LangChain

LangChain is a framework that integrates language models and defines the sequence of steps needed to implement single- or multi-agent behavior. LangChain offers a robust set of capabilities for defining agents, along with comprehensive guides to help users apply them effectively. Users can build custom agents tailored to specific needs, stream both intermediate steps and tokens, and create agents that return structured output. LangChain also supports advanced usage of the AgentExecutor: running it as an iterator, handling parsing errors, returning intermediate steps, capping the maximum number of iterations, and setting timeouts for agents. With these capabilities, LangChain enables users to build sophisticated, efficient agent-based systems for diverse tasks.
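The AgentExecutor features listed above (iteration limits, timeouts, parsing-error handling, intermediate steps, and iterator-style execution) are easiest to understand as guardrails around a loop. The sketch below is a hypothetical, simplified executor in plain Python that illustrates those ideas; it is not LangChain's `AgentExecutor` implementation or API. It assumes a text protocol of `Action: tool[input]` and `Final Answer: ...` lines, and a scripted function stands in for the LLM.

```python
import re
import time
from typing import Callable, Dict, Iterator, List, Tuple

ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]$")
FINAL_RE = re.compile(r"^Final Answer:\s*(.*)$")


class OutputParsingError(ValueError):
    """Raised when model output matches neither expected format."""


def parse(text: str) -> Tuple[str, ...]:
    """Turn raw model text into ("final", answer) or ("action", tool, tool_input)."""
    text = text.strip()
    match = FINAL_RE.match(text)
    if match:
        return ("final", match.group(1))
    match = ACTION_RE.match(text)
    if match:
        return ("action", match.group(1), match.group(2))
    raise OutputParsingError(f"could not parse {text!r}")


class SimpleAgentExecutor:
    """Hypothetical executor loop with guardrails; not LangChain's AgentExecutor."""

    def __init__(self, llm: Callable[[List[str]], str],
                 tools: Dict[str, Callable[[str], str]],
                 max_iterations: int = 5, max_execution_time: float = 10.0):
        self.llm = llm
        self.tools = tools
        self.max_iterations = max_iterations
        self.max_execution_time = max_execution_time  # seconds

    def iter_steps(self, question: str) -> Iterator[Tuple[str, str]]:
        """Yield (model_output, observation) pairs one step at a time."""
        scratchpad = [question]
        start = time.monotonic()
        for _ in range(self.max_iterations):
            if time.monotonic() - start > self.max_execution_time:
                yield ("", "STOPPED: time limit reached")
                return
            raw = self.llm(scratchpad)
            try:
                parsed = parse(raw)
            except OutputParsingError as err:
                # Parsing-error handling: feed the error back instead of crashing.
                observation = f"Invalid format ({err}); reply with 'Action: tool[input]'."
            else:
                if parsed[0] == "final":
                    yield (raw, "FINAL: " + parsed[1])
                    return
                _, tool_name, tool_input = parsed
                tool = self.tools.get(tool_name)
                observation = tool(tool_input) if tool else f"unknown tool {tool_name!r}"
            scratchpad.append(f"{raw}\nObservation: {observation}")
            yield (raw, observation)
        yield ("", "STOPPED: iteration limit reached")

    def run(self, question: str) -> Dict[str, object]:
        steps = list(self.iter_steps(question))
        last = steps[-1][1]
        output = last[len("FINAL: "):] if last.startswith("FINAL: ") else last
        return {"output": output, "intermediate_steps": steps[:-1]}


SCRIPT = [
    "I should count the words",  # malformed on purpose: exercises error handling
    "Action: word_count[autonomous agents collaborate]",
    "Final Answer: 3 words",
]


def scripted_llm(scratchpad: List[str]) -> str:
    # Stand-in for an LLM: returns the next scripted reply.
    return SCRIPT[len(scratchpad) - 1]


tools = {"word_count": lambda text: str(len(text.split()))}
executor = SimpleAgentExecutor(scripted_llm, tools)
result = executor.run("How many words are in 'autonomous agents collaborate'?")
print(result["output"])
```

Because `iter_steps` is a generator, a caller can consume it step by step (for example, to stream progress or stop early), while `run` simply collects every step and separates the final output from the intermediate ones.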

LlamaIndex agents

LlamaIndex’s Data Agents, powered by large language models (LLMs), act as intelligent knowledge workers that can perform a range of tasks over diverse data types: unstructured, semi-structured, and structured. They go beyond traditional query engines by not only retrieving data but also dynamically ingesting and modifying data from various sources. Building a Data Agent involves two core components: a reasoning loop and tool abstractions. The reasoning loop governs the agent’s decision-making, determining which APIs or tools to use, in what sequence, and with what parameters for each tool call. Several agent types, including the OpenAI Function agent, the ReAct agent, and the LLMCompiler agent, cater to different needs. LlamaIndex also offers a lower-level agent API for controlled, step-wise execution, which gives users finer control over task creation and lets them inspect the input and output of each step.
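The two core components named here, a reasoning loop and tool abstractions, together with step-wise execution, can be illustrated with a small data agent over a structured table. This is a minimal sketch under stated assumptions: the `Tool`, `Task`, `reason`, and `run_step` names are hypothetical and do not reproduce LlamaIndex’s API, and `reason` is a hard-coded rule set for one question, standing in for the LLM that would choose the tools, their order, and their parameters.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class Tool:
    """Tool abstraction: a name, a description a reasoner could read, and a function."""
    name: str
    description: str
    fn: Callable[..., Any]


@dataclass
class StepOutput:
    tool_name: str
    kwargs: Dict[str, Any]
    result: Any


@dataclass
class Task:
    query: str
    state: Dict[str, Any] = field(default_factory=dict)
    history: List[StepOutput] = field(default_factory=list)
    done: bool = False


SALES = [
    {"region": "EU", "revenue": 120},
    {"region": "US", "revenue": 150},
    {"region": "EU", "revenue": 80},
]

DATA_TOOLS = {t.name: t for t in [
    Tool("load_rows", "Load the sales table", lambda: list(SALES)),
    Tool("filter_rows", "Keep rows where column == value",
         lambda rows, column, value: [r for r in rows if r[column] == value]),
    Tool("sum_column", "Sum a numeric column",
         lambda rows, column: sum(r[column] for r in rows)),
]}


def reason(task: Task) -> Optional[Tuple[str, Dict[str, Any]]]:
    """Stand-in for the LLM reasoning loop (hard-coded for one question): choose the
    next tool and its parameters from the current state, or None when done."""
    if "rows" not in task.state:
        return ("load_rows", {})
    if not task.state.get("filtered"):
        return ("filter_rows", {"rows": task.state["rows"], "column": "region", "value": "EU"})
    if "total" not in task.state:
        return ("sum_column", {"rows": task.state["rows"], "column": "revenue"})
    return None


def run_step(task: Task) -> Optional[StepOutput]:
    """Execute exactly one reasoning step so the caller can inspect it."""
    decision = reason(task)
    if decision is None:
        task.done = True
        return None
    tool_name, kwargs = decision
    result = DATA_TOOLS[tool_name].fn(**kwargs)
    # Record the step's output in the task state for the next reasoning step.
    if tool_name == "load_rows":
        task.state["rows"] = result
    elif tool_name == "filter_rows":
        task.state["rows"], task.state["filtered"] = result, True
    else:
        task.state["total"] = result
    step = StepOutput(tool_name, kwargs, result)
    task.history.append(step)
    return step


task = Task("What is the total revenue for the EU region?")
while not task.done:
    step = run_step(task)
    if step is not None:
        print(step.tool_name, "->", step.result)
print("answer:", task.state["total"])
```

Calling `run_step` once per step, rather than running the whole loop at once, is what gives the caller a chance to inspect or adjust each tool call’s input and output before continuing.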

|How Do LLMs Contribute to the Agent Ecosystem?

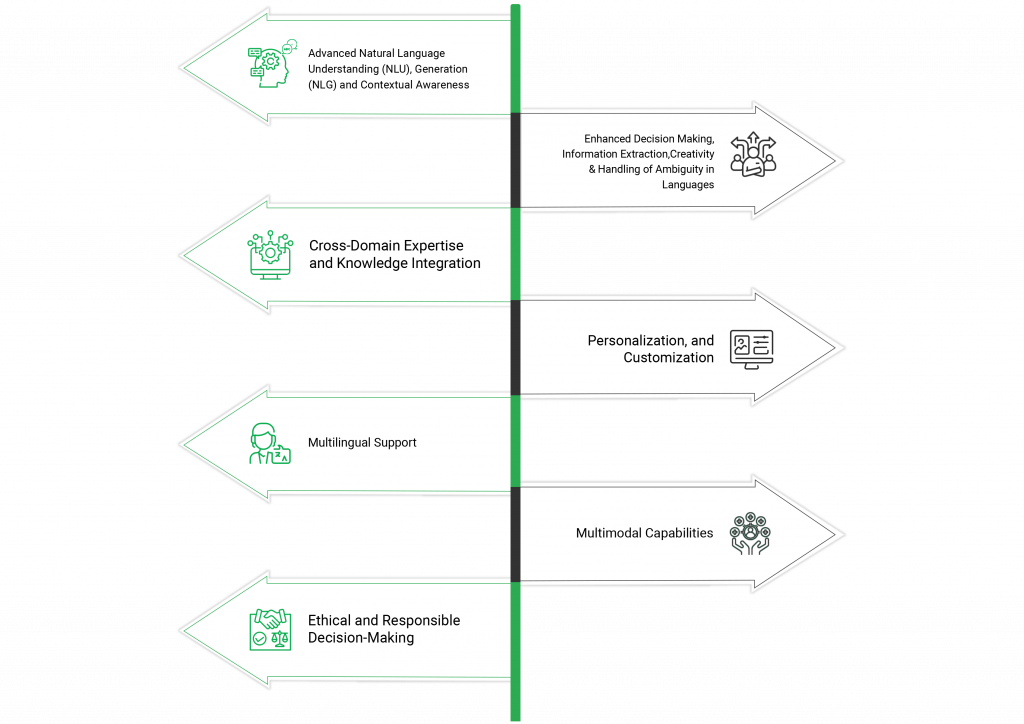

In the domain of autonomous agents, Large Language Models (LLMs) play a central role, reshaping the way these agents comprehend, engage with, and address human needs. Their integration into the agent ecosystem marks a shift towards intelligent systems capable of advanced Natural Language Understanding (NLU), Natural Language Generation (NLG), and contextual awareness. This integration empowers agents to interpret and generate human-like responses, facilitating more intuitive and effective interactions.

LLMs significantly enhance decision-making by assimilating large amounts of information, enabling agents to make informed choices. Their generative flexibility and their ability to handle linguistic ambiguity further help agents navigate complex conversational nuances.

Another notable advantage of LLMs is their cross-domain expertise and knowledge integration, allowing agents to draw from a broad spectrum of knowledge for versatile and adaptive responses across various domains.

Personalization and customization are core features of LLM-enabled agents, as they can tailor interactions to individual user preferences, histories, and contexts, fostering deeper connections and enhancing user satisfaction.

Multilingual support provided by LLMs ensures global accessibility, breaking down language barriers and making digital services more inclusive for users worldwide.

Multimodal LLMs also let agents understand and generate content across various media forms, enriching the interaction experience for users.

Ethical and responsible decision-making is paramount when integrating LLMs: agents must be guided to operate within ethical boundaries, respect user privacy, and promote trust.

The collaboration between autonomous agents and LLMs opens up new possibilities, redefining how we interact with digital systems. Through advanced understanding, decision-making, personalization, and ethical considerations, LLMs set a new standard for intelligent agent interactions, making them more human-like, accessible, and ethically responsible.

|Key considerations for defining agents

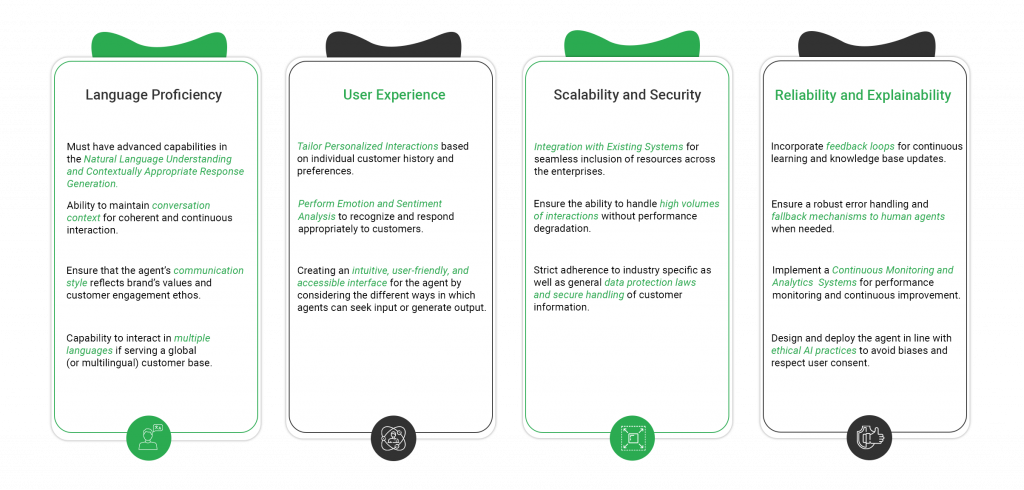

Defining autonomous agents that are both effective and ethical is of paramount importance, and it requires several key considerations to ensure that the agents behave as expected.

Advanced Language Capabilities with Multi-modal Interactions

Agents must possess advanced Natural Language Understanding (NLU), Natural Language Generation (NLG), and contextual awareness. A wide choice of LLMs, from open-source models to closed-source ones available through APIs, can supply this linguistic capability. This foundation lets agents comprehend and generate human-like responses, adapting to the nuances of language and context. In addition, multimodal language models allow agents to support multimodal interactions, including text, voice, and visual inputs, enriching the overall experience.

Decision-Making and Creativity

Enhanced decision-making and creativity are vital. Agents should extract information efficiently, handle ambiguity in language, find innovative solutions to complex problems, and select the right tool from the set of tools available to them.
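Choosing the right tool, and recognizing when a request is too ambiguous to choose, can be sketched as a scoring problem. In the hypothetical example below, a simple keyword-overlap score between the request and each tool description stands in for the LLM’s judgment; when no tool matches or the top scores tie, the agent asks a clarifying question instead of guessing. The tool names and descriptions are illustrative assumptions.

```python
import re
from typing import Dict, Set, Tuple

# Hypothetical tools and descriptions an agent might be given.
TOOL_DESCRIPTIONS: Dict[str, str] = {
    "sql_query": "query sales orders revenue table database",
    "web_search": "search web news latest public information",
    "chart": "plot chart visualize trend graph",
}


def tokens(text: str) -> Set[str]:
    # Lowercase word tokens; punctuation and digits are ignored.
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tool(request: str, tools: Dict[str, str]) -> Tuple[str, str]:
    """Return ("use", tool_name) for a clear best match, or ("clarify", question)
    when nothing matches or the top candidates tie. Assumes at least two tools."""
    words = tokens(request)
    scores = sorted(((len(words & tokens(desc)), name) for name, desc in tools.items()),
                    reverse=True)
    best_score, best_name = scores[0]
    if best_score == 0:
        return ("clarify", "Which data source or action do you need?")
    if scores[1][0] == best_score:
        tied = sorted(name for score, name in scores if score == best_score)
        return ("clarify", f"Did you mean {' or '.join(tied)}?")
    return ("use", best_name)


print(select_tool("plot the revenue trend", TOOL_DESCRIPTIONS))
print(select_tool("show revenue news", TOOL_DESCRIPTIONS))
```

Asking for clarification on a tie is a deliberate design choice: for an autonomous agent, a wrong tool call is usually costlier than one extra question to the user.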

Cross-Domain Knowledge Integration

Integrating knowledge across various domains enables agents to provide comprehensive and accurate responses, enhancing their utility across different sectors and use cases. Relational, vector, and graph databases can be used to efficiently store, retrieve, and supply the contextual knowledge that powers the agents.
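Retrieving contextual knowledge from a vector database boils down to embedding text and then ranking stored items by their similarity to the query. The sketch below assumes a toy bag-of-words "embedding" and an in-memory list in place of a learned embedding model and a real vector database; the class and function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Minimal vector store: keeps (id, text, vector) and returns the top-k matches."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        self.items.append((doc_id, text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[Tuple[str, float]]:
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, _, vec in self.items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


store = InMemoryVectorStore()
store.add("refunds", "Refunds are issued within 14 days of a returned order.")
store.add("shipping", "Orders ship within 2 business days from our warehouse.")
store.add("privacy", "Customer data is stored encrypted and never sold.")

hits = store.search("How many days until my refund is issued?", k=1)
print(hits)
```

In a production agent, the top-ranked passages would be inserted into the LLM prompt as context; swapping the toy `embed` function for a real embedding model leaves the ranking logic unchanged.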

Personalization and Multilingual Support

Agents should be designed to tailor interactions to individual user preferences, histories, and contexts, fostering deeper engagement and user satisfaction. Where users span several languages, the multilingual capabilities of the chosen LLM determine how accessible and inclusive the service can be.

Ethical Considerations

Agents should be designed for ethical and responsible decision-making: they must operate within clear ethical boundaries, respect user privacy, and earn user trust.

|Implementation Strategy

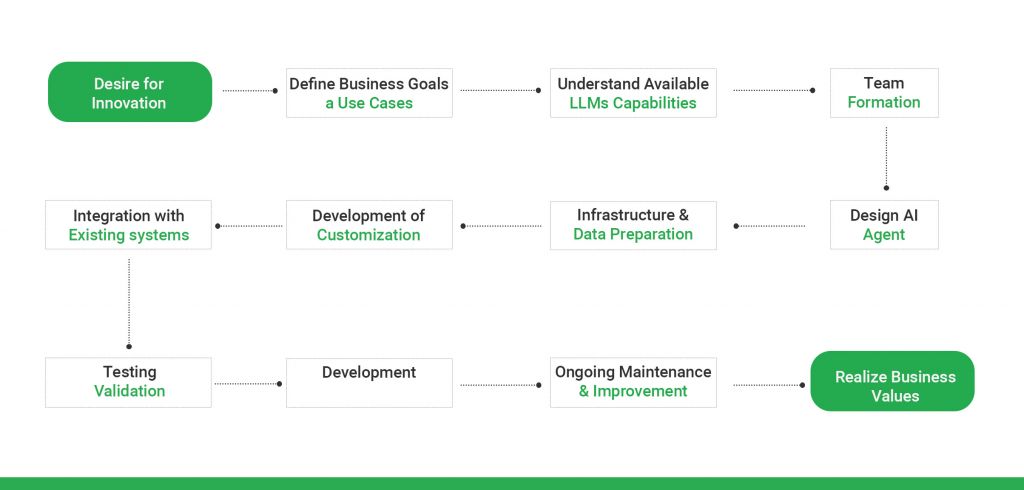

- Initial Steps

The journey begins with a clear desire for innovation, followed by defining specific business goals and understanding the capabilities of current LLMs. This stage sets a solid foundation for the subsequent steps.

- Team and Development

Forming a dedicated team with diverse skills is crucial. The design phase involves the technical development of the AI, focusing on learning algorithms and decision-making processes. Preparing the necessary infrastructure and customizing the AI to fit specific business needs are key components.

- Integration and Deployment

Seamless integration with existing systems ensures that the AI works harmoniously within the current business ecosystem. Rigorous testing and validation precede the deployment phase, where the AI system becomes operational.

- Continuous Improvement

Post-deployment, ongoing maintenance and updates are vital for adapting to user feedback and evolving requirements, ensuring that the AI remains effective, improves over time, and helps the enterprise realize business value from its autonomous agents.

|Autonomous Agents: What next?

As we stand on the brink of a transformative era with autonomous agents, it’s imperative to consider the trajectory of these intelligent systems and their integration into our digital ecosystem. The future of autonomous agents is not just an extension of current capabilities but a comprehensive evolution towards more sophisticated, intuitive, and adaptive systems that seamlessly integrate into various domains and tasks, enhancing business automation and human efficiency.

Informed decision-making in the agent implementation ecosystem:

The journey ahead requires a deep understanding of the Agent Implementation Ecosystem. Businesses must gain insights into the intricate web of technologies, frameworks, and methodologies that underpin autonomous agents. This understanding is crucial for making informed decisions that align with specific business requirements and goals. As we navigate through this ecosystem, the emphasis on strategic alignment with business objectives will be paramount, ensuring that the deployment of autonomous agents is not just technologically advanced but also relevant and impactful.

Evaluation landscape and performance assessment:

The evaluation of autonomous agents will become more nuanced, focusing on their effectiveness, adaptability, and performance across various scenarios. The exploration of the Evaluation Ecosystem will introduce advanced methodologies and metrics tailored to assess the multifaceted performance of these agents. This rigorous evaluation will ensure that the agents not only meet the intended objectives but also adapt to evolving challenges, driving optimal performance in dynamic environments.
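A basic evaluation harness runs an agent across a set of labeled scenarios and aggregates metrics per scenario type. The following is a minimal sketch, assuming exact-match correctness and step count as the only metrics; the `Scenario` type, `evaluate` function, and toy agent are hypothetical, and real evaluations would use richer metrics and far larger scenario sets.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Tuple


@dataclass
class Scenario:
    category: str
    prompt: str
    expected: str


def evaluate(agent: Callable[[str], Tuple[str, int]],
             scenarios: List[Scenario]) -> Dict[str, Dict[str, float]]:
    """Per-category success rate (exact match) and average number of steps taken."""
    results: Dict[str, List[Tuple[bool, int]]] = {}
    for sc in scenarios:
        answer, steps = agent(sc.prompt)
        correct = answer.strip().lower() == sc.expected.strip().lower()
        results.setdefault(sc.category, []).append((correct, steps))
    return {
        category: {
            "success_rate": sum(ok for ok, _ in runs) / len(runs),
            "avg_steps": mean(steps for _, steps in runs),
        }
        for category, runs in results.items()
    }


def toy_agent(prompt: str) -> Tuple[str, int]:
    # Hypothetical agent under test: solves "add a b" in one step, otherwise abstains.
    if prompt.startswith("add "):
        a, b = map(int, prompt[4:].split())
        return str(a + b), 1
    return "unknown", 3


scenarios = [
    Scenario("arithmetic", "add 2 3", "5"),
    Scenario("arithmetic", "add 10 -4", "6"),
    Scenario("knowledge", "capital of France", "Paris"),
    Scenario("knowledge", "an unanswerable question", "unknown"),
]
report = evaluate(toy_agent, scenarios)
print(report)
```

Reporting metrics per category, rather than as one overall score, is what reveals where an agent adapts well and where it falls short.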

Tailored development for enhanced business automation:

The future will witness the development of agents that are not just generic solutions but are meticulously tailored to specific domains and tasks. This customization will be key to unlocking the full potential of autonomous agents, allowing them to foster automation across diverse business processes. By understanding the unique challenges and requirements of different domains, these agents will be engineered to enhance human efficiency, streamline operations, and provide innovative solutions to complex problems.

As we look ahead, the path for autonomous agents is clear: an evolution toward more integrated, intelligent, and impactful systems that understand not only the tasks at hand but also the broader business and human context. The focus will be on creating systems that are autonomous not just in operation but also in learning, adapting, and evolving to meet the ever-changing needs of business and society.

|Conclusion

The transition into LLM-powered agents represents a transformative era in data science and technology, ushering in a new paradigm where the synergy between human intelligence and AI capabilities broadens the horizon of possibilities. As we navigate this new landscape, the emphasis on creativity, strategic thinking, and ethical considerations becomes increasingly crucial, ensuring that the advancements in AI not only enhance operational efficiencies but also contribute positively to societal progress. The journey ahead is filled with opportunities for innovation, collaboration, and growth, marking an exciting chapter in the ongoing evolution of data science and AI.

Watch our latest webinar, “Maximising Synergy with Autonomous Agents using RAG with LLMs”, for a deeper understanding of LLM-powered agents.