
Caching is one of the standard, proven techniques for improving the turnaround time of a service or application. In traditional application development, there are well-established patterns for building, managing and serving responses from a cache. In the context of Microservices, some of those patterns are less relevant and require us to be more creative. This post covers the different types of caching, their pros and cons, and the caching type I recommend for a Microservices environment.

Why caching

There is broad consensus across the design community that at least a few of the services in any enterprise application need caching enabled to ensure better turnaround. Caching is a technique where data is served directly from a cache, rather than by making a call to another application (a database or another service) or by recomputing a value that has already been computed once. We can reap significant performance benefits if we apply the right caching technique to the right data in a given context.

Caching types

- Based on how the cache is warmed, there are two types of caches:

  - Preloaded cache: The data is loaded into the cache before the service starts, so data is served from the cache from the very first request.

  - Lazy loaded cache: The cache is warmed as data is requested, i.e. the first request for a specific piece of data hits the backend (or triggers the computation), and from the next request onward the data is served from the cache.

- Based on where the cached data is maintained, there are two types of caches:

  - Inservice cache: The cache lives within the memory of the service instance.

  - Distributed cache: The cache is stored outside the service instance but is accessible to all instances of the given service.
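The two warm-up strategies can be contrasted with a minimal in-service sketch. `PreloadedCache`, `LazyLoadedCache` and the `load_all`/`load_one` callables are hypothetical names; the loaders stand in for whatever database query or downstream call a real service would make. Both classes are in-service caches because the data lives in a dictionary inside the process; thread safety and eviction are deliberately left out.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple


class PreloadedCache:
    """Warms the cache before the service starts taking requests."""

    def __init__(self, load_all: Callable[[], Iterable[Tuple[str, str]]]) -> None:
        # Load every entry up front, e.g. during service start-up.
        self._data: Dict[str, str] = dict(load_all())

    def get(self, key: str) -> Optional[str]:
        # Every request is served from memory; keys absent at start-up return None.
        return self._data.get(key)


class LazyLoadedCache:
    """Warms the cache on demand: the first request for a key pays the load cost."""

    def __init__(self, load_one: Callable[[str], str]) -> None:
        self._load_one = load_one
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> str:
        if key not in self._data:
            # First request for this key: hit the backend (or compute) and keep the result.
            self._data[key] = self._load_one(key)
        # Subsequent requests for the same key are served from memory.
        return self._data[key]


# Hypothetical reference data standing in for a database table.
COUNTRIES = {"IN": "India", "US": "United States"}

preloaded = PreloadedCache(lambda: COUNTRIES.items())
lazy = LazyLoadedCache(lambda code: COUNTRIES[code])
print(preloaded.get("IN"), lazy.get("US"))  # India United States
```

The trade-off shows up in where the cost lands: the preloaded cache pays the full load at start-up and only knows about keys that existed then, while the lazy cache starts immediately but makes the first request for each key slower.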

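Challenges in a Microservices environment

Microservices are inherently designed and expected to scale with ease, typically by running more instances of the same service as load grows, so the caching approach has to keep working as instances are added and removed.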
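The effect of scaling out on an in-service cache can be illustrated with a small simulation, under stated assumptions: `CountingBackend`, `ServiceInstance` and `count_backend_calls` are hypothetical, a round-robin loop stands in for a load balancer, and a dictionary shared between instances in one process stands in for a distributed cache (a real one, such as Redis, sits behind a network call). With in-service caches, every instance warms its own copy, so backend load for a key grows with the number of instances and each copy can go stale independently.

```python
from typing import Dict, MutableMapping


class CountingBackend:
    """Hypothetical stand-in for a database or downstream service; counts calls."""

    def __init__(self) -> None:
        self.calls = 0

    def load(self, key: str) -> str:
        self.calls += 1
        return f"value-for-{key}"


class ServiceInstance:
    """One instance of a microservice using a lazy-loaded cache.

    `store` is either a private dict (in-service cache) or a mapping shared by
    all instances (a stand-in for a distributed cache).
    """

    def __init__(self, backend: CountingBackend, store: MutableMapping[str, str]) -> None:
        self._backend = backend
        self._store = store

    def handle(self, key: str) -> str:
        if key not in self._store:
            self._store[key] = self._backend.load(key)
        return self._store[key]


def count_backend_calls(instances: int, shared: bool) -> int:
    backend = CountingBackend()
    shared_store: Dict[str, str] = {}
    # Each instance gets its own dict unless the cache is shared.
    pool = [ServiceInstance(backend, shared_store if shared else {}) for _ in range(instances)]
    # Round-robin "load balancer": requests for the same key hit every instance.
    for i in range(instances * 10):
        pool[i % instances].handle("customer-123")
    return backend.calls


print(count_backend_calls(instances=5, shared=False))  # 5: each instance warms its own cache
print(count_backend_calls(instances=5, shared=True))   # 1: the first miss warms it for everyone
```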

| Cache Type | Inservice Cache | Distributed Cache |
|---|---|---|
| Preloaded Cache | Pros:<br>Cons: | Pros:<br>Cons: |
| Lazy Loaded Cache | Pros:<br>Cons: | Pros:<br>Cons: |

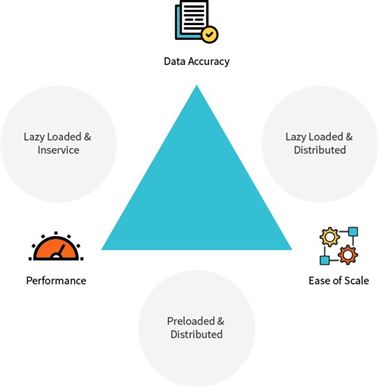

Cache Triangle

Summary

As part of this article, I have covered different aspects of caching and the pros and cons of each approach. I would recommend a distributed, lazy-loaded cache for a Microservices environment, as it helps strike the right balance among the three core aspects of caching shown in the Cache Triangle. With the advent of the public cloud, managing a distributed cache has become relatively easy. If we choose lazy loading, performance for the initial requests may not be great; if that becomes a serious concern, we can address it with instances that offer higher I/O throughput and/or computing power, or by optimizing certain parts of the functional logic. I hope this article helps you make an informed decision when choosing a caching technique for your Microservices environment.
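The recommended combination, a lazy-loaded distributed cache, is commonly implemented as a cache-aside read. The sketch below assumes a Redis-style client exposing `get(name)` and `set(name, value, ex=seconds)`, which matches the redis-py `Redis` client; `get_or_load`, `CacheClient`, the JSON encoding and the 300-second TTL are illustrative assumptions, not part of the original article. Whichever service instance misses first loads the value once and stores it where every other instance can read it.

```python
import json
from typing import Any, Callable, Optional, Protocol


class CacheClient(Protocol):
    """The subset of a Redis-style client used here (assumed; redis-py's Redis has both)."""

    def get(self, name: str) -> Optional[bytes]: ...

    def set(self, name: str, value: str, ex: Optional[int] = None) -> Any: ...


def get_or_load(
    client: CacheClient,
    key: str,
    loader: Callable[[], Any],
    ttl_seconds: int = 300,  # Assumed TTL; bounds how long a stale value can be served.
) -> Any:
    """Lazy-loaded (cache-aside) read against a distributed cache."""
    cached = client.get(key)
    if cached is not None:
        # Hit: some instance already loaded this key, so no backend call is needed.
        return json.loads(cached)
    # Miss: load from the system of record, then share the result with all instances.
    value = loader()
    client.set(key, json.dumps(value), ex=ttl_seconds)
    return value
```

With redis-py installed, `client` could be `redis.Redis(host="localhost", port=6379)`; the host and port are placeholders. Because the cache lives outside the service, instances can be added or removed without each one having to warm its own copy.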