Why should you go serverless (and why Red Hat OpenShift is a great choice!)

Serverless – What is it?

What do we mean when we say go serverless?

When you go serverless, you build and run applications without needing deep insight into the application’s underlying infrastructure. A deployment model that allows you to do this is called serverless. Ultimately, the goal is to let developers focus on writing code and deciding where to run it, rather than on managing the infrastructure.

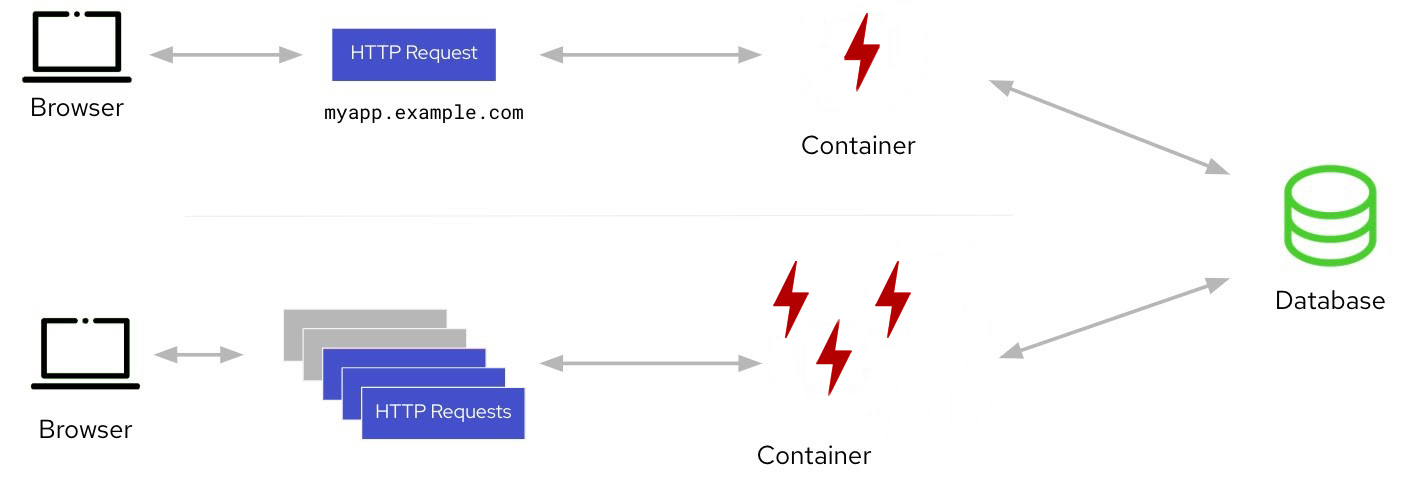

Serverless deployment also lets you run code and consume resources only when necessary, so an application that isn’t being used can sit idle. A key benefit of a serverless application is its scalability: when demand arrives, it can scale up automatically, and then scale back to zero once the demand has been met.

Red Hat OpenShift Serverless is a service based on the open-source Knative project. It provides an enterprise-grade serverless platform that brings portability and consistency across hybrid and multi-cloud environments. It allows developers to build cloud-native, source-centric applications using Kubernetes’ various custom resource definitions (CRDs) and associated controllers.

Why go serverless?

A serverless application is separated from its underlying infrastructure, which allows organizations to innovate faster. Because applications are packaged as OCI-compliant containers, they can run anywhere, regardless of their programming language.

Using upstream Knative, it is possible to execute any container in a serverless model. Knative (pronounced “Kay – Native”) is a Kubernetes extension that provides a set of components for deploying, executing, and managing modern serverless applications. By using Knative, you can deploy and execute your serverless applications on any Kubernetes platform, reducing the risk of vendor lock-in.

Knative has the following features:

- It can scale to zero. When a service receives no traffic, Knative can remove all of its pods, so the idle service consumes no CPU or memory. This saves money and resources.

- Knative can scale from zero. When traffic arrives or spikes, for whatever reason, Knative’s autoscaling starts new pods and scales up quickly to meet the demand.

- It allows for revisions and configurations. Knative records each change to your deployment’s configuration as a revision, so if you want to run canary or blue-green deployments, you can do so.

- Knative is associated with in-cluster image building through Tekton. Tekton is a powerful framework that grew out of Knative’s original build component and is used to create continuous integration and continuous delivery (CI/CD) systems. You can use Tekton to build and deploy across multiple hybrid clouds to any Kubernetes cluster.

- You can use Knative for traffic splitting. Knative lets you choose the percentage of traffic sent to each revision and split the traffic between them.

- You can use Knative’s eventing system to trigger workloads on individual events.
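To make the traffic-splitting idea concrete, here is a minimal Python sketch of percentage-based routing between two revisions, as in a canary rollout. It only models the concept: the revision names (`checkout-v1`, `checkout-v2`) and the helper functions are hypothetical, and in Knative the percentages are declared in the service's traffic configuration rather than written in application code.

```python
import random
from collections import Counter

# Hypothetical revision names and percentages, chosen for illustration only.
TRAFFIC = {"checkout-v1": 90, "checkout-v2": 10}


def pick_revision(traffic, rng):
    """Pick a revision with probability proportional to its percentage."""
    if sum(traffic.values()) != 100:
        raise ValueError("traffic percentages must add up to 100")
    names = list(traffic)
    weights = [traffic[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(traffic, requests, seed=0):
    """Route a number of simulated requests and count hits per revision."""
    rng = random.Random(seed)
    return Counter(pick_revision(traffic, rng) for _ in range(requests))


if __name__ == "__main__":
    for name, hits in simulate(TRAFFIC, 10_000).items():
        print(f"{name}: {hits} requests")
```

Shifting the canary from 10 to 50 percent is then a change to the percentages only, which is what makes gradual rollouts and quick rollbacks practical.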

A serverless container, unlike a function, can be built around any runtime. You package your code in a container with the runtimes it needs, and the serverless capability launches the application containers when an event triggers them. When not in use, the containers can scale to zero and consume no resources. Events from your own applications, cloud services from different providers, Software as a Service (SaaS) platforms, and other services can all be used to start applications.

Any of these event triggers can be used to start the program on demand. This structure lets you break your application into distinct containers. Once those containers are created, the application logic can activate each one, using incoming events to decide when to run it.
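The event-driven structure described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Knative's eventing API: the event types (`order.created`, `payment.received`), the event shape, and the `on_event` and `dispatch` helpers are all hypothetical. Each handler stands in for one of the distinct containers, and the incoming event's type decides which one runs.

```python
from typing import Callable, Dict, Optional

Handler = Callable[[dict], str]

# Registry mapping an event type to the handler that should run for it.
HANDLERS: Dict[str, Handler] = {}


def on_event(event_type: str):
    """Decorator that registers a handler for one (hypothetical) event type."""
    def register(func: Handler) -> Handler:
        HANDLERS[event_type] = func
        return func
    return register


@on_event("order.created")
def reserve_stock(event: dict) -> str:
    return f"reserved stock for order {event['data']['order_id']}"


@on_event("payment.received")
def ship_order(event: dict) -> str:
    return f"shipping order {event['data']['order_id']}"


def dispatch(event: dict) -> Optional[str]:
    """Run the handler registered for the event's type, if there is one."""
    handler = HANDLERS.get(event["type"])
    if handler is None:
        return None  # No workload is started for events nobody subscribed to.
    return handler(event)


if __name__ == "__main__":
    print(dispatch({"type": "order.created", "data": {"order_id": 42}}))
```

On a serverless platform the registry and dispatch step are handled by the platform's event routing, so each handler can be deployed, scaled, and idled independently.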

Pay-as-you-go

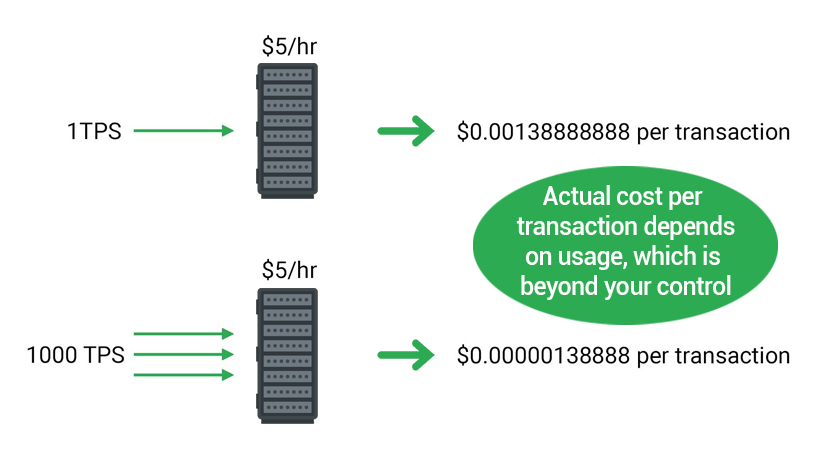

Serverless is often more cost-effective than renting or purchasing a fixed number of servers, which generally sit underutilized for significant periods. It can even be more cost-effective than provisioning an autoscaling group, because workloads can be bin-packed more efficiently onto the underlying machines. This model is known as pay-as-you-go computing: you are charged for the time and memory allocated to run your code, with no fees for idle time. Further immediate savings come from lower operating costs, including licenses, installation, dependencies, and the personnel costs of maintenance, support, and patching. The reduction in staff cost is a benefit that applies broadly to cloud computing.
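A rough back-of-the-envelope comparison shows how the billing models differ. The Python sketch below assumes a simple pricing scheme: fixed servers billed per hour, and serverless billed per GB-second of allocated memory. Every rate and workload figure in it is a made-up placeholder, not a real price from any provider.

```python
def fixed_cost(servers, hourly_rate, hours):
    """Cost of keeping a fixed number of servers running, busy or idle."""
    return servers * hourly_rate * hours


def pay_as_you_go_cost(invocations, seconds_per_invocation, memory_gb,
                       rate_per_gb_second):
    """Cost when billed only for the time and memory used while code runs."""
    gb_seconds = invocations * seconds_per_invocation * memory_gb
    return gb_seconds * rate_per_gb_second


if __name__ == "__main__":
    # Hypothetical month: two always-on servers versus one million short calls.
    always_on = fixed_cost(servers=2, hourly_rate=0.10, hours=720)
    on_demand = pay_as_you_go_cost(
        invocations=1_000_000,
        seconds_per_invocation=0.2,
        memory_gb=0.5,
        rate_per_gb_second=0.00002,
    )
    print(f"fixed servers: {always_on:.2f}")
    print(f"pay-as-you-go: {on_demand:.2f}")
```

The gap narrows as utilization rises, so a steady, heavily loaded workload may still be cheaper on fixed capacity. Plugging in your own figures is the only reliable way to tell.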

Scalability

Imagine if a shopping app could somehow add and withdraw delivery trucks at need, increasing the size of its fleet as the number of orders spikes (say, just before Christmas) and decreasing its fleet at times when fewer deliveries are necessary. That’s essentially what serverless applications are capable of.
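The fleet analogy maps onto a simple sizing rule: run just enough instances to cover the requests currently in flight, and none when there are no requests. The Python sketch below is a deliberate simplification, not the algorithm a real autoscaler uses. Real autoscalers typically smooth their metrics over time windows, and the function name and parameters here are hypothetical.

```python
import math


def desired_instances(in_flight_requests, target_per_instance, max_instances):
    """Return how many instances to run for the current load.

    Scales to zero when idle and never exceeds max_instances.
    """
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    if in_flight_requests <= 0:
        return 0  # Nothing to serve, so nothing needs to run.
    needed = math.ceil(in_flight_requests / target_per_instance)
    return min(max_instances, needed)


if __name__ == "__main__":
    # Quiet night, normal day, and a pre-holiday spike (illustrative loads).
    for load in (0, 35, 1000):
        print(load, "requests ->", desired_instances(load, 10, 20), "instances")
```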

Integration

Serverless is widely used. Applications built on serverless infrastructure scale up or down automatically as usage grows or shrinks. If an application needs to run as multiple instances, the vendor’s servers automatically start, run, and stop them as needed, often using containers or clusters. This scaling is event-based or trigger-based.

An application also starts up faster if it has been run recently. As a result, a serverless application can handle requests from many users just as readily as it processes a single request from a single user.

Ease of management

The serverless architecture helps the user:

- To reduce infrastructure management & maintenance.

- To build and deploy a scalable environment that matches the needs of the application.

- To enhance security with encrypted data and encrypted service endpoints.

- To onboard a new B2B partner quickly and efficiently.

The role of Red Hat OpenShift Serverless in resolving your business problem

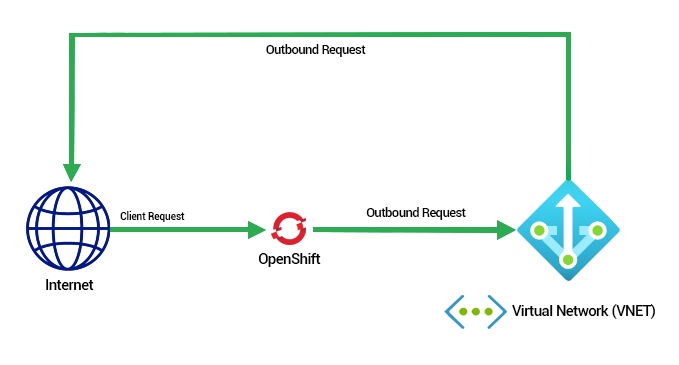

Red Hat OpenShift Serverless allows developers to create, deploy, and execute event-driven applications that start on an event trigger, scale up resources as needed, and then scale down after a resource burst. Red Hat OpenShift Serverless applications can run anywhere Red Hat OpenShift is installed, including on-premises, across multiple public cloud locations, and at the edge, all using the same interface.

The OpenShift UI has everything you need to manage all elements of serverless container deployment. With different ways to adjust the event parameters, developers may easily determine which events are driving the launch of their containerized apps.

You may choose and control the triggers that start and scale containers, as well as scale them back to zero when they’re not in use, thanks to a wide range of available event sources.

Serverless capabilities are completely integrated into the Red Hat OpenShift platform, making it easier for operations teams to manage serverless deployments while also making event-driven deployments easier for developers. Operations teams can maximize availability across a hybrid set of computing targets by aligning resource allocation to application consumption. By emphasizing the incoming event sources that are specified to start individual services, developers may easily discover how their code is activated.

As a result, Red Hat OpenShift allows you to build a framework that brings operational capabilities to application deployment.

Red Hat OpenShift is a great tool that will help you build serverless applications.

In this blog, we saw how going serverless helps organizations grow faster. Serverless applications are separated from their underlying infrastructure, which allows organizations to innovate faster.

A serverless application on Red Hat OpenShift can run on any system that has OpenShift installed. This includes on-premises, across multiple public cloud locations, and at the edge, all using the same interface. The OpenShift UI lets you manage all elements of serverless container deployment. Because serverless capabilities are fully integrated into the platform, you can bring operational capabilities to application deployment in Red Hat OpenShift.