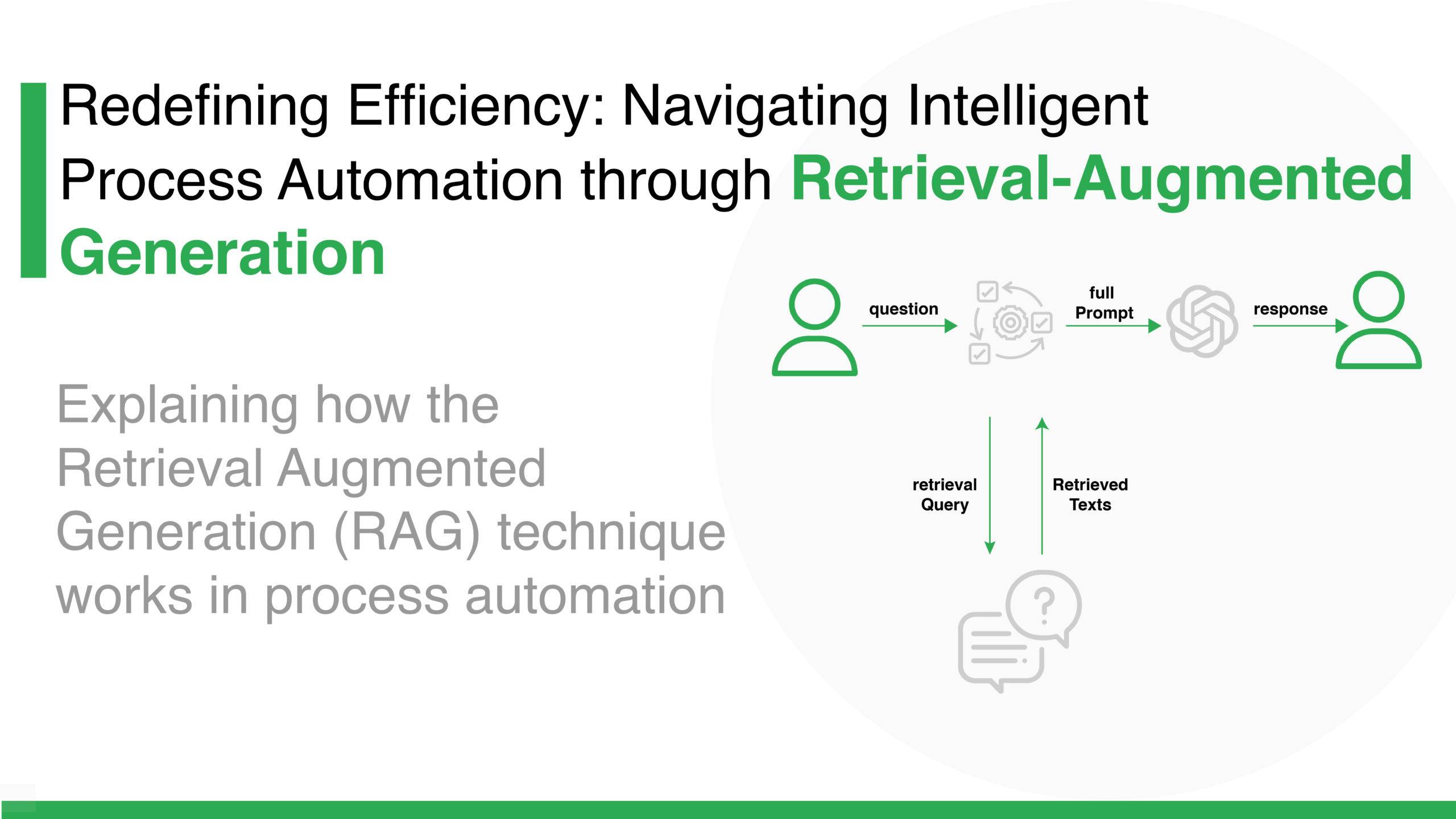

Redefining Efficiency: Navigating Intelligent Process Automation through Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) represents a transformative change in how AI can interact with information and perform tasks. By combining the strengths of powerful large language models (LLMs) with retrieval of external, context-specific knowledge, RAG opens up new possibilities for process automation across industries. The key to applying this approach effectively is to anchor it to a real business problem and fit it into an enterprise use case.

In this blog, we will delve into the different aspects of RAG and explore what an effective implementation looks like. A key consideration is implementing RAG so that it retrieves the correct data and can therefore return appropriate responses. Equally essential is a sturdy mechanism for evaluating the correctness of those responses. This should be measured by evaluating the individual components of the RAG pipeline with frameworks like RAGAS and LangSmith, using metrics such as context_relevancy and context_recall, which measure the performance of your retrieval system.
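To build intuition for what these retrieval metrics capture, here is a minimal sketch of simplified, set-based versions of context recall and context precision (the latter used as a rough stand-in for relevancy). This is not how RAGAS computes its scores: RAGAS typically relies on LLM-based judgments, so its numbers will differ. The sketch assumes a hypothetical test set in which each question has hand-labeled relevant chunk IDs.

```python
from dataclasses import dataclass


@dataclass
class RetrievalCase:
    """One labeled evaluation example (hypothetical schema)."""
    question: str
    retrieved_ids: list[str]  # chunk IDs returned by the retriever, in rank order
    relevant_ids: set[str]    # chunk IDs a human marked as needed to answer


def context_recall(case: RetrievalCase) -> float:
    """Share of the relevant chunks that the retriever actually returned."""
    if not case.relevant_ids:
        return 1.0  # nothing was needed, so nothing was missed
    hits = case.relevant_ids.intersection(case.retrieved_ids)
    return len(hits) / len(case.relevant_ids)


def context_precision(case: RetrievalCase) -> float:
    """Share of the returned chunks that are relevant (a rough relevancy proxy)."""
    if not case.retrieved_ids:
        return 0.0
    hits = [cid for cid in case.retrieved_ids if cid in case.relevant_ids]
    return len(hits) / len(case.retrieved_ids)


def evaluate(cases: list[RetrievalCase]) -> dict[str, float]:
    """Average both metrics over a test set."""
    n = len(cases)
    return {
        "context_recall": sum(context_recall(c) for c in cases) / n,
        "context_precision": sum(context_precision(c) for c in cases) / n,
    }


if __name__ == "__main__":
    cases = [
        RetrievalCase("What is the claim deadline?", ["c1", "c7", "c9"], {"c1", "c2"}),
        RetrievalCase("Who approves refunds?", ["c4", "c5"], {"c4"}),
    ]
    print(evaluate(cases))
```

Low recall points to the retriever missing needed material; low precision points to noisy context that the LLM has to ignore.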

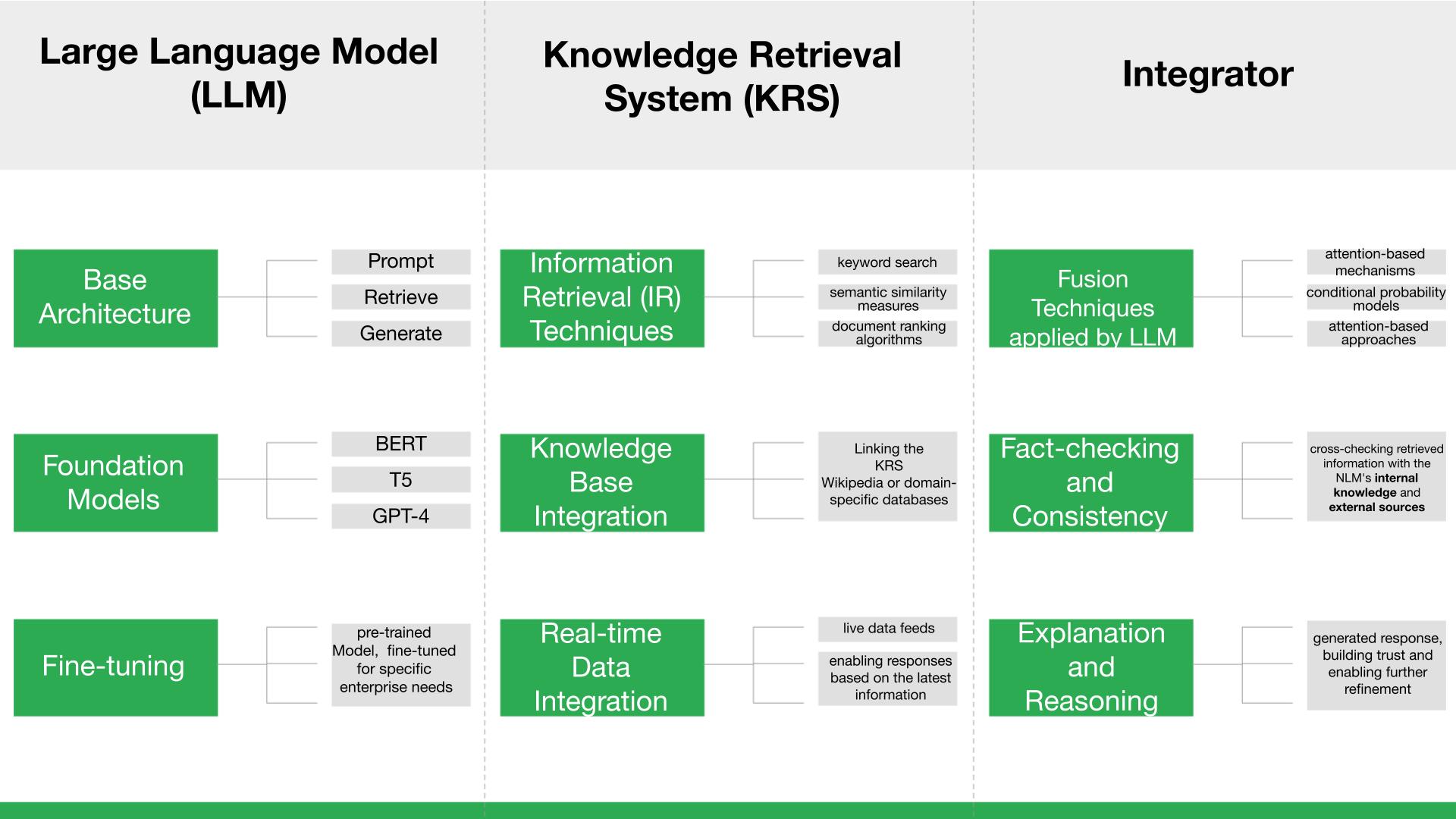

|A deeper look inside the RAG engine beyond basic components

While the main components of RAG – the large language model, knowledge retrieval system, and integrator – provide a basic framework, understanding their nuanced functionalities adds layers of complexity and reveals the true power of this architecture.

- Large Language Model (LLM): The LLM is the heart of this approach; it generates responses using the additional context supplied by retrieval. Its capabilities rest on:

  - Transformer Architecture: This allows the LLM to focus on relevant parts of the prompt and retrieved information, generating contextually aware responses.

  - Pre-trained Models: Popular choices include T5 and OpenAI models such as GPT-4, each with its own strengths and weaknesses; encoder-only models such as BERT are more commonly used on the retrieval side to produce embeddings. The choice of LLM depends on the specific task and the desired qualities of the output.

  - Fine-tuning: In some cases, pre-trained models can be adapted to task-specific data through fine-tuning. This can enhance the LLM’s understanding of the domain and improve its response accuracy.

- Knowledge Retrieval System (KRS):

  - Information Retrieval (IR) Techniques: These include keyword search, semantic similarity measures, and document ranking algorithms. RAG often employs a combination of techniques to ensure comprehensive and relevant retrieval.

  - Knowledge Base Integration: Linking the KRS to specific knowledge bases, such as Wikipedia, domain-specific databases, knowledge graphs, and document repositories, further enhances the quality and relevance of the retrieved information.

  - Real-time Data Integration: Advanced RAG systems can integrate live data feeds, enabling responses based on the latest information, which is particularly crucial for time-sensitive tasks.

- Integrator:

  - Fusion Techniques: Combining the LLM’s initial response and the retrieved information requires sophisticated fusion techniques. These may involve attention-based mechanisms, conditional probability models, or rule-based approaches.

  - Fact-checking and Consistency: The integrator ensures output coherence and factual accuracy by cross-checking retrieved information with the LLM’s internal knowledge and external sources.

  - Explanation and Reasoning: Integrating explainable AI techniques into the integrator allows users to understand the reasoning behind the generated response, building trust and enabling further refinement.

- Additionally, we can include a post-processing layer that further controls response accuracy through additional evaluation and, when needed, regeneration.
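To make the division of labor between these components concrete, here is a minimal, dependency-free sketch of a pipeline with retrieval, integration, and a post-processing check. It makes simplifying assumptions throughout: word-count vectors stand in for learned embeddings, fusion is plain prompt construction rather than an attention-based mechanism, `llm` is any callable you supply in place of a real model API, and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query: str, docs: list[str], k: int = 2, alpha: float = 0.5) -> list[int]:
    """Knowledge retrieval: rank documents by a mix of similarity and keyword overlap.

    `alpha` weights the vector-similarity score against the keyword score.
    """
    q_tokens = tokenize(query)
    q_vec, q_set = Counter(q_tokens), set(q_tokens)
    scored = []
    for i, doc in enumerate(docs):
        d_tokens = tokenize(doc)
        keyword = len(q_set & set(d_tokens)) / len(q_set) if q_set else 0.0
        semantic = cosine(q_vec, Counter(d_tokens))
        scored.append((alpha * semantic + (1 - alpha) * keyword, i))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [i for _, i in scored[:k]]


def build_prompt(query: str, docs: list[str], ids: list[int]) -> str:
    """Integrator: fuse the retrieved passages into the prompt, tagged by source number."""
    context = "\n".join(f"[{i}] {docs[i]}" for i in ids)
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"Sources:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def passes_check(answer: str, ids: list[int]) -> bool:
    """Post-processing: accept only answers that cite retrieved sources and nothing else."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return bool(cited) and cited <= set(ids)


def rag_answer(query: str, docs: list[str], llm: Callable[[str], str], max_attempts: int = 2) -> str:
    """Retrieve, generate, check, and regenerate if the draft fails the check."""
    ids = hybrid_retrieve(query, docs)
    prompt = build_prompt(query, docs, ids)
    for _ in range(max_attempts):
        draft = llm(prompt)
        if passes_check(draft, ids):
            return draft
    return "No grounded answer found; escalate to a human reviewer."


if __name__ == "__main__":
    docs = [
        "Claims must be filed within 30 days of the incident.",
        "Refunds are approved by the billing team.",
        "Our office is closed on public holidays.",
    ]

    def fake_llm(prompt: str) -> str:
        """Placeholder for a real model call; always returns the same cited answer."""
        return "Claims must be filed within 30 days [0]."

    print(rag_answer("How many days do I have to file claims?", docs, fake_llm))
```

The check in `passes_check` is deliberately crude: it confirms only that the answer cites retrieved sources, not that those sources actually support it.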

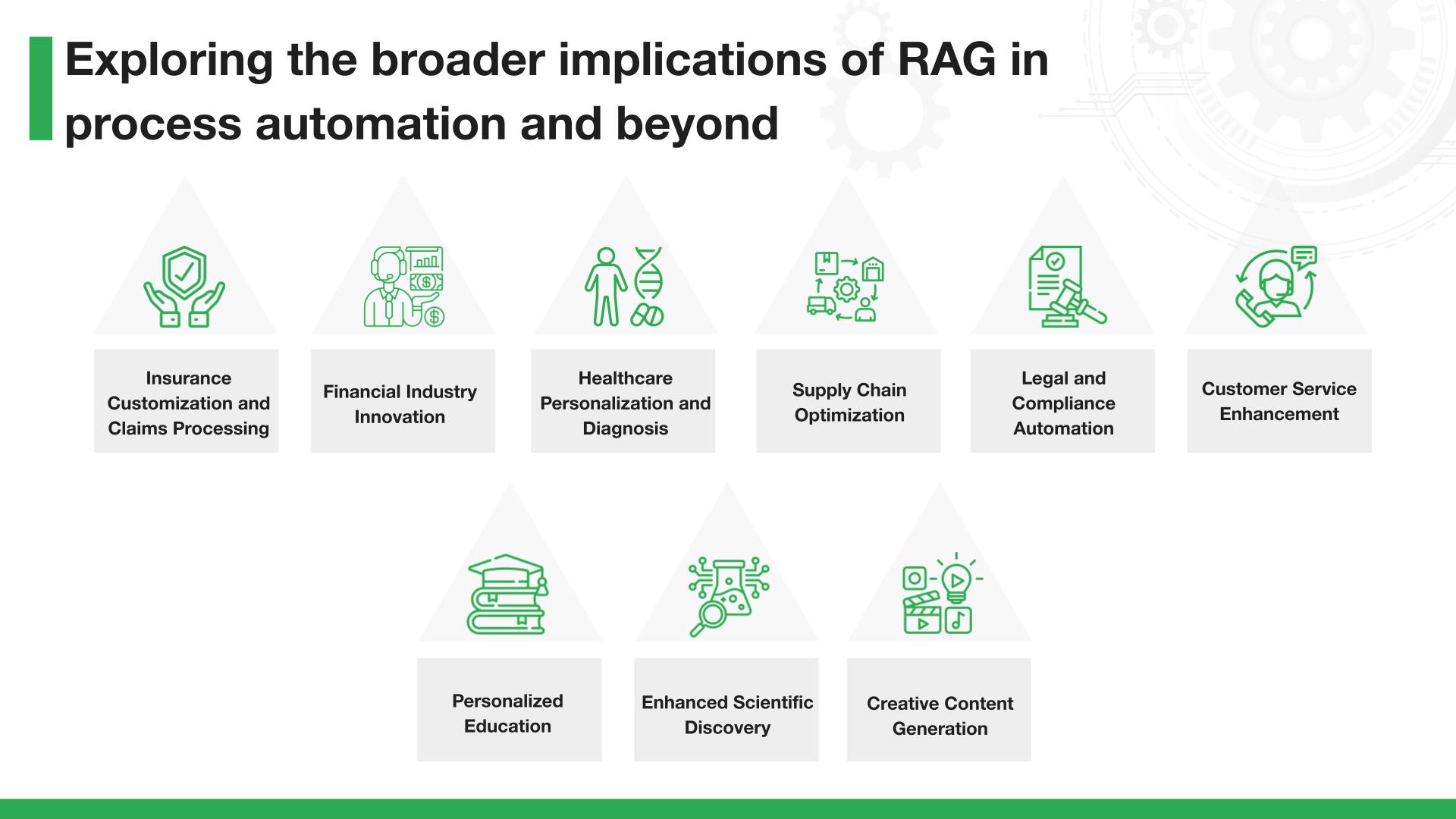

Process automation is one of the most immediate and impactful applications of RAG and LLMs. Combining the power of LLMs with domain knowledge creates task-specific expert agents that can act on behalf of human agents and increase their throughput. Some of the domain-specific applications include:

- Insurance Customization and Claims Processing: RAG-enabled agents could revolutionize the insurance industry by personalizing policies based on individual risk profiles extracted from a wide range of data sources. They could also streamline claims processing by quickly retrieving and analyzing past claims, policy details, and customer interactions to make informed decisions.

- Financial Industry Innovation: In finance, RAG can be utilized for real-time market analysis, fraud detection, and personalized financial advice. It could analyze vast arrays of financial data to identify trends, provide investment insights, or even predict market shifts, offering substantial benefits in trading, risk management, and customer service.

- Healthcare Personalization and Diagnosis: RAG could transform healthcare by providing personalized treatment plans based on a patient’s medical history and the latest medical research. It could assist in diagnosing diseases by comparing symptoms and medical images with a vast database of clinical cases, enhancing the accuracy and speed of diagnosis.

- Supply Chain Optimization: In the realm of logistics and supply chain, RAG can optimize routes and inventory management by analyzing real-time data streams from various sources, including weather, traffic, and purchasing trends, to ensure efficient and timely delivery of goods.

- Legal and Compliance Automation: RAG powered by LLMs can aid legal professionals by quickly retrieving relevant case law, precedents, and regulations, making the preparation of legal documents and compliance checks more efficient.

- Customer Service Enhancement: By integrating RAG in customer service, businesses can provide more accurate, personalized, and efficient responses. RAG can analyze customer data, preferences, and past interactions to tailor conversations and solve queries more effectively.

- Personalized Education: Imagine intelligent tutoring systems that tailor learning paths based on individual student needs, dynamically fetching relevant materials and adjusting explanations based on the student’s comprehension.

- Enhanced Scientific Discovery: Research assistants powered by RAG could analyze vast datasets of scientific literature, uncovering hidden patterns and generating new hypotheses, accelerating scientific breakthroughs.

- Creative Content Generation: Artists and writers could collaborate with RAG systems, leveraging external data and the model’s understanding of language to generate novel and insightful forms of creative expression.

All these use cases demonstrate the potential of these powerful agents, which act as co-pilots for human agents: they speed up tasks and provide first-hand information that a human can review and take forward.

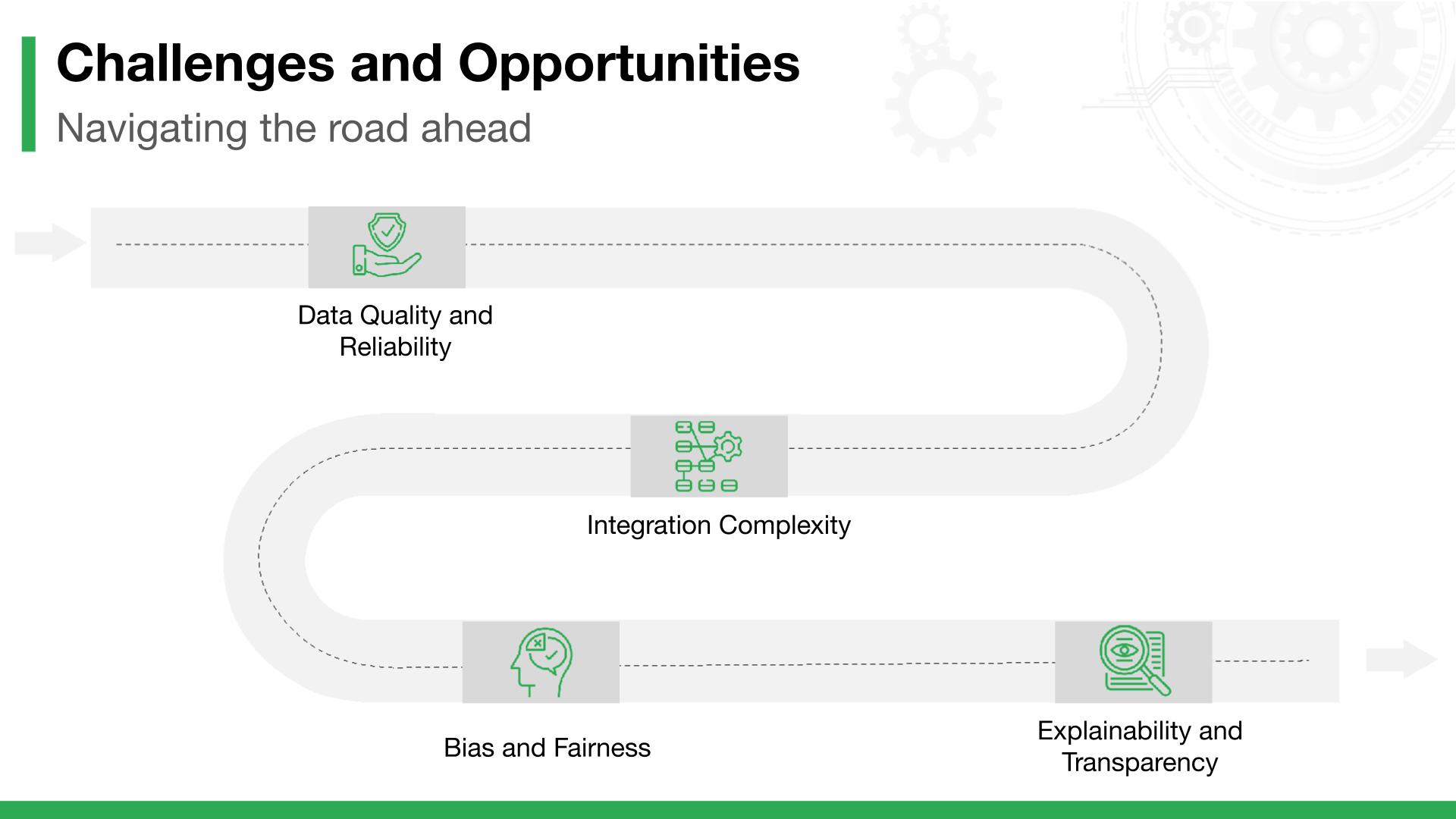

|Understanding challenges and opportunities while navigating the road ahead

While RAG presents significant opportunities, addressing its challenges and ethical considerations is crucial. Here’s a closer look at these challenges along with advanced techniques being developed to counter them:

Data Quality and Reliability:

- Challenges: Ensuring that the information retrieved by RAG systems is accurate and reliable is essential. Misinformation or poor-quality data can lead to incorrect conclusions.

- Advanced Techniques: Implementing robust filtering mechanisms, using verified and authoritative data sources, and continual updating of knowledge bases help maintain data quality.

Integration Complexity:

- Challenges: Integrating RAG systems with existing databases and workflows requires managing various data formats and ensuring efficient communication and processing.

- Advanced Techniques: More adaptable and flexible integration frameworks like LlamaIndex, standardized data formats, and APIs for easier interfacing between systems all make integration smoother and more reliable.
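The data-quality and integration techniques above can be made concrete with two steps: mapping every source into one standard record format, and filtering records before they reach the index. The sketch below illustrates this under stated assumptions: the two source adapters (`from_crm`, `from_wiki`), their field names, the trusted-source allowlist, and the 365-day freshness window are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Document:
    """One standard record format that every source is mapped into."""
    doc_id: str
    text: str
    source: str
    updated: date


def from_crm(record: dict) -> Document:
    """Adapter for a hypothetical CRM export with its own field names."""
    return Document(
        doc_id=f"crm-{record['id']}",
        text=record["body"],
        source="crm",
        updated=date.fromisoformat(record["last_modified"]),
    )


def from_wiki(page: dict) -> Document:
    """Adapter for a hypothetical internal wiki export."""
    return Document(
        doc_id=f"wiki-{page['slug']}",
        text=page["content"],
        source="wiki",
        updated=date.fromisoformat(page["edited_on"]),
    )


def quality_filter(docs: list[Document], trusted_sources: set[str],
                   max_age_days: int, today: date) -> list[Document]:
    """Keep non-empty documents from trusted sources that were updated recently enough."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        d for d in docs
        if d.source in trusted_sources and d.text.strip() and d.updated >= cutoff
    ]


if __name__ == "__main__":
    docs = [
        from_crm({"id": 7, "body": "Policy P-1 covers water damage.", "last_modified": "2024-05-20"}),
        from_wiki({"slug": "old-faq", "content": "Outdated FAQ.", "edited_on": "2019-01-01"}),
    ]
    kept = quality_filter(docs, trusted_sources={"crm", "wiki"}, max_age_days=365,
                          today=date(2024, 6, 1))
    print([d.doc_id for d in kept])  # ['crm-7']
```

Keeping one adapter per source means a new data format only requires a new adapter, while the filter and the index downstream stay unchanged.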

Bias and Fairness:

- Challenges: Like all AI systems, RAG can perpetuate and amplify biases present in the data or algorithms, affecting fairness and representation.

- Advanced Techniques: Continuous monitoring with bias-detection methods, together with updates driven by user feedback, is essential to ensure fairness over time.
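One simple monitoring signal, used here as an illustration rather than a complete fairness methodology, is the demographic parity gap: the difference in positive-outcome rates between groups. The sketch assumes the agent's decisions are logged as hypothetical `(group, approved)` pairs and uses an illustrative review threshold of 0.1.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Positive-outcome rate per group from logged (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Demographic parity gap: largest difference in approval rate between groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values()) if rates else 0.0


def check_window(decisions: list[tuple[str, bool]], threshold: float = 0.1) -> dict:
    """Flag a window of agent decisions for human review when the gap exceeds the threshold."""
    gap = parity_gap(decisions)
    return {"gap": round(gap, 3), "flag_for_review": gap > threshold}


if __name__ == "__main__":
    window = [("A", True), ("A", True), ("A", False),
              ("B", True), ("B", False), ("B", False)]
    print(check_window(window))  # {'gap': 0.333, 'flag_for_review': True}
```

Running the check on rolling windows, rather than once at deployment, matches the emphasis on monitoring over time; a flagged window is a prompt for human investigation, not proof of bias.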

Explainability and Transparency:

- Challenges: Understanding the decision-making process of RAG systems is essential for trust and accountability, especially in critical applications.

- Advanced Techniques: Explainable AI (XAI) methods are being actively researched and developed to make the workings of AI systems more transparent and understandable. This includes techniques for visualizing and interpreting model decisions and developing models that inherently provide more interpretable results.
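XAI research covers much more than this, but one lightweight form of transparency specific to RAG is source attribution: showing which retrieved passage supports each part of an answer. The sketch below is a simple lexical heuristic (Jaccard similarity of word sets), not a model-interpretation technique; the source IDs and the 0.3 threshold are hypothetical.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lower-case word set used for a simple lexical comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def attribute(answer: str, sources: dict[str, str],
              min_overlap: float = 0.3) -> list[tuple[str, Optional[str], float]]:
    """Link each answer sentence to the retrieved passage it overlaps with most.

    Sentences whose best score falls below `min_overlap` get None,
    marking them as unsupported so a reviewer can check them.
    """
    results = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        if not sentence:
            continue
        s_tok = tokens(sentence)
        best_id, best_score = None, 0.0
        for source_id, text in sources.items():
            t_tok = tokens(text)
            union = s_tok | t_tok
            score = len(s_tok & t_tok) / len(union) if union else 0.0
            if score > best_score:
                best_id, best_score = source_id, score
        supported = best_id if best_score >= min_overlap else None
        results.append((sentence, supported, round(best_score, 2)))
    return results


if __name__ == "__main__":
    sources = {
        "policy-12": "Water damage from burst pipes is covered up to $5,000.",
        "policy-40": "Flood damage requires a separate rider.",
    }
    answer = "Burst pipe water damage is covered up to $5,000. Claims are paid within a week."
    for sentence, source_id, score in attribute(answer, sources):
        print(f"{source_id or 'UNSUPPORTED'} ({score}): {sentence}")
```

Here the first sentence is linked to `policy-12`, while the second is marked unsupported because no retrieved passage backs it, which is exactly the kind of claim a reviewer should look at first.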

Despite these challenges, the opportunities presented by RAG are truly game-changing. Imagine a future where AI systems don’t just follow pre-programmed rules, but can dynamically adapt and learn based on real-time information access and reasoning. This empowers them to tackle complex tasks, make informed decisions, and even co-create with humans in new and exciting ways.

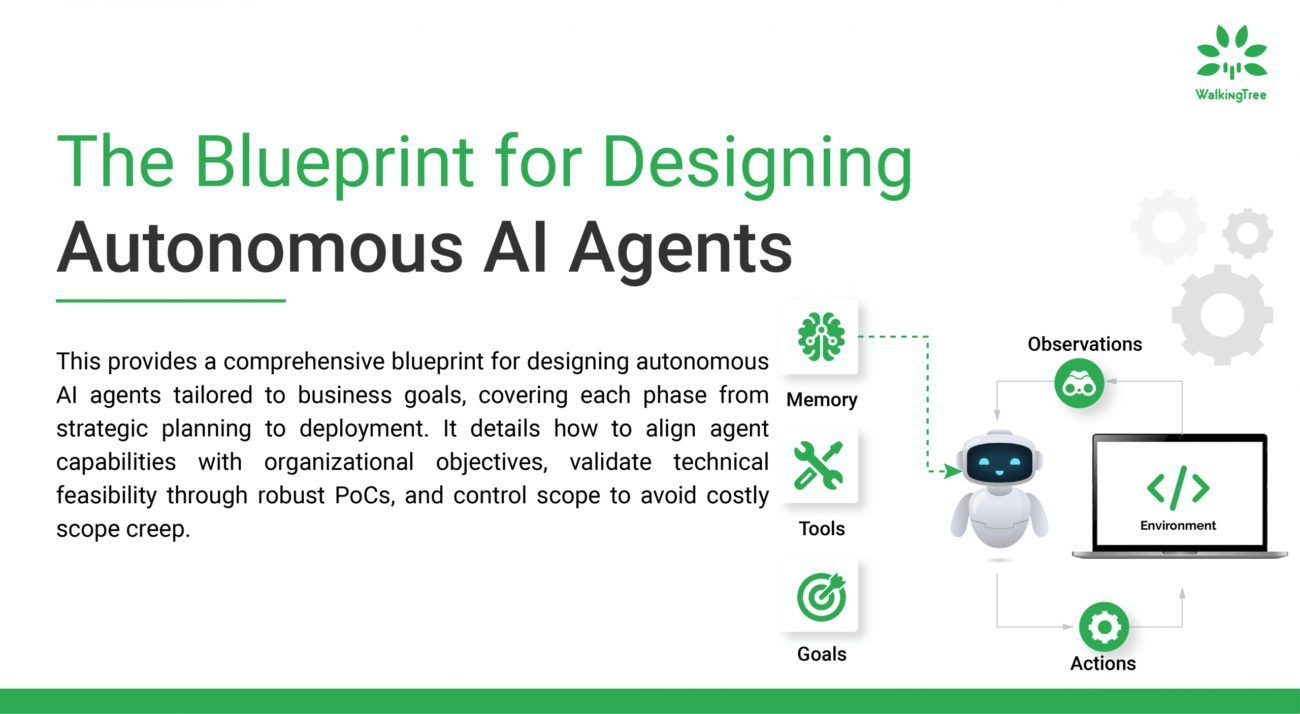

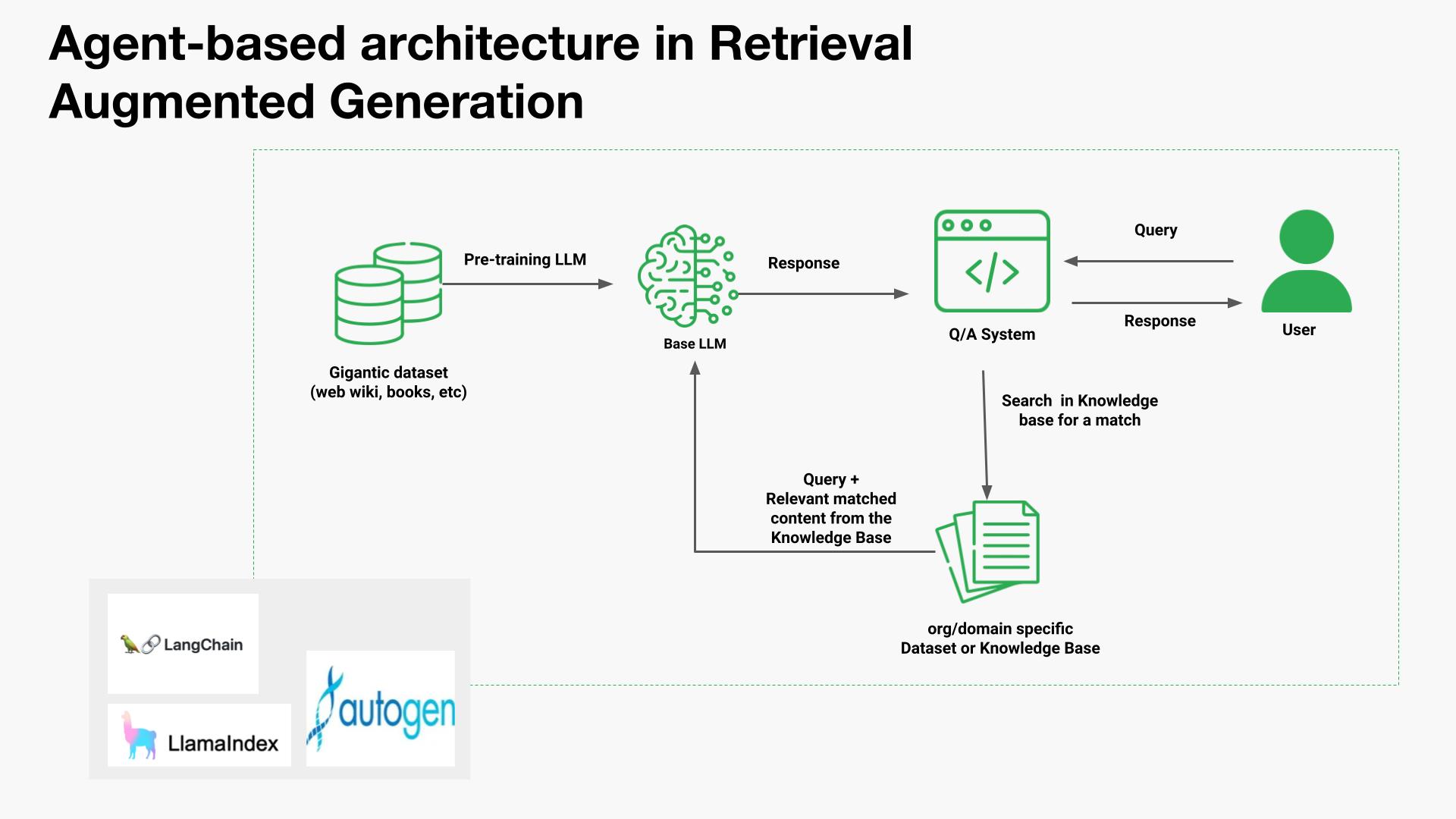

Agents, integral to LangChain and LlamaIndex, have been part of the landscape almost since the first Large Language Model (LLM) APIs appeared. These agents are designed to equip an LLM capable of reasoning with a diverse toolkit and a specific task. The toolkit can range from deterministic functions, such as code functions or external APIs, to other agents, a concept that inspired the name “LangChain” through the idea of chaining LLMs together.

While agents are a substantial topic in themselves, too broad to cover comprehensively in a brief overview of RAG, the agent-based approach to multi-document retrieval is worth noting. A notable advancement in this area is OpenAI Assistants, a feature introduced at OpenAI’s DevDay developer conference in November 2023. OpenAI Assistants enhance LLMs with a suite of tools previously available only in open-source form, including chat history, knowledge storage, document uploading interfaces, and, significantly, a function calling API. This functionality transforms natural language into API calls, connecting to external tools or database queries.

In LlamaIndex, the OpenAIAgent class combines this sophisticated logic with the ChatEngine and QueryEngine classes. This fusion enables knowledge-based and context-aware chatting capabilities, alongside the ability to make multiple OpenAI function calls within a single conversation turn, exemplifying smart agent behavior.
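The mechanism behind function calling, including several calls in a single turn, can be seen without any SDK. In the sketch below, the model's output is hard-coded as a list of requested calls whose shape (`id`, `name`, JSON-encoded `arguments`) is an assumption loosely modeled on function-calling responses. This is not the LlamaIndex `OpenAIAgent` implementation, and the tools `get_policy` and `get_claims` are hypothetical.

```python
import json
from typing import Any, Callable

# Registry of plain Python functions the model is allowed to call.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_policy(policy_id: str) -> dict:
    """Hypothetical tool; stands in for a database query."""
    return {"policy_id": policy_id, "status": "active"}


@tool
def get_claims(policy_id: str, limit: int = 5) -> list:
    """Hypothetical tool; stands in for an external API call."""
    return [f"{policy_id}-claim-{i}" for i in range(1, limit + 1)]


def dispatch(tool_calls: list[dict]) -> list[dict]:
    """Run every call the model requested in one turn and collect the results.

    Each call is assumed to look like {"id": ..., "name": ..., "arguments": "<JSON string>"}.
    Unknown tools produce an error result instead of raising, so the model can
    see the problem and recover on its next turn.
    """
    results = []
    for call in tool_calls:
        fn = TOOLS.get(call["name"])
        if fn is None:
            output: Any = {"error": f"unknown tool: {call['name']}"}
        else:
            output = fn(**json.loads(call["arguments"]))
        results.append({"id": call["id"], "output": json.dumps(output)})
    return results


if __name__ == "__main__":
    # Hard-coded stand-in for a model turn that requests two calls at once.
    model_calls = [
        {"id": "call_1", "name": "get_policy", "arguments": '{"policy_id": "P-42"}'},
        {"id": "call_2", "name": "get_claims", "arguments": '{"policy_id": "P-42", "limit": 2}'},
    ]
    for result in dispatch(model_calls):
        print(result)
```

In a real agent loop, these results would be sent back to the model, which then either answers the user or requests further calls.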

The Multi-Document Agents setup is a more complex arrangement that initializes an OpenAIAgent for each document. Each of these agents handles document summarization and the traditional question-answering (QA) mechanics. A master agent then coordinates the queries, directing them to the appropriate document agents and synthesizing the final response.

Each document agent is equipped with two primary tools: a vector store index and a summary index. It utilizes these tools based on the query directed to it. Conversely, for the master agent, all document agents collectively function as tools.
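The routing structure of this architecture can be sketched in plain Python. This is not LlamaIndex code: keyword overlap stands in for the vector store index, a fixed string stands in for the summary index, simple keyword rules replace the LLM's routing decisions, and the master agent concatenates answers where a real system would have an LLM synthesize them. All class names are hypothetical.

```python
import re
from dataclasses import dataclass, field


def words(text: str) -> set[str]:
    """Lower-case word set used for simple keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class DocumentAgent:
    """Per-document agent holding two tools: a chunk lookup (QA) and a summary."""
    name: str
    chunks: list[str]
    summary: str

    def vector_tool(self, query: str) -> str:
        # Stand-in for a vector store index: return the chunk with the most shared words.
        q = words(query)
        return max(self.chunks, key=lambda chunk: len(q & words(chunk)))

    def summary_tool(self) -> str:
        # Stand-in for a summary index built over the whole document.
        return self.summary

    def run(self, query: str) -> str:
        # Agent-level routing: summary-style requests go to the summary tool.
        if words(query) & {"summarize", "summary", "overview"}:
            return self.summary_tool()
        return self.vector_tool(query)


@dataclass
class MasterAgent:
    """Treats each document agent as a tool and combines their answers."""
    agents: list[DocumentAgent] = field(default_factory=list)

    def route(self, query: str) -> list[DocumentAgent]:
        # Send the query to the documents it names; if none is named, ask them all.
        named = [a for a in self.agents if a.name.lower() in query.lower()]
        return named or self.agents

    def run(self, query: str) -> str:
        answers = [f"{a.name}: {a.run(query)}" for a in self.route(query)]
        return "\n".join(answers)  # a real master agent would have an LLM synthesize these


if __name__ == "__main__":
    home = DocumentAgent("Home", ["Home policy covers fire and burst pipes.", "Home deductible is $500."],
                         "Home insurance for fire and water damage.")
    auto = DocumentAgent("Auto", ["Auto policy covers collisions.", "Auto deductible is $1,000."],
                         "Auto insurance for collisions.")
    master = MasterAgent([home, auto])
    print(master.run("What is the deductible for Home and Auto?"))
    print(master.run("Give me a summary of Auto"))
```

The first query fans out to both document agents for a comparison, while the second is routed to a single agent, which picks its summary tool.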

This architecture represents a refined approach to RAG, allowing for nuanced routing decisions at every agent level. The advantage of this system lies in its ability to collate and compare different solutions or entities described across various documents and summaries, as well as maintaining the standard single document summarization and QA mechanics. This versatility makes it particularly suited to complex interactions involving a collection of documents, covering a wide range of potential chat-with-document scenarios.

|The power of collaboration unites humans and AI in the RAG era

It’s important to remember that any machine automation brought about using RAG and LLMs is not about replacing human intelligence. Instead, it represents a shift towards collaborative intelligence, where humans and AI work together. Humans can define the tasks, curate knowledge bases, and evaluate the outputs of RAG systems, ensuring that they remain aligned with human values and ethical considerations.

Integrating human intelligence with the vast knowledge and learning capabilities of machines opens up a world of possibilities for process automation, education, scientific discovery, and even creative expression. As we navigate the challenges and embrace the opportunities, we can shape a future where AI empowers us to achieve unprecedented levels of understanding, efficiency, and collaboration.