Blogs

Hadoop Ecosystem

Problem Statement:

When we start learning Hadoop technology, we come across many components in Hadoop ecosystem. It would be of great interest for all of us to know the what specific purpose each component will serve within Hadoop ecosystem.

Scope of the Article:

This article talks describes the use of different components in the hadoop ecosystem

Details:

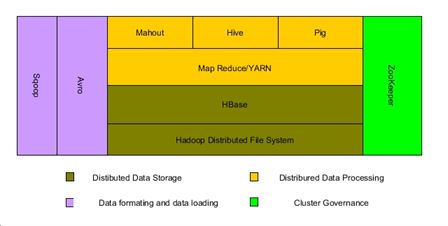

Following diagram depicts the general ecosystem of Hadoop. Not all components are mandatory. Many times one component compliments other components.

Hadoop Distributed File System(HDFS):

HDFS is a distributed file system which distributes data across multiple servers with automatic recovery in case of any node failure. It is built with the concept of write once and read multiple times. It does not support multiple writers in one go, it allows the only writer at a time. Typical Hadoop instance can withstand petabytes of data with the power of this file system.

HBase:

Hbase is distributed column oriented database where as HDFS is the file system. But it is built on top of HDFS system. HBase does not support SQL, but it solves concurrent write limitation we have in HDFS. HBase is not a replacement for HDFS. HBase internally stores the data in HDFS format. If have need for concurrent writes in your big data solution then HBase can be used.

MapReduce:

MapReduce is a framework for distributed parallel data processing. It provides a programming model for large data processing. MapReduce programs can be written in Jave, Ruby, Python and C++. It has an inherent capability to run the programs in parallel across multiple nodes in a big data cluster. As processing has is distributed across multiple nodes we can expect better performance and throughput. MapReduce performs data processing in two stages i.e. map and reduce. The map will convert an input data in the intermediate data format which is basically and key value pair. Reduce will combine all the maps which share the common key and generates reduced set of key value pairs. It has two components i.e. Job tracker and task tracker. Job tracker acts like master and sends commands to slaves for a specific task. Task tracker will take care of the real execution of the task and report back to the job tracker

YARN:

YARN means ‘yet another resource negotiator’. Map reduce was rewritten to overcome the potential bottleneck of single job tracker in old mapreduce which has responsibilities of job scheduling and monitoring task progress. Now YARN divides that into those two responsibilities into two seperate deamons i.e. resource manager and application master. Existing mapreduce programs can work directly on YARN but some times we need make some changes.

Avro:

Avro is data serialization format which brings data interoperability among mutlple components of apache hadoop. Most of the components in hadoop started supporting Avro data format. It works with basic premise of data produced by component should be readily consumed by other component.

Avro has following features

-

- Rich data types.

- Fast and compact serialization

- Support many programming langguages like java, Python

Pig:

Pig is platform for big data analysis and processing. Then immediate question comes to our mind that map reduce is also serving same purpose then what other benefits pig is providing. Pig adds one more level abstraction in data processing and it makes writing and maintaining data processing jobs very easy. At the time of compilation, pig script will be converted into multiple map reduce programs and they will be executed as per the logic written in pig script. Pig has two pieces

-

- The lanuguage to write programs which is named as Pig Latin

- Execution environment where pig scripts will be executed

Pig can process tera bytes of data with half dozen lines of code.

Hive:

Hive is a dataware housing framework on top of Hadoop. Hive allows to write SQL like queries to process and analyze the big data stored in HDFS. Hive is primarly intended for the resources who want to process big data but does not have programming background around java or other related technologies. While execution hive scripts will be converted to series of mapreduce jobs.

Sqoop:

Sqoop is tool which can be used to transfer the data from relational database environments like oracle, mysql and postgresql into hadoop environment. It can transfter large amount of data into hadoop system. It can store the data in HDFS in arvo fromat.

Zookeeper:

Zookeeper is a distributed coordination and governing service for hadoop cluster. Zookeeper runs on multiple nodes in a cluster and in general hadoop nodes and zookeeper nodes will be same. Zookeeper can notify in case of any changes happened master or any of its child. In hadoop this will be useful to track if particular node is down and plan necessary communication protocol around node failure.

Mahout:

Mahout adds data mining and machine learning capabilities for big data. It can be used to build recommendation engine based on certain usage patterns of user.

Summary:

In this article we understood hadoop ecosystem and learned about different components and their primary purpose.

Great content is useful for all the candidates Hadoop training who want to kick start these career in Hadoop field.

Great Blog | I appreciate your work on Hadoop. It’s a great post. It’s such a wonderful read on Hadoop tutorial. Keep sharing such kind of worthy information.

Great content is useful for all the candidates Hadoop training who want to kick start these career in Automation Hadoop training field.

It’s really nice information to share here. Thanks for your blog, keep posting like this regularly. Thank you