LangServe Unveiled: Simplifying API Deployment with LangChain

In the fast-changing software development landscape, efficiency and simplicity matter more than ever. It is no surprise that developers are always on the lookout for tools that simplify their workflows and make complex systems easier to deploy. Enter LangServe, a library that helps developers deploy LangChain runnables and chains as REST APIs.

LangServe is an open-source library from the LangChain ecosystem that makes it easier to build API servers around your chains. It exposes remote APIs for the core LangChain Expression Language (LCEL) methods such as invoke, batch, and stream. It also ships a client that lets you call a deployed chain just like any other runnable in LangChain.

It turns LangChain runnables and chains into easily accessible REST APIs. Beyond this basic integration, it is built on FastAPI and relies on Pydantic for data validation.

With LangServe, your LangChain objects become RESTful APIs with little effort, so developers can deploy their solutions efficiently. FastAPI provides the web layer, while Pydantic takes care of validating the data that flows in and out.

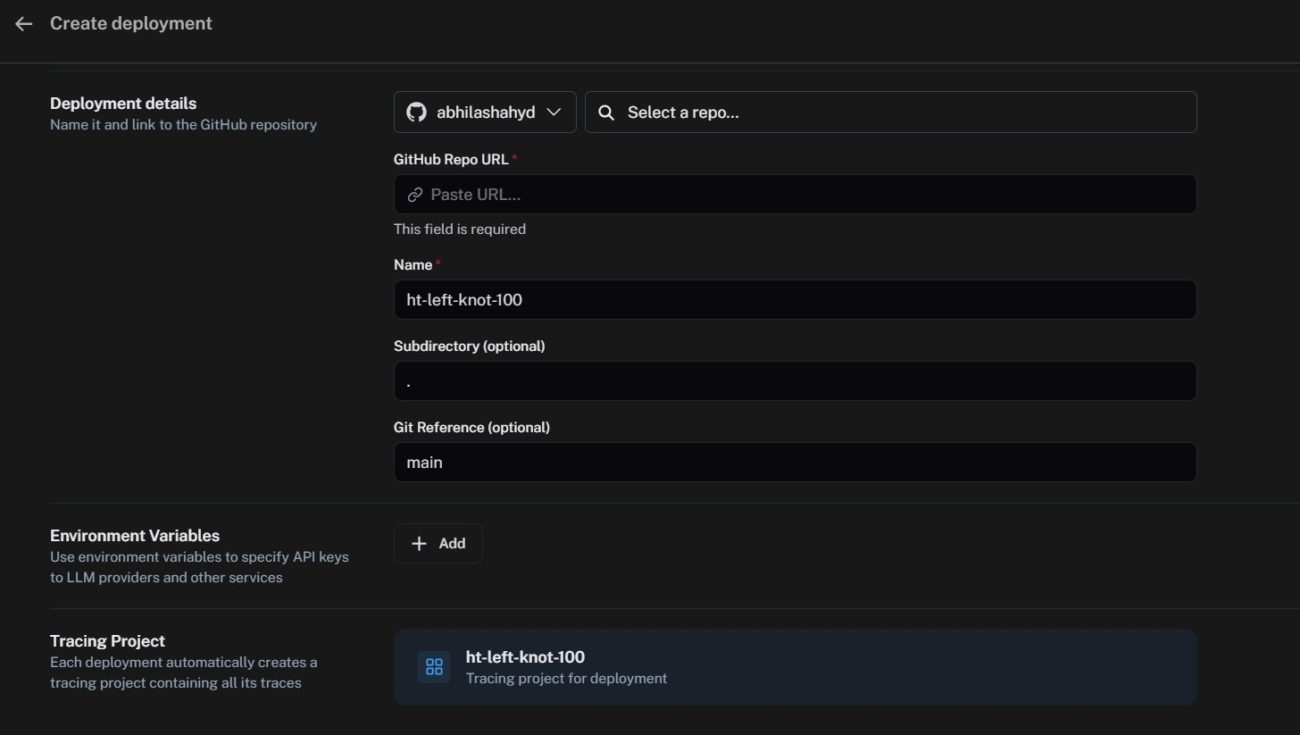

Hosted LangServe simplifies hosting LangServe applications and is part of LangSmith's fully managed SaaS offering. It connects directly to your application code on GitHub, builds it into a container, and deploys it to the internet. To use Hosted LangServe, you need a GitHub repository containing a LangServe application with a Dockerfile that builds the repository into a container. Once these conditions are met, deploying your application becomes a streamlined one-click process, giving you a hassle-free hosting solution.

Hosted LangServe is currently available through a waitlist, and I am thankful to the LangChain team for giving us early access to this feature.

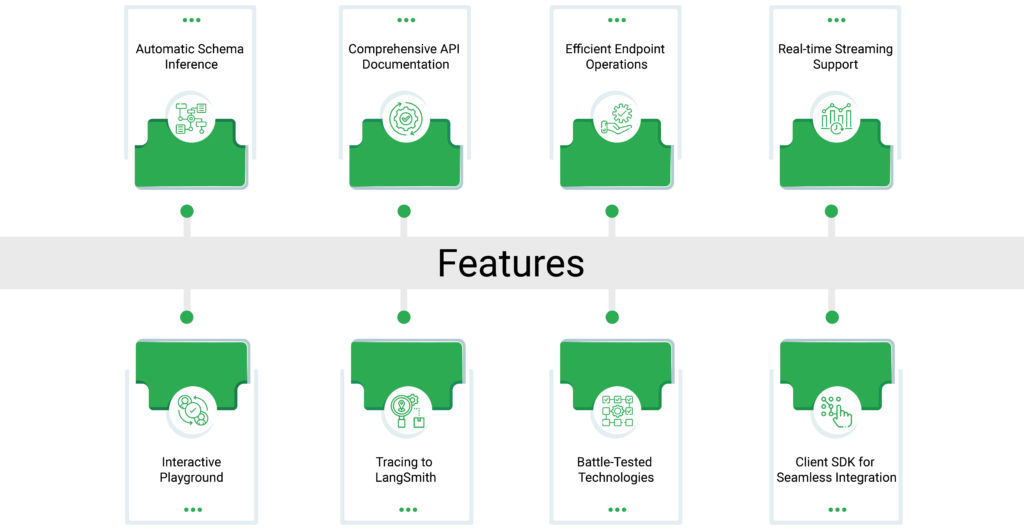

LangServe offers a rich set of features that make it a strong choice for API deployment:

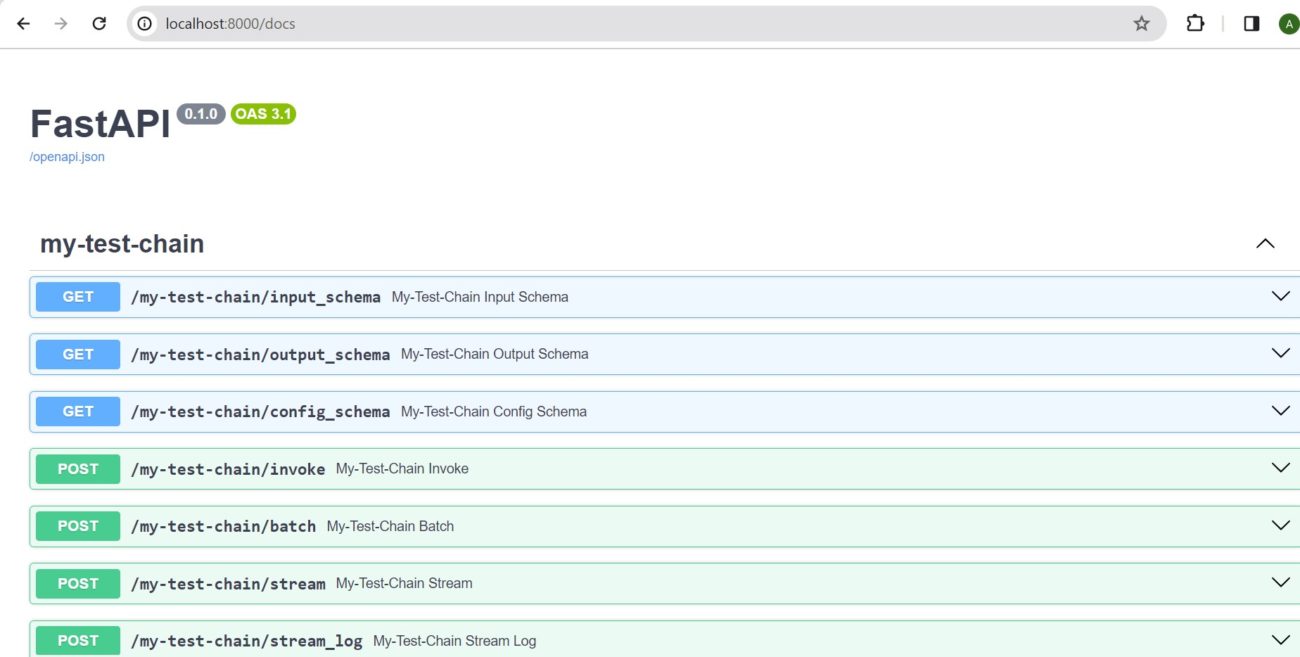

- Automatic Schema Inference: Input and output schemas are inferred automatically from your LangChain object. Every API call is validated against these schemas, which keeps data consistent, and rich error messages make troubleshooting easier.

- Comprehensive API Documentation: LangServe does not stop at deployment; it also generates an API documentation page with JSONSchema and Swagger, giving developers a clear view of the API for easy integration.

- Efficient Endpoint Operations: The library provides /invoke/, /batch/ and /stream/ endpoints that can handle many concurrent requests on a single server, which helps your APIs keep up as demand grows.

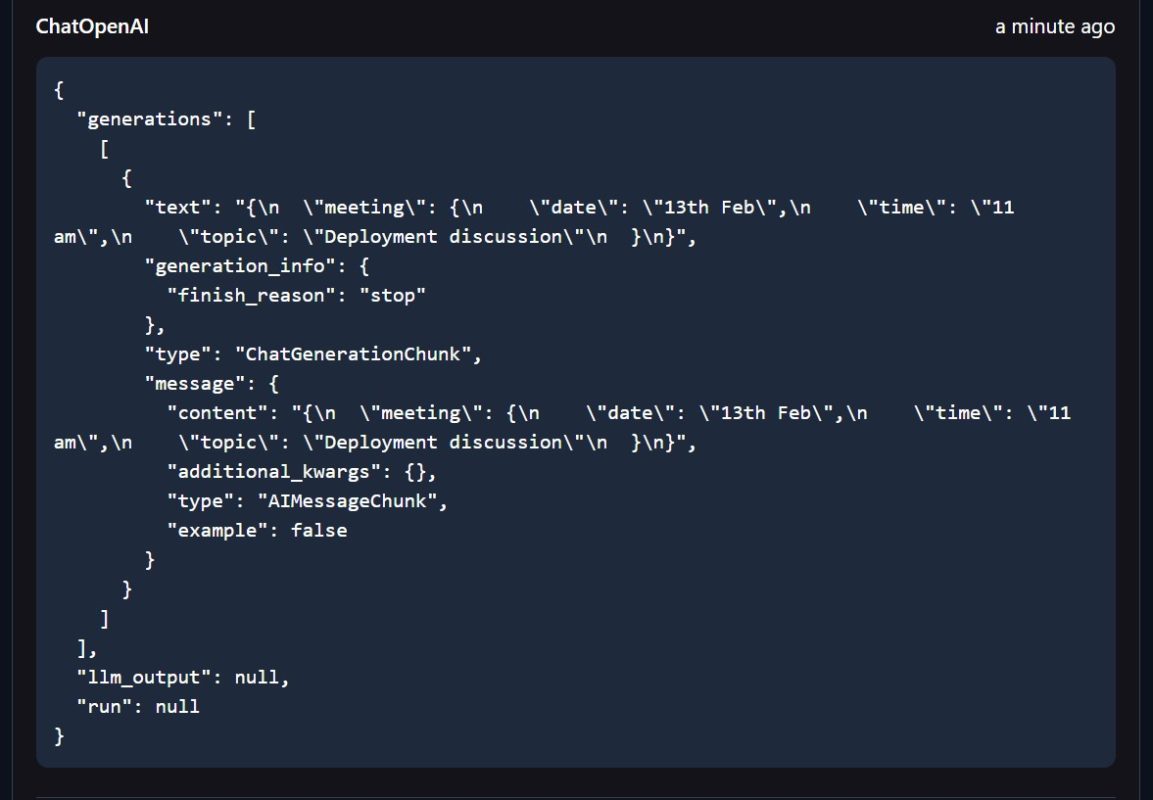

- Real-time Streaming Support: The /stream_log/ endpoint streams all (or a selected subset) of the intermediate steps from your chain or agent in real time, giving you insight into how each step behaves in a deployed API.

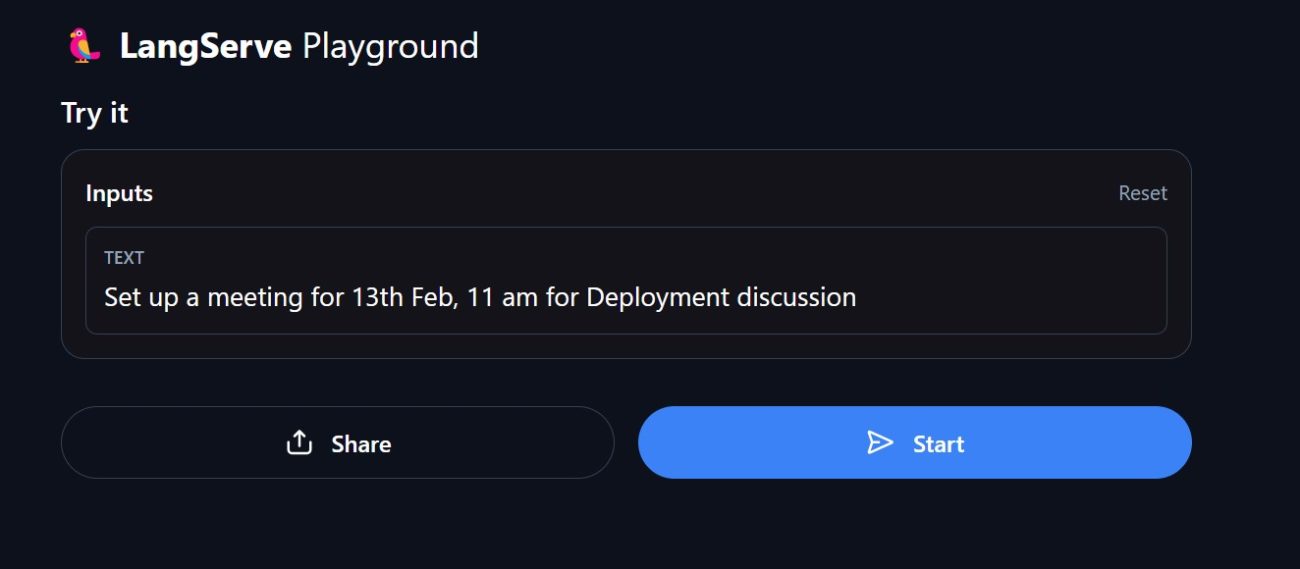

- Interactive Playground: The /playground/ page is a developer-friendly interactive page that shows streaming output and intermediate steps. It is a convenient place for testing and refining your deployed APIs.

- Tracing to LangSmith: LangServe has built-in, optional tracing to LangSmith. Just add your API key as described in the instructions, and you can trace your LangChain objects.

- Battle-Tested Technologies: For robustness, LangServe builds on proven open-source Python libraries such as FastAPI, Pydantic, uvloop, asyncio and friends, which do all the heavy lifting.

- Client SDK Integration: The LangServe client SDK lets developers call a LangServe server as if it were a Runnable running locally. Alternatively, developers can call the HTTP API directly for more fine-grained control.

Now that we have covered LangServe's capabilities, let's try it out and see it in action.

Getting started

To get started, we will first install the required libraries:

LangChain CLI: a command-line tool whose commands make it easy to get up and running when building LangChain apps

pip install -U langchain-cli

Poetry: a dependency management tool, well suited to large-scale Python applications

On macOS, you can use the command below:

curl -sSL https://install.python-poetry.org | python3 -

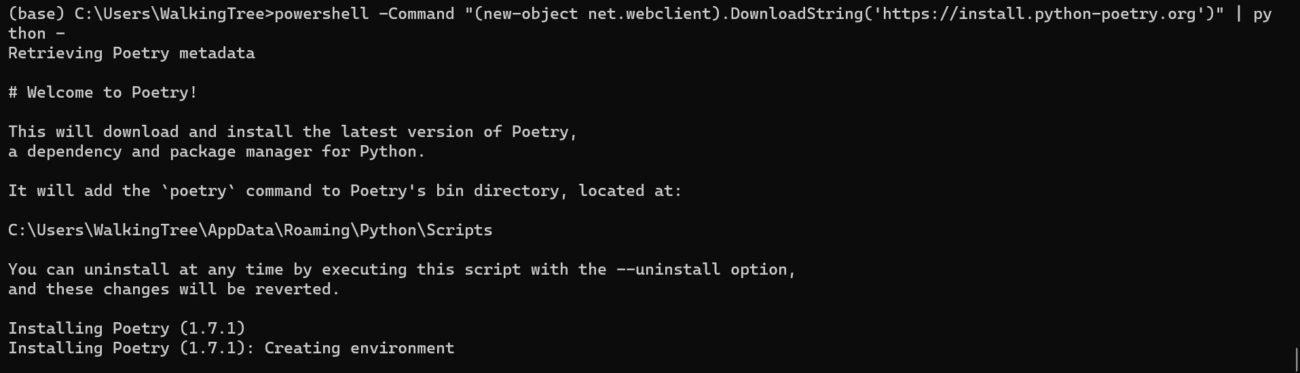

On Windows, you can use the following command to install Poetry:

powershell -Command "(new-object net.webclient).DownloadString('https://install.python-poetry.org')" | python -

The screenshot shows the completed Poetry installation.

Next, add Poetry's bin directory (C:\Users\WalkingTree\AppData\Roaming\Python\Scripts) to your `PATH` environment variable.

Alternatively, you can call Poetry explicitly using its full path, e.g. `C:\Users\WalkingTree\AppData\Roaming\Python\Scripts\poetry`.

You can test that everything is set up by executing:

`poetry --version`

Now you are ready to create your own application.

Create a new application

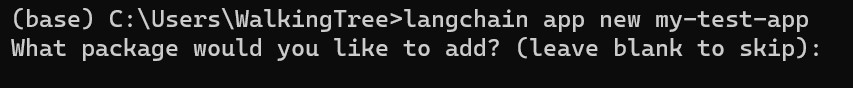

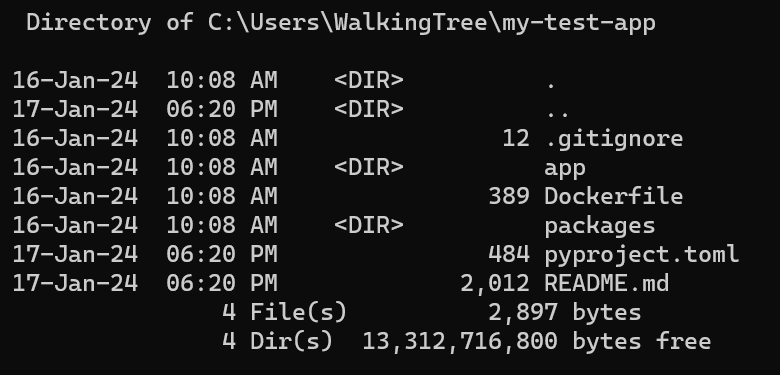

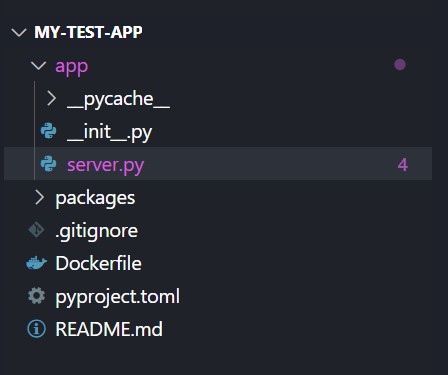

To create a new app called my-test-app, run:

langchain app new my-test-app

This creates a new folder called my-test-app (when prompted to add packages, skip the question).

Set up a new chain

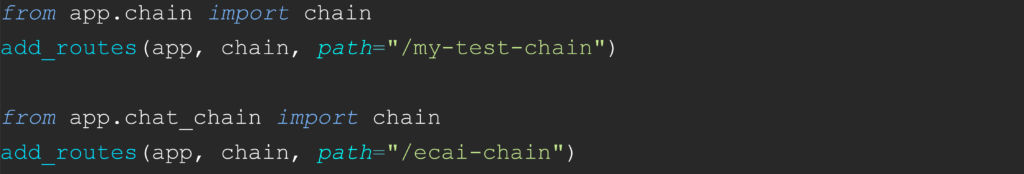

To create a simple app route, we first modify server.py to include the route as shown below:

We can add as many routes as needed; all of them are hosted on the same app. For each chain, we put the implementation logic in a separate .py file that defines its behavior.

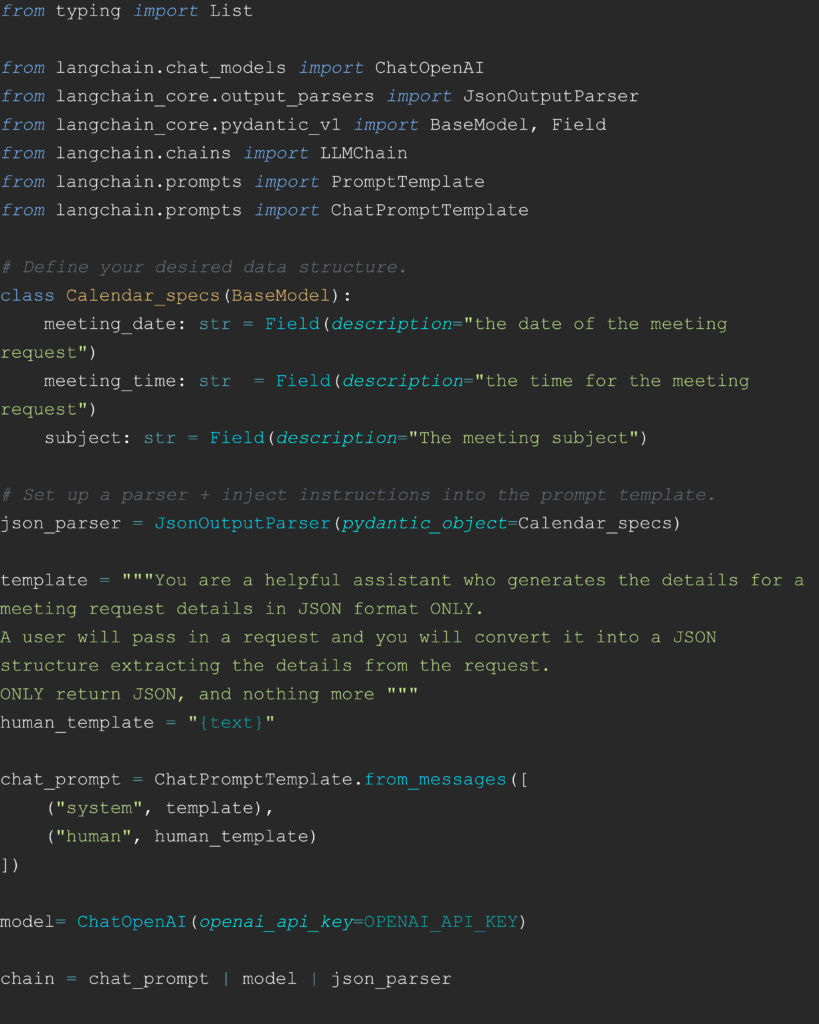

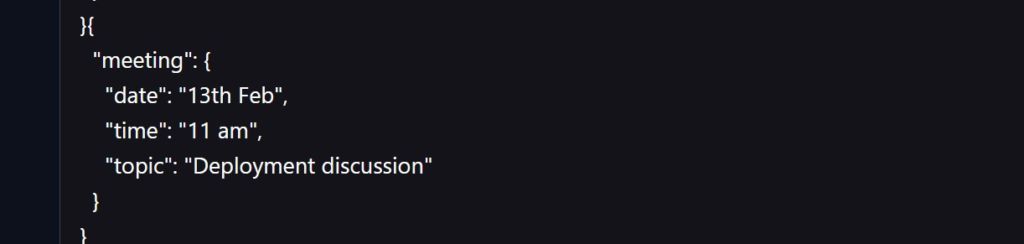

Below is the code for a chain that converts free text into a meeting request in JSON format, which is then parsed by the JSON output parser we defined.

The code above defines the chain using the LangChain Expression Language (LCEL), combining the prompt template, the model and the output parser. This defines the behavior of the chain. Similarly, we can define more complex chains that combine different components and tools as required, and expose them through the API endpoint.

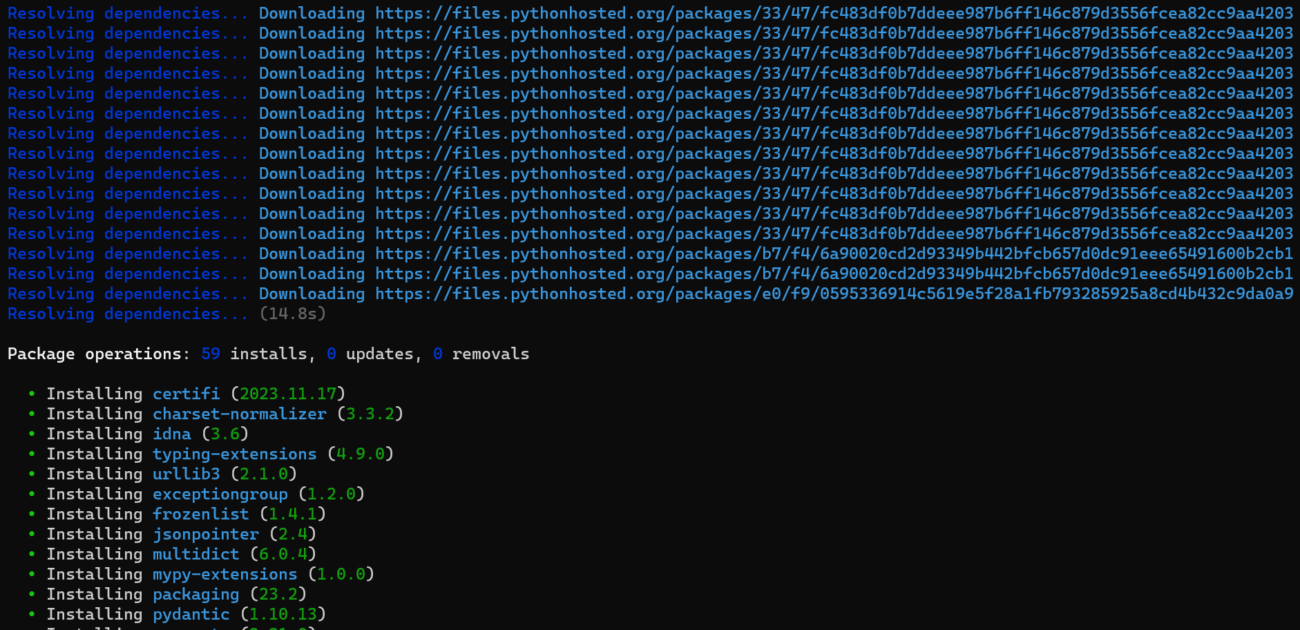

Adding Dependencies

Add any dependencies the chain needs using Poetry, as shown below:

poetry add openai langchain-core

Run locally

You can now run your application locally using the below commands:

poetry install

poetry run langchain serve

You can test out the app locally in the playground using the URL http://localhost:8000/ecai-chain/playground/

You can see the streaming output along with intermediate steps.

You can also browse the generated API documentation, which FastAPI serves at http://localhost:8000/docs.

Deploying with Hosted LangServe

Push the code to GitHub and now you are ready to deploy.

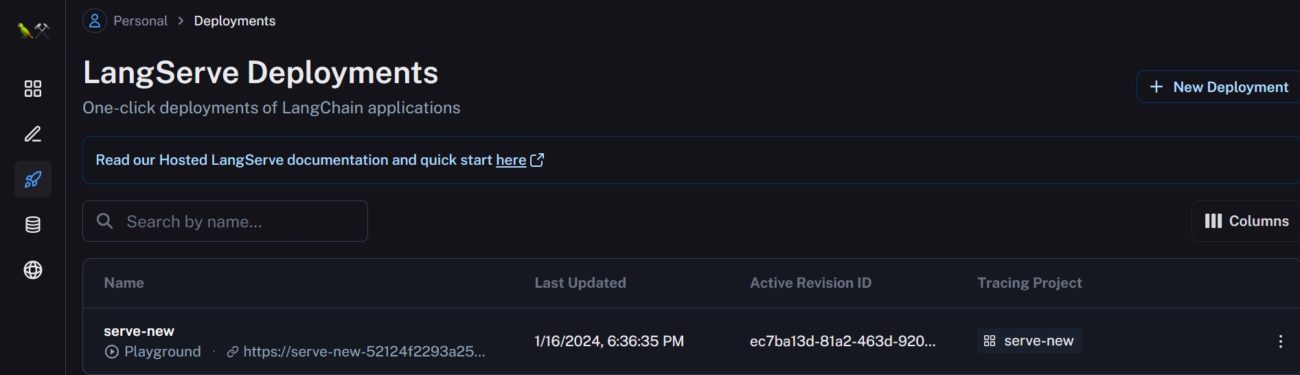

Go to smith.langchain.com; the rocket icon in the left menu takes you to LangServe.

Here you can view all your deployments and add a new one using the button at the top right, which opens the form shown below.

You can enable tracing with LangSmith by adding the relevant parameters. Include any required environment variables in this form (e.g. OPENAI_API_KEY) and submit it.
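When running the same app locally, these settings come from environment variables instead of the form. The sketch below is a hypothetical startup check, assuming the LangSmith variable names `LANGCHAIN_TRACING_V2`, `LANGCHAIN_API_KEY` and `LANGCHAIN_PROJECT` used by LangChain, plus the `OPENAI_API_KEY` mentioned above. The project name and helper names are illustrative only.

```python
import os
from collections.abc import Mapping, MutableMapping

# Tracing switches; the project name is a hypothetical example.
TRACING_DEFAULTS = {
    "LANGCHAIN_TRACING_V2": "true",
    "LANGCHAIN_PROJECT": "my-test-app",
}

# Secrets the app needs before it can serve requests with tracing on.
REQUIRED_SECRETS = ["OPENAI_API_KEY", "LANGCHAIN_API_KEY"]


def missing_settings(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the names of required secrets that are unset or empty."""
    return [name for name in REQUIRED_SECRETS if not env.get(name)]


def enable_tracing(env: MutableMapping[str, str] = os.environ) -> None:
    """Turn on LangSmith tracing without overwriting values already provided."""
    for name, value in TRACING_DEFAULTS.items():
        env.setdefault(name, value)
```

Calling `enable_tracing()` and failing fast when `missing_settings()` is non-empty surfaces configuration problems before the first request instead of at request time.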

The deployment takes some time, after which your endpoint is ready to use.

You can follow the same steps to add your own logic as separate chains and deploy them, so that your web application code can consume them through the generated endpoints.
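For application code that should not depend on the LangServe SDK, a deployed route can be called over plain HTTP. This standard-library sketch assumes the `{"input": ...}` request body of LangServe's `/invoke` endpoint; `BASE_URL` is a hypothetical placeholder for the URL shown on your deployment page, and any authentication your deployment may require is not covered.

```python
import json
import urllib.request

# Hypothetical deployment URL: replace with the one from your deployment page.
BASE_URL = "https://your-deployment.example.com/ecai-chain"


def build_invoke_request(base_url: str, chain_input: dict) -> urllib.request.Request:
    """Build a POST to <route>/invoke carrying LangServe's {"input": ...} body."""
    body = json.dumps({"input": chain_input}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(base_url: str, chain_input: dict, timeout: float = 30.0):
    """Send the request and return the "output" field of the JSON response."""
    request = build_invoke_request(base_url, chain_input)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["output"]
```

Separating request construction from sending keeps the payload format easy to check without a live deployment.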

Conclusion

In this blog, we introduced LangServe, its features, and the benefits it brings to API deployment for LLM-based applications in the LangChain ecosystem. Whether you're a seasoned developer or an enthusiastic beginner exploring new horizons, LangServe turns your LangChain runnables and chains into stable APIs that are easy to experiment with and take smoothly to production.