Fine-Tuning Open Source Language Models: Enhancing AI solutions for your unique needs

In the rapidly evolving landscape of artificial intelligence, the power to customize and optimize models for specific applications is more accessible than ever. Open-source language models, like those from Meta and Mistral, offer robust frameworks and pre-trained models that can be adapted to meet a variety of needs. However, the real magic happens when you fine-tune these models to suit your unique requirements.

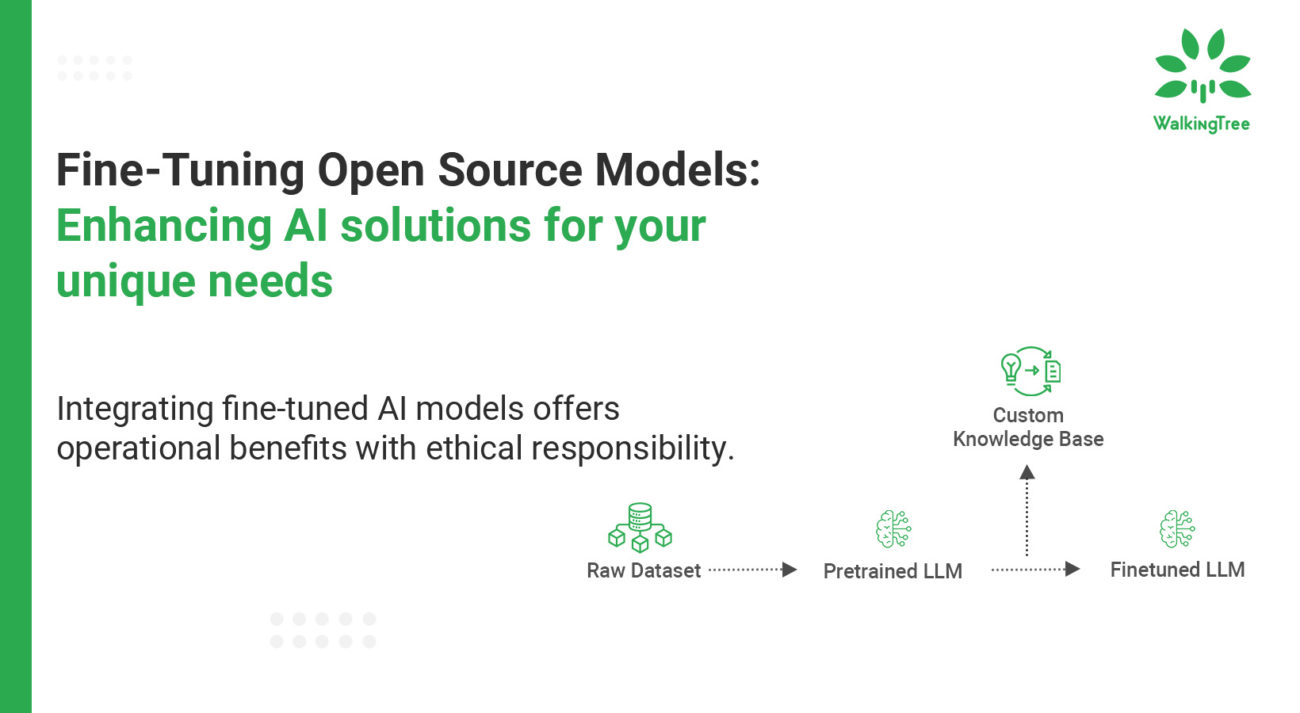

Fine-tuning is the process of taking a pre-trained language model and adjusting it to perform better on a specialized task or dataset. This approach not only saves time and resources but also leverages the vast amounts of data and computational power already invested in creating the original model.

Fine-tuning tailors a pre-trained large language model (LLM) to a specific domain or task by adjusting its parameters on a smaller dataset, so that the resulting AI solution is not only powerful but also matched to specific operational needs. It works best when you have a small, well-organized dataset that resembles the original training data of the pre-trained model. If not done carefully, however, fine-tuning can lead to overfitting or to catastrophic forgetting, where the model loses capabilities it had before fine-tuning.

|Fine-Tuning

Adopting pre-trained open-source language models reduces the time and resources needed compared with developing a model from scratch, and it gives you full control over the model and transparency into how it works. However, to unlock their full potential in specialized applications, ranging from customer service to nuanced analytical tasks, fine-tuning can be very effective and can substantially improve model performance. The process adjusts the model’s parameters to align closely with the unique data and tasks of your business, improving both the efficiency and accuracy of AI implementations.

Different types of fine-tuning can adapt an LLM to a specific domain or task:

Instruction Tuning:

- Provides precise control over the LLM.

- Uses structured instructions to guide the LLM’s behavior.

- Ideal for generating specific types of content or responses.

- No change to the model’s architecture or training objective.
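As a rough illustration of the structured instructions mentioned above, instruction-tuning data is often stored as instruction/response records that are rendered into one training string per example. The template, the function name `format_example`, and the field names below are hypothetical; in practice you would use the format expected by the model and tooling you fine-tune with.

```python
from typing import Dict

# Hypothetical prompt template (an assumption for illustration only).
PROMPT_TEMPLATE = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{context}\n\n"
    "### Response:\n{response}"
)


def format_example(record: Dict[str, str]) -> str:
    """Render one instruction-tuning record as a single training string."""
    return PROMPT_TEMPLATE.format(
        instruction=record["instruction"].strip(),
        context=record.get("context", "").strip(),
        response=record["response"].strip(),
    )


example = {
    "instruction": "Classify the sentiment of the customer message.",
    "context": "The replacement part arrived two days early. Thank you!",
    "response": "positive",
}

print(format_example(example))
```

Keeping the template in one place makes it easier to apply exactly the same format at training time and at inference time.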

Domain Adaptation:

- Develops expertise in a specific field.

- Updates parameters with domain-specific data.

- Useful for understanding specialized documents like legal or medical texts.

Task Adaptation:

- Adds a task-specific layer to the LLM.

- Updates parameters with task-specific data.

- Aims to improve performance and efficiency for specific tasks.

Parameter-efficient fine-tuning (PEFT) is a family of techniques for adapting large language models (LLMs) to specific tasks or domains while changing as few of the model’s parameters as possible. The main goal is to reduce the computational and memory costs associated with fine-tuning. The key points about parameter-efficient fine-tuning:

- Selective Adjustment: Instead of updating all the parameters of the model, only a small subset of parameters is adjusted. This reduces the computational load and the risk of overfitting.

- Efficiency: By focusing on a smaller number of parameters, PEFT reduces the resources required for fine-tuning, making it feasible to adapt very large models even on limited hardware.

- Techniques:

  - Adapters: Small modules added between layers of the LLM that are tuned for the new task while the original model parameters stay mostly unchanged.

  - Low-Rank Adaptation (LoRA): Freezes the original model parameters and learns a low-rank update to selected weight matrices, expressed as the product of two small matrices, which sharply reduces the number of parameters that need to be fine-tuned.

  - Prefix Tuning: Adds trainable task-specific tokens (prefixes) to the input sequence and tunes only the embeddings of these prefixes.
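To make the savings concrete, here is a small back-of-the-envelope sketch. It assumes the usual LoRA setup in which the update to a `d_out x d_in` weight matrix is represented by two trainable matrices of shapes `d_out x r` and `r x d_in`; the dimensions and rank below are illustrative values, not figures from this article.

```python
def full_param_count(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a rank-`rank` LoRA update (B: d_out x r, A: r x d_in)."""
    return rank * (d_in + d_out)


# Illustrative sizes (assumed): one square 4096 x 4096 projection, rank 8.
d_in = d_out = 4096
rank = 8

full = full_param_count(d_in, d_out)
lora = lora_param_count(d_in, d_out, rank)
print(f"full fine-tuning: {full:,} parameters")
print(f"LoRA (r={rank}): {lora:,} parameters ({lora / full:.2%} of full)")
```

For these assumed sizes, the LoRA update trains well under one percent of the parameters in that matrix, which is why adapters are cheap to train and store.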

The Predibase team has developed an LLM serving infrastructure designed to deploy many fine-tuned models efficiently on a shared set of GPU resources. This approach is called LoRA Exchange (LoRAX). Unlike traditional methods for serving large language models, LoRAX lets users consolidate up to 100 fine-tuned, task-specific models onto a single GPU. For serving hundreds of fine-tuned adapters for various tasks, LoRAX stands out because each adapter makes only minimal yet strategic modifications to the model parameters while the base model is shared. This significantly reduces computational costs and shortens the training time, which is essential for swiftly deploying AI solutions across business functions without incurring prohibitive expenses.
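The idea of many adapters sharing one base model can be illustrated with a toy example. This is not LoRAX’s implementation or API; it is a minimal pure-Python sketch, with hypothetical names such as `SharedBaseServer`, that only shows why a small low-rank adapter per task is cheap to keep alongside a single copy of the base weights.

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class SharedBaseServer:
    """Toy model: one frozen base weight matrix plus named low-rank adapters."""

    def __init__(self, base_weight: Matrix) -> None:
        self.base_weight = base_weight  # stored once, shared by all tasks
        self.adapters: Dict[str, Tuple[Matrix, Matrix]] = {}

    def register_adapter(self, name: str, a: Matrix, b: Matrix) -> None:
        # a has shape (r x d_in) and b has shape (d_out x r).
        self.adapters[name] = (a, b)

    def forward(self, x: Vector, adapter: Optional[str] = None) -> Vector:
        out = matvec(self.base_weight, x)
        if adapter is None:
            return out
        a, b = self.adapters[adapter]
        delta = matvec(b, matvec(a, x))  # low-rank correction B(Ax)
        return [o + d for o, d in zip(out, delta)]


server = SharedBaseServer(base_weight=[[1.0, 0.0], [0.0, 1.0]])
server.register_adapter("task_a", a=[[1.0, 1.0]], b=[[1.0], [0.0]])
server.register_adapter("task_b", a=[[0.0, 1.0]], b=[[0.0], [2.0]])

x = [1.0, 2.0]
print(server.forward(x))            # base model only
print(server.forward(x, "task_a"))  # base plus the task_a adapter
print(server.forward(x, "task_b"))  # base plus the task_b adapter
```

Each request selects an adapter by name, while the base weights are never duplicated; a real serving system additionally has to handle batching, adapter loading, and GPU memory management.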

You can read more about the internals of LoRAX here.

|A guide to Fine-Tuning your AI model

In this blog, we show the main steps to fine-tune the 4-bit quantized Mistral 7B open-source LLM. Predibase and Unsloth provide fine-tuning notebooks; the links are shared at the end of the blog and can be used for the fine-tuning process.

Consider the following critical steps:

- Data Preparation: Proper data preparation is the foundation of successful fine-tuning. In many cases, you may decide to use a larger, more capable model to generate the tuning dataset, assuming that model gives better results for your use case.

- Load the Model: To use the Mistral 7B model, load it with Hugging Face’s transformers library and pass the quantization configuration as a parameter:

  - model_checkpoint = 'mistralai/Mistral-7B-v0.1'

  - Load the model in 4 bits using NF4 (4-bit NormalFloat), introduced in the QLoRA paper, which tends to provide better performance than FP4.

  - Enable double quantization by setting bnb_4bit_use_double_quant to True, which saves roughly 0.4 bits per parameter.

  - Set bnb_4bit_compute_dtype to torch.bfloat16, so that matrix multiplications run in a 16-bit dtype instead of the default 32-bit torch.float32.

- Model Adjustment and Training: Choosing the LoRA configuration and the layers to adapt, based on their potential impact on your specific task, is crucial. Below is a snippet demonstrating the setup and initiation of model training using the Hugging Face Transformers library:

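from transformers import AutoModelForSequenceClassification, Trainer, TrainingArguments

# Projection layers to adapt with LoRA in a Mistral-style model. Pass this list as
# target_modules in the LoRA configuration; the Trainer below does not consume it.
target_lora_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                       "gate_proj", "up_proj", "down_proj"]

# This example loads a small BERT classifier; substitute the quantized
# Mistral 7B model when following the steps above.
model = AutoModelForSequenceClassification.from_pretrained('bert-base-uncased')

training_args = TrainingArguments(
    output_dir='./results', num_train_epochs=3, per_device_train_batch_size=16,
    warmup_steps=500, weight_decay=0.01, logging_dir='./logs', logging_steps=10,
)

# train_dataset and eval_dataset are assumed to be tokenized datasets prepared beforehand.
trainer = Trainer(
    model=model, args=training_args, train_dataset=train_dataset, eval_dataset=eval_dataset
)

trainer.train()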

- Evaluation and Testing: After training, it’s essential to evaluate the model against a separate validation dataset to ensure it performs as expected. The following code snippet provides a method to compute key performance metrics:

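from sklearn.metrics import accuracy_score, precision_recall_fscore_support

def compute_metrics(pred):
    # pred carries the true labels and the raw model outputs (logits).
    labels = pred.label_ids
    preds = pred.predictions.argmax(-1)
    # average='binary' assumes a two-class task; use 'macro' or 'weighted' otherwise.
    precision, recall, f1, _ = precision_recall_fscore_support(labels, preds, average='binary')
    acc = accuracy_score(labels, preds)
    return {'accuracy': acc, 'f1': f1, 'precision': precision, 'recall': recall}

# Attach the metric function so that evaluate() reports these metrics alongside the loss.
trainer.compute_metrics = compute_metrics
results = trainer.evaluate()
print(results)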
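The model-loading step described in this guide (4-bit NF4, double quantization, bfloat16 compute), combined with a LoRA configuration over the projection layers listed earlier, could be sketched as follows. This is a configuration sketch rather than the exact notebook code: it assumes the `transformers`, `peft`, and `bitsandbytes` packages and a CUDA GPU are available, and the LoRA hyperparameters (`r`, `lora_alpha`, `lora_dropout`) are illustrative assumptions, not values taken from this article.

```python
import torch
from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

model_checkpoint = "mistralai/Mistral-7B-v0.1"

# 4-bit quantization settings described in the "Load the Model" step.
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",              # NF4 rather than FP4
    bnb_4bit_use_double_quant=True,         # also quantize the quantization constants
    bnb_4bit_compute_dtype=torch.bfloat16,  # run matrix multiplications in bfloat16
)

tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)
model = AutoModelForCausalLM.from_pretrained(
    model_checkpoint,
    quantization_config=bnb_config,
    device_map="auto",
)

# Prepare the quantized model for training, then attach LoRA adapters.
model = prepare_model_for_kbit_training(model)

lora_config = LoraConfig(
    r=16,               # assumed rank, tune for your task
    lora_alpha=32,      # assumed scaling factor
    lora_dropout=0.05,  # assumed dropout
    target_modules=["q_proj", "k_proj", "v_proj", "o_proj",
                    "gate_proj", "up_proj", "down_proj"],
    bias="none",
    task_type="CAUSAL_LM",
)
model = get_peft_model(model, lora_config)

# Report how many parameters are trainable compared with the frozen base model.
model.print_trainable_parameters()
```

The resulting `model` can then be passed to a `Trainer` in place of the classifier used in the training snippet, together with datasets tokenized by `tokenizer`.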

By following the main steps above, which are also shown in the notebooks, you can fine-tune the model to your specific requirements and bring a further layer of control and predictability to your results. In our next technical blog, we will cover in detail how we fine-tuned the Mistral 7B base model for Named Entity Recognition (NER) using Predibase.

|Challenges and solutions

| Challenge | Description | Solution |
| --- | --- | --- |
| Data Diversity and Representation | Inadequate data diversity can lead to biased or underperforming models that fail to handle edge cases effectively. | Gather and curate diverse datasets that reflect varied demographics and scenarios relevant to operations. Use data augmentation to enhance the richness of datasets. |
| Overfitting | Overfitting occurs when a model is too closely fitted to the training data, affecting its performance on unseen data. | Implement regularization techniques such as dropout, early stopping, or L2 regularization penalties. Use a validation set to monitor and prevent overfitting. |
| Computational Resources | Fine-tuning large models requires significant computational power and time, which can be a barrier for some organizations. | Leverage cloud computing resources for scalable AI training capabilities. Use transfer learning to fine-tune only a portion of the model, reducing computational demands. |
| Model Generalization | Ensuring that a fine-tuned model generalizes well to new, unseen datasets is critical for its practical application. | Employ cross-validation techniques to validate the training process across multiple data subsets, ensuring robust model generalization. |
| Alignment with Business Objectives | There can be a disconnect between model performance metrics and actual business objectives. | Define clear, quantifiable metrics that align closely with business goals. Involve domain experts to ensure the model’s outputs are relevant and valuable to the business. |
| Ethical Considerations and Bias | Models can inadvertently learn and perpetuate biases present in the training data. | Implement rigorous bias detection and mitigation strategies during data preparation and model training. Conduct regular audits and updates to AI systems to identify and address biases. |
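As one example of the overfitting mitigations in the table, early stopping can be sketched in a few lines. This is a minimal, framework-independent illustration; the class name `EarlyStopping`, the helper `epochs_until_stop`, and the `patience` and `min_delta` values are assumptions chosen for the example.

```python
from typing import List


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` checks."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.bad_checks = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best_loss - self.min_delta:
            # Validation loss improved: remember it and reset the counter.
            self.best_loss = val_loss
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


def epochs_until_stop(val_losses: List[float], patience: int = 3) -> int:
    """Return the 1-based epoch at which training would stop."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses, start=1):
        if stopper.should_stop(loss):
            return epoch
    return len(val_losses)


# Made-up validation losses: improvement stalls after the third epoch.
losses = [1.00, 0.80, 0.70, 0.72, 0.71, 0.73, 0.60]
print(epochs_until_stop(losses, patience=3))
```

A larger `patience` tolerates noisier validation curves at the cost of extra training time; the model checkpoint with the best validation loss is usually the one to keep.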

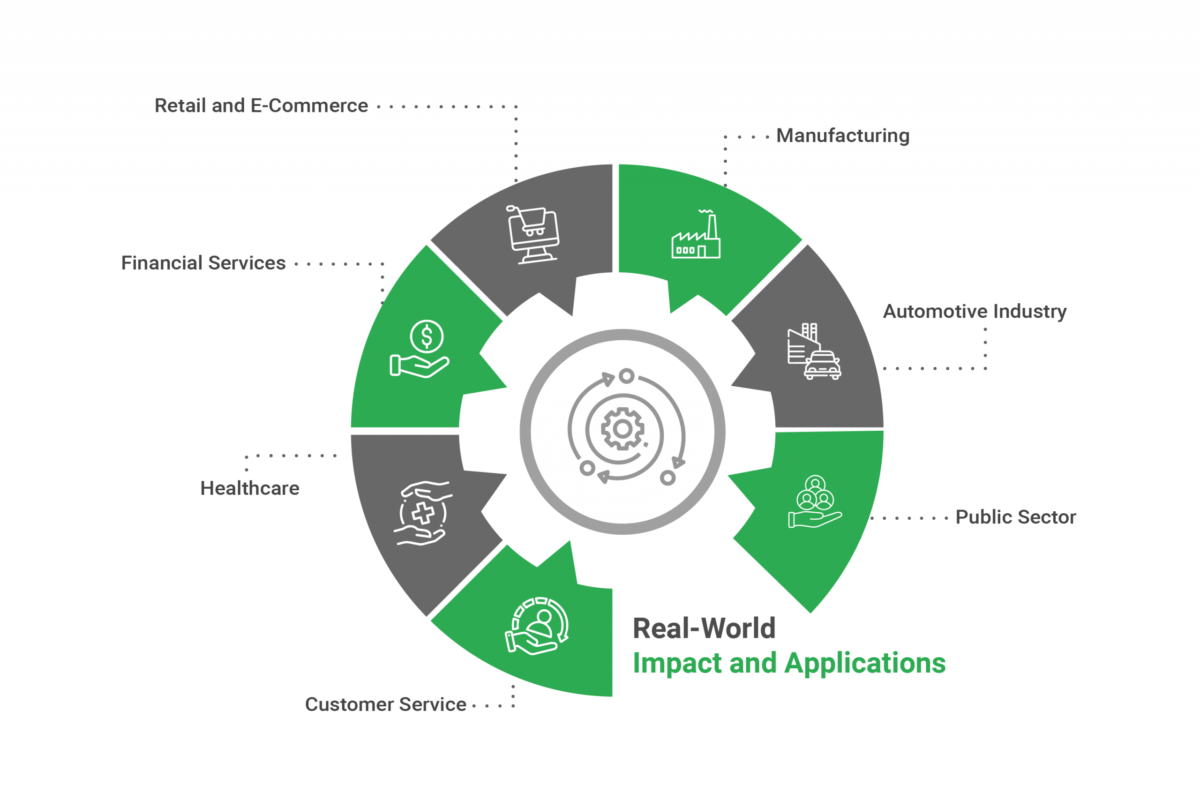

|Real-World Impact and Applications of Fine-Tuned AI Models

The practical implications of fine-tuned AI models are extensive and transformative across various sectors. By refining these models to align with specific operational needs, organizations can leverage AI not just as a tool for automation, but as a strategic asset for enhancing decision-making, improving customer relationships, and driving innovation. Here are some key areas where fine-tuned AI models are making a significant impact:

Customer service:

- Personalized interactions: AI can analyze customer data and previous interactions to tailor responses and recommendations, enhancing customer satisfaction.

- Automated support: Fine-tuned models can handle routine inquiries and support tickets, freeing human agents to tackle more complex issues, thus improving operational efficiency.

Healthcare:

- Diagnostic accuracy: AI models fine-tuned on specific medical data can help diagnose diseases more accurately and suggest personalized treatment plans.

- Patient monitoring: AI can continuously analyze patient data in real-time, providing alerts for any abnormalities that require immediate attention, thus preventing complications.

Financial services:

- Fraud detection: Models trained on transaction data can learn to spot patterns indicative of fraudulent activity, thereby enhancing the security of financial transactions.

- Credit scoring: AI can assess credit risk more accurately by considering a wider range of factors, leading to more informed lending decisions.

Retail and e-commerce:

- Demand forecasting: Fine-tuned AI models can predict future product demands based on historical sales data and market trends, optimizing inventory management.

- Customer segmentation: AI can segment customers based on purchasing behavior and preferences, enabling more targeted marketing strategies.

Manufacturing:

- Predictive maintenance: AI models can predict equipment failures before they occur, minimizing downtime and maintenance costs.

- Quality control: Machine learning models can identify manufacturing defects with high precision, ensuring product quality.

Automotive industry:

- Autonomous driving: Fine-tuning AI models on specific driving scenarios and conditions can enhance the safety and reliability of autonomous vehicles.

- Supply chain optimization: AI can optimize logistics and supply chain operations by predicting delays and calculating optimal routes.

Public sector:

- Resource allocation: Governments can use AI to predict public service demands and optimize the allocation of resources accordingly.

- Public safety: Fine-tuned models can assist in crime prediction and prevention by analyzing patterns from past incidents.

As we look to the future, the integration of fine-tuned AI models into business strategies not only promises substantial operational benefits but also poses a responsibility to deploy these powerful tools thoughtfully and ethically. For leaders in technology and business, the journey of implementing and refining AI is continuous, driven by the ever-evolving landscape of data, technology, and societal needs.