LangSmith – Navigating the Road to Production for LLM apps

As we continue to delve into the boundless potential of Large Language Models (LLMs), a pivotal question looms ever larger on the horizon:

- How can we effectively harness this power to move LLM applications into production?

- With each advancement in language processing, what is the best approach to applying LLMs?

- Are we treading the right path to unleash their full capabilities?

- How can we evaluate our solutions?

These contemplations reflect the dynamic landscape of LLM technology, urging us to seek the most fitting solutions as we explore uncharted territory in the realm of language-driven applications. In this pursuit, the quest for an ideal strategy becomes the linchpin of innovation and advancement, guiding us toward a future where LLMs elevate human-machine interaction to unparalleled heights.

Building production-grade LLM applications demands a platform that provides robust debugging, seamless integration, and reliable monitoring. LangSmith, a platform developed by LangChain, is designed to meet these needs. In this blog series, we’ll delve into the capabilities of LangSmith, exploring how it enables developers to create, debug, test, evaluate, and monitor LLM applications efficiently.

A Comprehensive Platform for LLM Applications

LangSmith is a platform that helps developers harness the full potential of Large Language Models. By offering a comprehensive set of tools, it simplifies the process of building, deploying, and managing LLM applications across various frameworks.

Unraveling the Debugging Abilities

Debugging is a vital aspect of any software development process, and LLM applications are no exception. LangSmith’s intuitive debugging features enable developers to identify and resolve issues swiftly, significantly reducing development cycles and optimizing application performance.

Testing with Confidence

Thorough testing is paramount when it comes to LLM applications, given their intricate nature. LangSmith’s powerful testing suite equips developers with the means to execute comprehensive tests, ensuring the application’s reliability and robustness.

Precise Evaluation for Enhanced Performance

Accurate evaluation is crucial to understanding the strengths and weaknesses of an LLM application. LangSmith provides evaluation tools that help developers refine their applications, resulting in better-performing results.

Seamless Integration with LangChain

LangSmith’s seamless integration with LangChain, the premier open-source framework for building with LLMs, unlocks a world of possibilities for developers. The synergy between these two technologies enhances the overall development experience and unleashes the full potential of LLM applications.

Real-time Monitoring for Uninterrupted Performance

Monitoring LLM applications in real-time is essential for maintaining their performance and reliability. With LangSmith’s monitoring capabilities, developers can proactively detect anomalies and take corrective actions, ensuring a smooth and uninterrupted user experience.

NOTE: LangSmith is currently in closed beta, and the team is slowly rolling out access to more users. You can join the waitlist, and they will get back to you once they send out more invites.

With all these benefits at hand, let’s look at how this can be included in your application.

Once you have access, you can add a new project in the LangSmith application and use its name in the commands below to set up tracing for your application environment.

Once the environment is set up with these commands, you are ready to start visualizing and debugging your chains and prompts.

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_ENDPOINT=https://api.smith.langchain.com

export LANGCHAIN_API_KEY=<your-api-key>

export LANGCHAIN_PROJECT=<your-project> # if not specified, defaults to "default"
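If you would rather apply the same settings from Python (for example in a notebook) than from the shell, a minimal sketch could look like the one below. The helper name `configure_langsmith_tracing` is hypothetical and not part of LangChain or LangSmith; it only sets the four variables shown above for the current process, on the assumption that they are read when tracing starts.

```python
import os


def configure_langsmith_tracing(api_key, project="default",
                                endpoint="https://api.smith.langchain.com"):
    """Set the tracing variables from the shell example for this process.

    Hypothetical helper for illustration: it writes to os.environ only
    and does not contact LangSmith.
    """
    if not api_key:
        raise ValueError("an API key is required")
    settings = {
        "LANGCHAIN_TRACING_V2": "true",
        "LANGCHAIN_ENDPOINT": endpoint,
        "LANGCHAIN_API_KEY": api_key,
        "LANGCHAIN_PROJECT": project,
    }
    os.environ.update(settings)
    return settings
```

Calling this before any chains are built keeps the configuration in one place; the API key itself is best loaded from a secret store rather than hard-coded.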

You are all set!

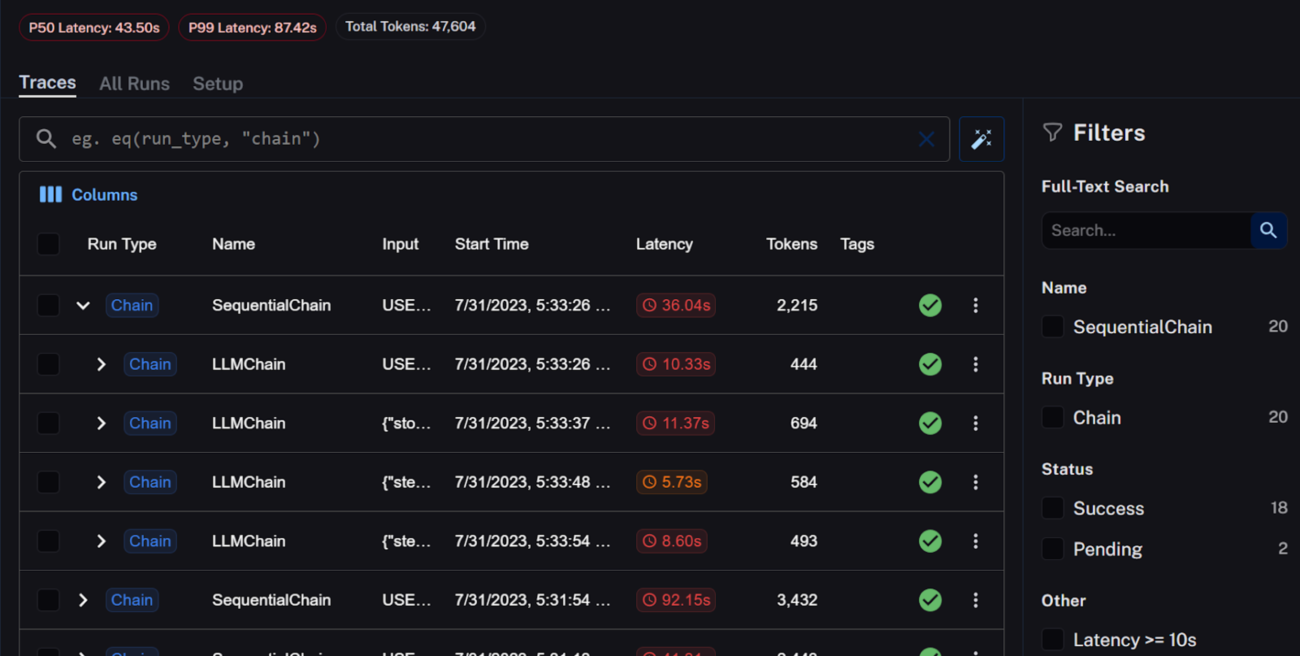

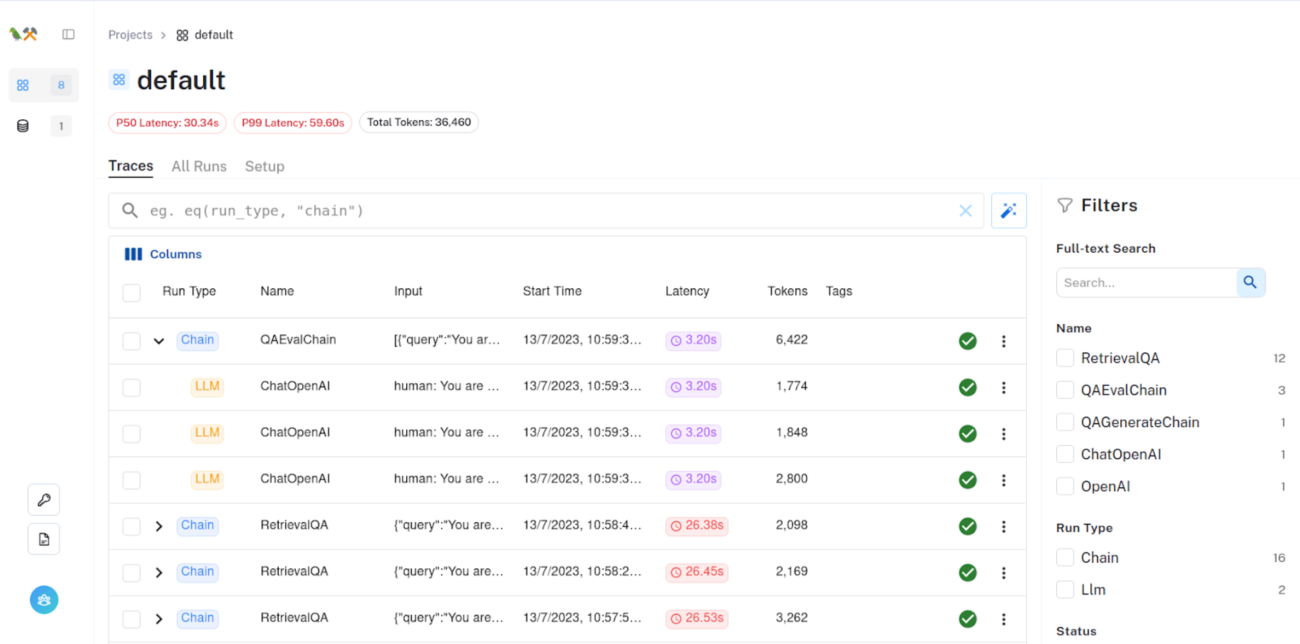

You can go into the application and see the details of the run. Below is the snapshot showing the different chains in action:

With no extra code, you can view all your LangChain integrations. You can see the call details for any type of chain or agent you might be using, including token consumption and latency.

To take it further for testing and evaluation, you have an option to include the runs in a consolidated dataset so you can run the eval chain on that.

You can select a specific node and view details of its input, output, and other related metadata. You also have the option to share the runs using a shareable URL.

This gives you complete visibility of your Language Model execution. You also have a light mode.

Once you have created a dataset, you can provide expected values, compare them against the model's responses, and get an evaluation score. We will look at how to use LangSmith for evaluation in the next blog.
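To make the idea of comparing expected values against model responses concrete, here is a small, self-contained sketch of an exact-match score. It is not LangSmith's evaluation API; the `Example` class, `normalize`, `exact_match_score`, and the canned answers standing in for a real chain are all hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Example:
    """One dataset row: an input and the answer we expect for it."""
    question: str
    expected: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def exact_match_score(examples: Iterable[Example],
                      predict: Callable[[str], str]) -> float:
    """Return the fraction of examples whose prediction matches the expected value."""
    rows = list(examples)
    if not rows:
        raise ValueError("dataset is empty")
    hits = sum(
        normalize(predict(row.question)) == normalize(row.expected)
        for row in rows
    )
    return hits / len(rows)


# Stand-in for a real chain: canned answers keyed by question.
canned = {"Capital of France?": "paris", "2 + 2?": "5"}
dataset = [Example("Capital of France?", "Paris"), Example("2 + 2?", "4")]
score = exact_match_score(dataset, lambda q: canned.get(q, ""))
print(f"exact-match score: {score:.2f}")
```

Here one of the two answers matches after normalization, so the score is 0.5. Exact match is only the simplest possible criterion; free-form answers usually need a more forgiving comparison.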

Conclusion

With its comprehensive debugging, testing, evaluating, and monitoring capabilities, LangSmith represents a groundbreaking platform for developing production-grade LLM applications. Seamlessly integrated with LangChain, LangSmith empowers developers to embark on a journey of creativity and efficiency as they build intelligent agents and chains that push the boundaries of what LLM applications can achieve. As the future unfolds, LangSmith remains at the forefront of advancing LLM technology, driving innovation, and transforming how we interact with language-driven applications. There is a lot of action in store for sure!