LangChain – Unleashing the full potential of LLMs

The entire world is talking about ChatGPT and how Large Language Models(LLMs) have become so powerful and has been performing beyond expectations, giving human-like conversations. This is just the beginning of how this can be applied to every enterprise and every domain!

The most important question that remains is how to apply this power to domain-specific data and scenario-specific response behavior suitable to the needs of the enterprise.

LangChain provides a structured and effective answer to this problem at hand! LangChain is the technology that can help realize the immense potential of the LLMs to build astounding applications by providing a layer of abstraction around the LLMs and making the use of LLMs easy and effective.

In this first blog on this topic, I will talk about what can be done with LangChain and what are the capabilities it provides. In future blogs, we will look at some specific use cases and applications of the different LangChain modules.

LangChain is a great framework that can be used for developing applications powered by LLMs.

When you intend to enhance your application using LLMs, it will be required to interact with your data which could comprise of the following:

- Your knowledge artifacts sitting in some file folder or cloud repositories

- Your data sitting in your database

- Live data from the internet

- External systems are exposed through APIs and much more.

LangChain provides the capability to be able to interact with and query the data from different sources and use it effectively with LLMs. There are different modules in LangChain that provide different capabilities and are applicable to different use cases. We will explore each one to understand its purpose.

|Models

Langchain can work with different models and provides a single standardized interface to use different LLM providers like OpenAI, Cohere, Hugging Face, etc. You can also create a custom wrapper if you want to use an LLM of your own which is not supported by default. This provides additional capabilities to be able to cache your LLM calls,

The 3 types of models supported and used:

- LLMs – Large Language Models (LLMs) take a text string as input, and return a text string as output and can be used for any text generation requirements like summarization, classification, etc..

- Chat Models – Chat Models work on top of an LLM, but their APIs are more structured and they are specifically trained for Conversational behavior taking a list of Chat Messages as input, and returning a Chat Message.

- Text Embedding Models – These models take text as input and convert into text embeddings returning a list of floats. You can use a variety of models for generating the text embeddings ranging from OpenAI,Hugging Face Hub or a self hosted HuggingFace model on the cloud of your choice.

Using the different models as necessary for your application requirement, you can use Langchain with the required model and make use of its capabilities. Read more.

|Prompts

Prompt Engineering is the new programming!

Another important aspect of using LLMs is providing and building the right prompts to satisfy your requirements. You may be using the input and building an appropriate prompt based on what you want the LLM to perform for you to provide the right set of instructions and also be able to maintain and manage the context.

When using prompts in your application, you can make use of prompt templates to give it a proper structure and define and use different custom templates as per your requirements.

The prompts will mainly include:

- Instructions to the language model,

- A set of a few shot examples to help the language model generate a better response,

- A question to the language model.

You can also define Example selectors if you have a large number of examples, and add some logic to select which ones to include in the prompt. The ExampleSelector is the class that can be used for this purpose.

This provides a structured approach to providing different prompts and few-shot examples to your LLMs.

|Indexes

The next important module adds extra power to the LLMs by providing the capability to work with documents and different types of sources in the most efficient manner.

The capability of Question answering over documents which allows you to use your domain-specific documents. The large knowledge base your enterprise holds but is not really accessible or useful in any way, can be now put to use by connecting this to LLMs using this capability.

The following steps are used to be able to perform Question answering over documents :

- Load the data using Document Loaders. Langchain supports a huge list of document loaders as listed here. From PDFs to Google Drive to S3 and blob storage, it has a vast coverage from where you can load the data and enable question answering on them.

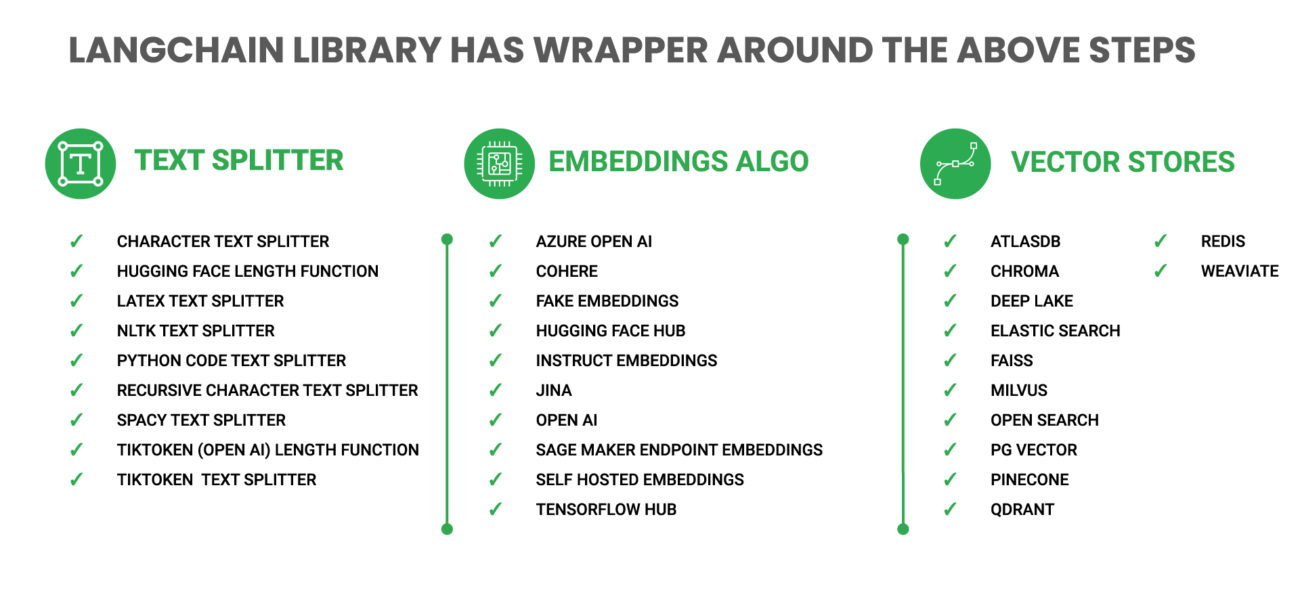

- Optimize the data for effective token usage by splitting it into smaller useful and semantically related chunks using Text Splitter. (Find the complete list here.)

- The next step is to generate the embeddings using the suitable embedding model and be able to store the documents and associated embeddings using a vector store which provides fast ways to look up relevant Documents using embeddings. Langchain supports the popular vector stores as listed here.

- Create a Retriever from the index to fetch the relevant documents needed to get the answer to the question. There are retrievers supported corresponding to the Vectorstores as listed here. You already see the ChatGPT Plugin Retriever while many of us are still on the waitlist to use the ChatGPT plugins.

- Create a question answering Chain that takes user input and passes through multiple components together to create a single, coherent application giving the final response.

With the above steps in place, You are ready to

- Ask your questions and get your answers!

The Langchain Library provides an overall wrapper for the different operations to interact with different LLMs to build enterprise-grade applications. The library facilitates the three main steps needed to use any LLM effectively and efficiently:

- TextSplitter

- Embeddings

- Vector Stores

|Memory

The concept of Memory as the name suggests is useful to maintain the context by keeping track of previous interactions and enabling us to use them as needed for future interactions. Here again, LangChain supports an impressive list of memory types that can be applied to different use cases be it a Chat application, Agents, or memory backed by databases along with capabilities like multi-input, and auto-incremental summarization of past interactions.

|Chains

Chains are a sequence of predetermined steps, so they give a good starting point with greater control and also with the understanding of what is happening better. The use of chains enables us to merge several components into one cohesive application. For instance, we can create a chain that acquires user input, uses a PromptTemplate to format it, and then delivers the formatted response to an LLM. We can form even more intricate chains by combining multiple chains, or by integrating chains with other components. In my next blog, I have covered the use of SQLDatabaseChain for getting data from SQL DB using natural language queries, which also makes use of PromptTemplates.

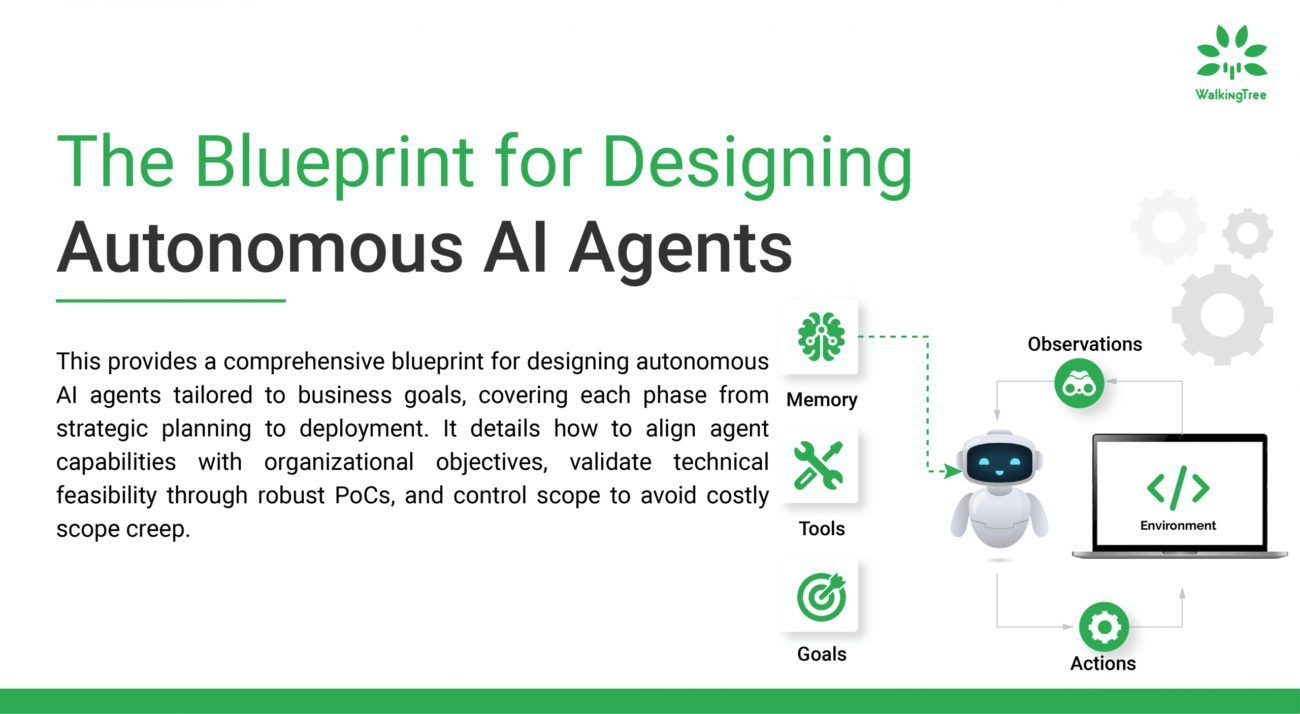

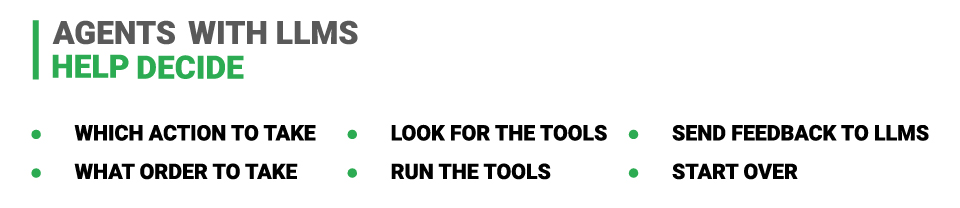

|Agents

Agents, another powerful capability provided by LangChain which has access to a suite of tools and can be used to decide which tool to use depending on the user input. There is a whole list of agents and tools which can be used based on your requirements along with the capability to create custom agents. In the background, the agents are specialized prompts to direct the LLM to behave in a specific manner. The most commonly used one being the agent optimized for conversation, using ChatModels. Other agents are often optimized to be able to use tools to decide the next best action and response.

|Evaluation

|Evaluation

Another important aspect that LangChain is venturing as part of its ecosystem is Evaluation. In any kind of AI problem, it is essential to have a mechanism to measure and gauge how the model has been performing so as to make any improvements if needed.

The same applied to a Language model through the process of evaluation is not straightforward due to the variety of problems at hand. Langchain provides a starting point for anyone wanting to set up an evaluation system for their use case.

There are certain example datasets (contributed by LangChain and the community) that can be used for the evaluation. This can be a reference to start with your own.

Once you have the data, you can perform the prediction and then follow the proposed 2 step evaluation mechanism.

Step 1– Manual verification by looking at the quality of responses to get an idea of how the model is performing.

Step 2 – Evaluation by comparison with the expected responses as provided in the datasets.

Langchain provides some examples of common use cases with some chains and prompts to achieve this.

With the evaluation process in place, you have all the layers and processes needed to build your application using LLMs.

|Conclusion

With such a wide variety of powerful options provided by LangChain, selecting the right tools at the different steps of your application will help you achieve the best results with the LLMs backed by your data. You can finally make good use of your history as well as live data as a real knowledge enabler for your enterprise.

Do connect with us to know more about our offerings and how we can help you on this journey.

P.S.: Check out this guide if you’re looking for a non-technical background of LangChain!