The New Era of AI-Driven Workflows: Confidence-Aware Automation

AI-based systems are changing business operations, from automating processes to enhancing customer interactions. With the development of large language models (LLMs) and vision-language models (VLMs), organizations can now simplify decision-making, improve processes, and boost efficiency.

But one core challenge persists: AI systems don’t always know when they are uncertain.

What Happens When AI Is Overconfident?

Consider these typical failure scenarios:

- A contract analysis tool misinterprets a legal clause but presents its analysis as 100% accurate.

- A financial assistant misclassifies a transaction, causing accounting errors.

- A customer service chatbot gives incorrect answers with full confidence.

These errors lower trust, generate compliance risks, and decrease the efficiency of AI-powered automation.

Bridging the Gap: Confidence Estimation in AI

Recent papers, such as Uncertainty in Action: Confidence Elicitation in Embodied Agents by Yu et al. (2025), highlight a critical solution: confidence estimation in AI-driven decision-making.

By allowing AI systems to judge and communicate uncertainty, businesses are able to:

- Increase accuracy and reliability of decisions

- Reduce operating costs through smarter automation

- Lower compliance risks by sending low-confidence cases for human review

Beyond confidence estimation, strategic caching can greatly streamline AI workflows. Storing and reusing high-confidence results spares companies repeated AI computations without any loss in quality.

Let’s explore how confidence estimation and caching strategies complement each other to deliver more intelligent, efficient, and trustworthy AI workflows.

| The Challenge: Overconfidence & Inefficiency in Enterprise AI

AI systems usually produce answers without an intrinsic mechanism to determine how confident they are. This may create significant problems if not controlled or monitored properly:

Business Workflow Errors

- A contract analysis AI may misread a clause but give its answer as fact, causing compliance risks.

Inefficient Use of AI

- If AI recomputes the same insights over and over rather than reusing previously validated results, operating costs climb sharply.

No Reusability

- AI insights are frequently not cached or reused effectively, leading to unnecessary processing.

Example: AI in Financial Automation

A financial AI assistant checking invoices might keep re-analyzing similar transactions rather than reusing previously confirmed results. Without caching and confidence estimation, AI models are both unreliable and costly to run.

| Step 1: Confidence Estimation for Smarter AI Decision-Making

For AI to function well in complex workflows, it needs to identify various forms of uncertainty prior to decision-making.

Types of Uncertainty in AI-driven Workflows

1. Pattern Recognition (Inductive Reasoning) – The AI generalizes from past experience.

- Example: “Most previous invoices from this vendor had late fees, so this one probably does too. Confidence: 80%.”

2. Logical Inference (Deductive Reasoning) – The AI uses known rules but does not have full information.

- Example: “This contract clause resembles those in typical NDAs, but I am only 65% confident that it contains a non-compete provision.”

3. Best-Guess Explanations (Abductive Reasoning) – The AI makes an educated guess when the information is incomplete.

- Example: “This customer inquiry appears to be a refund request, but it may also concern pricing. Confidence: 50%.”

Enterprise Use Cases:

- Contract Review AI: Rather than automatically accepting clauses, AI surfaces confidence scores and flags uncertain portions for human review.

- Financial Automation: AI classifies expenses but holds uncertain ones for finance staff to decide.

- Customer Support Chatbots: AI returns confidence-scored responses, minimizing incorrect answers while escalating low-confidence cases.

| Step 2: Refining AI Decisions with Execution Policies

After AI estimates confidence, it can improve its response before making a final decision. Yu et al. (2025) suggest that this process can be enhanced by three main execution policies:

Key Execution Policies for AI Decision-Making

- Action Sampling – AI generates several possible responses and chooses the one with the highest confidence.

- Scenario Reinterpretation – AI re-examines the same input from a different viewpoint to check for accuracy.

- Hypothetical Reasoning – AI explores other possible explanations and modifies confidence levels accordingly.
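As a rough illustration of the first policy, here is a minimal Python sketch of action sampling. It is not the procedure from Yu et al. (2025). The `Candidate` type, the `action_sampling` function, and the `canned_model` stand-in are all hypothetical names introduced for this example, and the confidence values are made up. A real system would replace `canned_model` with calls to an actual model that reports its own confidence.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Candidate:
    """One possible response plus the model's self-reported confidence."""
    answer: str
    confidence: float  # assumed to lie in [0.0, 1.0]


def action_sampling(generate: Callable[[], Candidate], n_samples: int = 3) -> Candidate:
    """Draw several candidate responses and keep the most confident one."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    candidates = [generate() for _ in range(n_samples)]
    return max(candidates, key=lambda c: c.confidence)


def canned_model(candidates: Iterable[Candidate]) -> Callable[[], Candidate]:
    """Hypothetical stand-in for a model call: replays fixed candidates in order."""
    iterator = iter(candidates)
    return lambda: next(iterator)


# Illustrative values only; a real model would produce these.
samples = [
    Candidate("refund request", 0.50),
    Candidate("pricing question", 0.80),
    Candidate("general complaint", 0.65),
]
best = action_sampling(canned_model(samples), n_samples=len(samples))
print(f"{best.answer} ({best.confidence:.0%} confident)")
```

Picking the single highest score is the simplest reading of the policy. It only helps if the model's confidence scores are reasonably well calibrated, so it is worth validating them against human-reviewed cases first.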

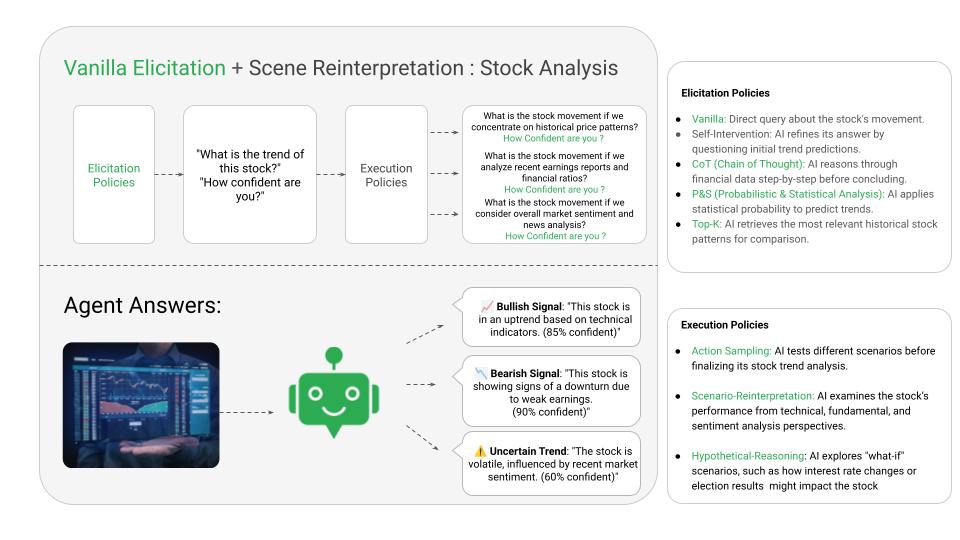

Example: AI-Powered Stock Analysis with Confidence Estimation

WalkingTree’s Stock Analyser helps retail traders analyze and act on market insights with confidence-aware intelligence. Using natural language inputs, the AI generates Python trading strategies, processes real-time and historical stock data, and determines its confidence in trend predictions—before execution.

Elicitation Policies: AI processes natural language trading queries (e.g., “What is the trend of this stock?”), generates corresponding strategy code, fetches historical and real-time stock data, and determines the confidence level of predicted market movements.

Execution Policies:

AI refines its prediction by re-examining the question from alternative views of the data:

- What if we concentrate on historical price patterns?

- What if we analyze recent earnings and news sentiment?

- What if we compare with similar stocks or sector indices?

Agent Responses:

Stock 1: “This stock is in an uptrend based on technical indicators.” (85% confident)

Stock 2: “This stock is showing signs of a downtrend due to weak earnings.” (90% confident)

Stock 3: “The trend is volatile, influenced by recent market sentiment.” (60% confident)

Through the combination of confidence estimation and scenario reinterpretation, traders can better trust AI-generated insights, take action on high-confidence signals, and escalate uncertain situations for manual review or deeper analysis.

| Step 3: Maximizing AI with Caching & Reusing High-Confidence Outputs

While AI can produce meaningful insights, businesses often fail to capture and reuse them. A caching strategy lets businesses improve AI efficiency while cutting unnecessary compute costs.

How Caching Revolutionizes Enterprise AI

Storing High-Confidence Results for Reuse

- Summaries and classifications produced with a confidence level above 90% are cached for future use.

Retrieving Instead of Recomputing

- Rather than querying an AI model repeatedly for the same data, the system serves cached results immediately where possible.

Minimizing Duplicate AI Queries

- AI-based search utilities retrieve pre-stored answers to common questions rather than redoing them every time.
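The three ideas above can be sketched as a small confidence-gated cache. This is a minimal sketch under stated assumptions, not a production design. The `ConfidenceCache` class, its `ask` method, and the `fake_model` stub are hypothetical, and the refund answer is placeholder text. The 0.90 threshold mirrors the "over 90%" figure mentioned above. The cache key is just the lowercased, whitespace-normalized query, which is an assumption; real systems often need semantic matching and an expiry policy.

```python
from typing import Callable, Dict, Tuple

Answer = Tuple[str, float]  # (text, confidence in [0.0, 1.0])


class ConfidenceCache:
    """Caches model answers only when their confidence exceeds a threshold."""

    def __init__(self, model: Callable[[str], Answer], threshold: float = 0.90):
        self._model = model
        self._threshold = threshold
        self._store: Dict[str, Answer] = {}
        self.model_calls = 0  # counts how often the model is actually invoked

    @staticmethod
    def _key(query: str) -> str:
        # Assumption: queries differing only in case or spacing are "the same".
        return " ".join(query.lower().split())

    def ask(self, query: str) -> Answer:
        key = self._key(query)
        if key in self._store:
            return self._store[key]  # retrieve instead of recomputing
        self.model_calls += 1
        answer = self._model(query)
        if answer[1] > self._threshold:
            self._store[key] = answer  # store only high-confidence results
        return answer


def fake_model(query: str) -> Answer:
    """Hypothetical stand-in for a real model call; the values are placeholders."""
    if "refund" in query.lower():
        return ("Placeholder refund-policy answer", 0.95)
    return ("Not sure", 0.50)


cache = ConfidenceCache(fake_model)
cache.ask("What is the refund policy?")
cache.ask("what is the   REFUND policy?")  # served from the cache
print("model calls so far:", cache.model_calls)
```

Low-confidence answers are deliberately not stored, so an uncertain answer is recomputed, or sent for review, the next time the question comes up.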

Enterprise Applications of AI Caching

- HR & Compliance: AI auditing employee policies stores validated summaries to avoid duplicate queries.

- E-Commerce Chatbots: The chatbot stores high-confidence answers to refund policy inquiries.

- Fraud Detection: Caching previously detected fraud patterns makes it unnecessary to reanalyze past transactions from accounts that were already flagged.

By implementing caching, organizations can sharply reduce AI processing costs while keeping automation fast and reliable.

| Beyond Caching: Additional Tactics for Optimizing AI

To improve AI efficiency further, organizations can apply several additional strategies:

1. Intelligent AI Routing: Using the Right Model for the Right Task

- Simple queries → Cached answers or rule-based models

- Moderate complexity → Fine-tuned, smaller AI models

- Complex reasoning → Full-scale LLMs (GPT-4, Claude, Gemini, etc.)
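The three tiers above could be wired together roughly as follows. This is only a sketch. The `Tier` enum, the `route` function, the cutoff values, and especially the `estimate_complexity` heuristic (query length plus a few reasoning keywords) are assumptions made for illustration. In practice the complexity signal might come from a trained classifier or from the confidence of a cheaper model.

```python
from enum import Enum
from typing import Set


class Tier(Enum):
    CACHE_OR_RULES = "cached answer or rule-based model"
    SMALL_MODEL = "fine-tuned, smaller model"
    FULL_LLM = "full-scale LLM"


# Assumption: these words hint that the query needs multi-step reasoning.
REASONING_HINTS = {"why", "compare", "explain", "analyze"}


def estimate_complexity(query: str) -> float:
    """Toy heuristic (an assumption, not a real metric): returns a score in [0, 1]."""
    words = query.lower().split()
    score = min(len(words) / 40.0, 1.0)
    if any(word in REASONING_HINTS for word in words):
        score = min(score + 0.5, 1.0)
    return score


def route(query: str, cached_queries: Set[str],
          simple_max: float = 0.2, moderate_max: float = 0.6) -> Tier:
    """Pick the cheapest tier that is likely to handle the query."""
    normalized = " ".join(query.lower().split())
    if normalized in cached_queries:
        return Tier.CACHE_OR_RULES
    complexity = estimate_complexity(query)
    if complexity <= simple_max:
        return Tier.CACHE_OR_RULES
    if complexity <= moderate_max:
        return Tier.SMALL_MODEL
    return Tier.FULL_LLM


known = {"refund policy"}
for q in ["Refund policy",
          "categorize this invoice from the vendor as travel or office expense please",
          "compare these two contracts and explain why the indemnity terms conflict"]:
    print(f"{route(q, known).value}: {q}")
```

Checking the cache first keeps the cheapest path cheapest. The cutoffs are tunable and should be set from observed cost and error rates rather than taken from this example.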

2. Confidence Thresholding: Balancing AI Automation & Human Oversight

- Auto-approve high-confidence output (>90%)

- Flag medium-confidence output (60-90%) for review

- Reject or escalate low-confidence cases (<60%)
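A minimal sketch of these three bands in Python follows. The `Decision` enum and the `decide` function are hypothetical names. The cutoffs are taken directly from the bullets above, and the boundary handling (exactly 90% goes to review, exactly 60% goes to review) is one reasonable reading of them rather than a fixed rule.

```python
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto-approve"
    REVIEW = "flag for human review"
    ESCALATE = "reject or escalate"


def decide(confidence: float,
           approve_above: float = 0.90,
           review_from: float = 0.60) -> Decision:
    """Map a confidence score in [0.0, 1.0] to one of the three bands."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if confidence > approve_above:
        return Decision.AUTO_APPROVE
    if confidence >= review_from:
        return Decision.REVIEW
    return Decision.ESCALATE


for score in (0.95, 0.85, 0.50):
    print(f"{score:.0%} -> {decide(score).value}")
```

The thresholds are parameters on purpose: a compliance workflow may want a stricter auto-approve cutoff than a customer-support chatbot.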

3. Context-Aware Memory: Making AI Smarter Over Time

- Save past conversations so that later exchanges feel more natural.

- Utilize vector databases or similarity-search libraries (e.g., Supabase, Pinecone, FAISS) for improved recall

- Use Retrieval-Augmented Generation (RAG) to retrieve relevant knowledge prior to responding.

4. Optimized Prompt Engineering: Cutting AI Costs Without Compromising Accuracy

- Trim unnecessary context from AI prompts to cut down on computational burden.

- Employ pre-constructed templates for common questions.

- Use few-shot prompting and context-based prompting sparingly.

5. Human-AI Collaboration: Balancing Automation with Expert Supervision

- AI can provide initial analysis, and humans can examine flagged cases.

- Employ feedback loops to improve AI models over time.

- Make AI decisions explainable and auditable to ensure trust.

6. Federated Learning: AI Training Without Sacrificing Privacy

- Healthcare AI trains on patient data held at various hospitals without that data leaving each site, which supports compliance with HIPAA guidelines.

- Financial AI learns from data held at various banks without revealing customer information.

7. Parallel AI Processing: Speeding Up Enterprise AI Workflows

- Document AI can review multiple contracts at once, substantially reducing total review time.

- Invoice AI can categorize thousands of transactions simultaneously instead of one by one.
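For the invoice case, a minimal sketch using Python's standard `concurrent.futures` module might look like this. The `categorize` function is a hypothetical stand-in for a real model call, with a short sleep to simulate network latency, and the keyword rules are invented for the example. Threads suit this pattern because calls to a hosted model mostly wait on the network rather than the CPU.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Assumption: toy keyword rules standing in for a real classification model.
RULES = {"flight": "travel", "hotel": "travel", "paper": "office"}


def categorize(transaction: str) -> str:
    """Hypothetical stand-in for one model call; sleeps to mimic latency."""
    time.sleep(0.01)
    text = transaction.lower()
    for keyword, category in RULES.items():
        if keyword in text:
            return category
    return "uncategorized"


def categorize_all(transactions: List[str], max_workers: int = 8) -> List[str]:
    """Categorize transactions concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(categorize, transactions))


transactions = ["Flight to Berlin", "Printer paper", "Hotel stay", "Team lunch"]
for tx, category in zip(transactions, categorize_all(transactions)):
    print(f"{tx}: {category}")
```

The achievable speedup depends on the provider's rate limits and on how many requests can run at once, so `max_workers` should be tuned rather than assumed.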

| The Future of Confidence-Aware, Cost-Effective AI

As AI finds its way into more enterprise workflows, confidence estimation and caching, alongside other optimization techniques, will be important for getting the most out of autonomous agents. By enabling AI to evaluate its own uncertainty, companies can reduce mistakes, improve collaboration between AI and human teams, and make fuller use of AI-generated insights.

Key Takeaways for Businesses

- Integrate Confidence Estimation: Make AI systems able to identify and convey uncertainty.

- Adopt Execution Policies: Permit AI to iterate on responses before making a final decision.

- Implement Caching Strategies: Leverage high-confidence AI outputs to eliminate redundant processing and save costs.

By adopting these strategies, companies can transcend the limitations of conventional AI automation and build smart, cost-effective systems that bring tangible value.

| Final Thoughts

AI should not only be intelligent—it must also know its own limitations, be cost-effective, and be optimized for real-world workflows. Confidence-aware AI, combined with caching and intelligent execution policies, raises the bar for enterprise efficiency.

Would confidence-aware AI and caching strategies benefit your organization? What have been your biggest challenges in AI-powered automation? Let’s talk about how these technologies can shape the future of enterprise AI.
