
Developing Advanced Drone Pilot Skills Through Unreal Engine-Based Simulation

The drone industry is growing quickly, and pilots are expected to fly increasingly complex missions with precision and adaptability. Advanced drone pilot training is essential for scenarios such as inspecting towering wind turbines or oil rigs, coordinating search-and-rescue operations during forest fires or natural disasters, and executing time-critical deliveries in busy urban areas. These are the challenges facing today’s drone pilots, and traditional training methods are struggling to keep pace. That is where simulation built on Unreal Engine can help.

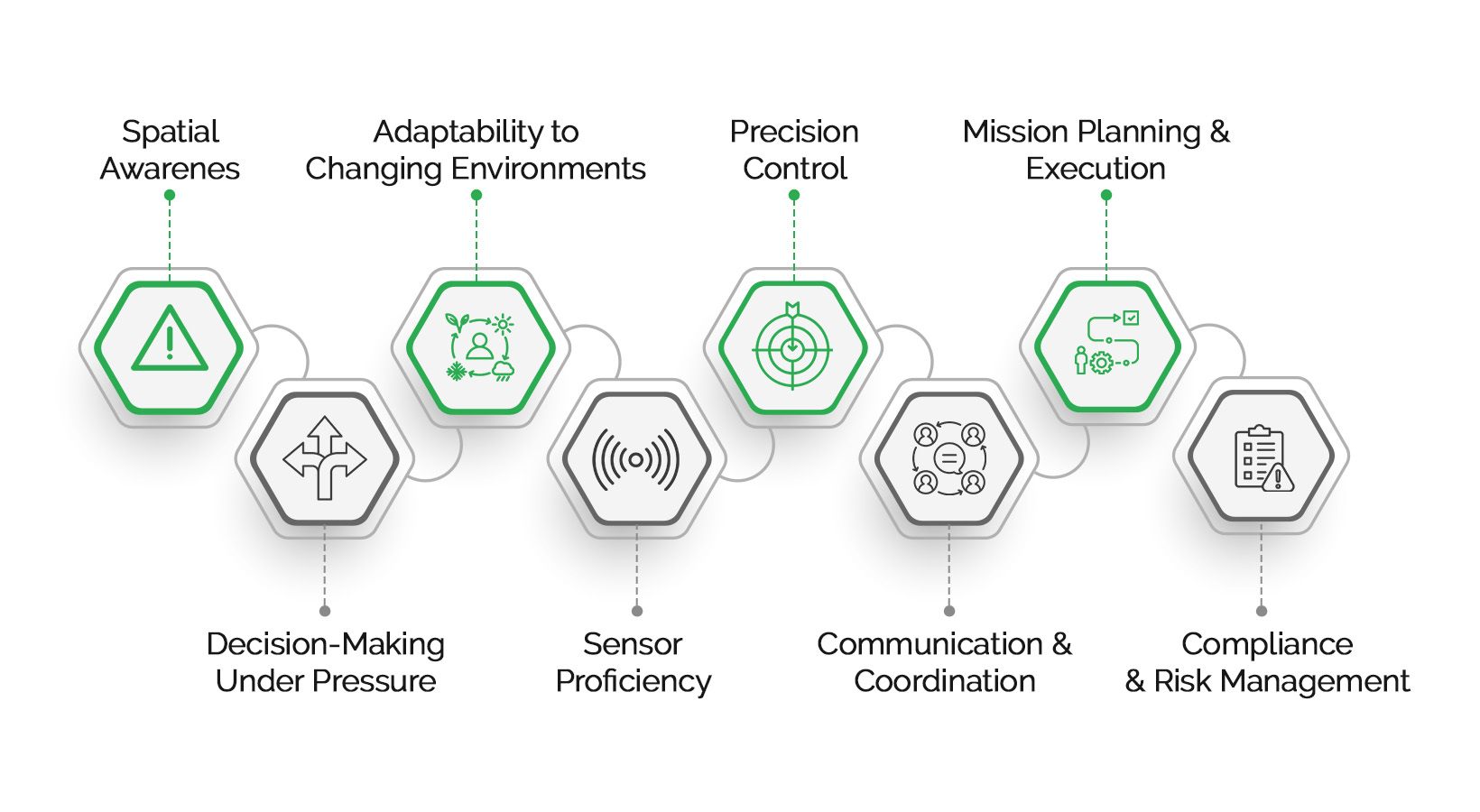

Pilots must possess a suite of advanced skills to operate effectively in diverse, high-stakes scenarios, ranging from industrial inspections to emergency rescue operations. These skills are critical for maximizing safety, meeting compliance requirements, and driving operational success.

This article explores eight key advanced skills that drone pilots can develop through simulation, their importance, and how Unreal Engine plays a pivotal role in fostering these capabilities.

Advanced Skills (AS) for Drone Pilots

AS1. Spatial Awareness

Drone pilots need to understand their surroundings in three dimensions to navigate effectively, avoid collisions, and operate in tight or complex environments such as urban areas, industrial zones, or natural terrains. Without strong spatial awareness, pilots are prone to accidents, potentially leading to costly equipment damage, operational delays, or regulatory violations.

By minimizing accidents, a business can avoid costly equipment repairs and prevent reputational damage. Fewer incidents also make it easier to stay compliant with airspace regulations, avoiding fines or legal issues.

Unreal Engine’s high-fidelity rendering and real-time physics replicate real-world terrains and obstacles with great accuracy. Features like dynamic lighting and shadowing enhance depth perception, allowing trainees to develop strong spatial awareness in a variety of scenarios. For example, in an urban drone operation simulation, Unreal Engine (UE) replicates narrow alleys, tall buildings casting dynamic shadows, and reflective surfaces like glass facades. This helps pilots practice navigating complex environments where spatial awareness is critical to avoid collisions.

AS2. Decision-Making Under Pressure

In critical missions, pilots must make split-second decisions, such as responding to equipment malfunctions or avoiding mid-air collisions. Poor decision-making can lead to mission failure, safety risks, or loss of valuable data.

Better decision-making reduces the risk of operational disruptions, saving time and money while safeguarding human lives in high-stakes scenarios like search and rescue.

Unreal Engine’s AI-driven adaptive scenarios simulate real-time emergencies, such as sudden weather changes or sensor failures. These dynamic conditions force pilots to think quickly and test their responses under pressure. For example, in a simulated search-and-rescue mission, a bird strike is triggered mid-flight through UE’s AI Perception system. Pilots must decide whether to reroute, stabilize the drone, or land immediately, all in real time.

AS3. Adaptability to Changing Environments

Real-world conditions such as weather, air traffic, or operational priorities can change rapidly. Pilots need to adapt to these changes to complete missions safely and effectively.

Adaptable pilots are better equipped to handle unpredictable challenges, reducing downtime and improving operational efficiency.

Unreal Engine’s dynamic environment simulation generates changing conditions, such as evolving weather patterns or unexpected obstacles, teaching pilots to adapt seamlessly during missions. For example – during a simulated inspection of wind turbines, a sudden gust modeled by UE’s wind field system forces pilots to adapt their approach. Visibility is further impacted by volumetric fog, requiring adjustments in navigation strategy.

Tools like Sequencer allow precise control over environmental transitions, creating more dynamic and challenging training scenarios. For example, to train for a sudden loss of visibility during an aerial inspection, a developer can use Sequencer to introduce a dense fog that rolls in mid-flight. This forces the pilot to adapt to rapidly changing visibility conditions, testing their ability to:

- Maintain situational awareness: Can they keep track of the drone’s position and orientation when visual cues are obscured?

- Interpret sensor data: Do they rely more heavily on instruments and sensor data (like lidar or radar) to navigate safely?

- Make critical decisions: Do they choose to abort the mission, proceed cautiously, or employ alternative navigation strategies?
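At its core, a timed fog transition like the one described above is a density value interpolated between keyframes on a timeline. The following is a minimal, engine-agnostic Python sketch of that idea, not Sequencer’s actual API; the keyframe times, the density values, and the name `fog_density_at` are all illustrative assumptions.

```python
from bisect import bisect_right

# Hypothetical keyframes: (seconds into the flight, fog density from 0.0 to 1.0).
FOG_KEYFRAMES = [(0.0, 0.02), (60.0, 0.02), (90.0, 0.60), (150.0, 0.60), (180.0, 0.10)]


def fog_density_at(t, keyframes=FOG_KEYFRAMES):
    """Linearly interpolate fog density at time t, clamping outside the keyframe range."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)  # index of the first keyframe strictly after t
    (t0, d0), (t1, d1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)
    return d0 + alpha * (d1 - d0)
```

With these assumed keyframes, the fog stays thin for the first minute, thickens over the next 30 seconds, holds, and then partially clears. A scenario designer could tune the ramp length to control how much warning the pilot gets.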

AS4. Sensor Proficiency

Modern drones rely heavily on advanced sensors like lidar, GPS, and thermal cameras. Pilots must know how to interpret sensor data and act on it efficiently. Accurate sensor use leads to higher-quality outputs, such as detailed inspection reports or precise search-and-rescue efforts, directly impacting mission success and client satisfaction.

Unreal Engine’s sensor simulation capabilities replicate real-world sensor behaviors, enabling pilots to practice interpreting data in realistic conditions. For example – a thermal camera simulation overlays heat signatures on a dark environment, helping pilots practice identifying targets during a simulated wildfire rescue mission.

Furthermore, Unreal Engine allows developers to create custom HUD widgets that mimic real-world sensor dashboards. These widgets can display:

- Real-time sensor readouts: This includes numerical data from various sensors like altitude, speed, battery level, GPS coordinates, and obstacle proximity warnings.

- Visual sensor feeds: Widgets can display the feed from virtual cameras, including standard RGB, thermal, and even simulated lidar point clouds.

- Interactive elements: Pilots can interact with some widgets, such as toggling between different camera views or adjusting sensor settings, just like they would with a real drone’s control interface.
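As a rough illustration of the readout logic behind such a dashboard, the sketch below turns a telemetry sample into display lines and appends warnings when thresholds are crossed. It is plain Python rather than UE widget code, and the `Telemetry` fields, the `hud_lines` function, and the threshold defaults are hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Telemetry:
    altitude_m: float
    speed_mps: float
    battery_pct: float
    nearest_obstacle_m: float


def hud_lines(t, low_battery_pct=20.0, proximity_warn_m=5.0):
    """Build the text lines a HUD might show for one telemetry sample."""
    lines = [
        f"ALT {t.altitude_m:.1f} m",
        f"SPD {t.speed_mps:.1f} m/s",
        f"BAT {t.battery_pct:.0f} %",
    ]
    # Warnings are appended only when an assumed threshold is crossed.
    if t.battery_pct <= low_battery_pct:
        lines.append("WARN LOW BATTERY")
    if t.nearest_obstacle_m <= proximity_warn_m:
        lines.append(f"WARN OBSTACLE {t.nearest_obstacle_m:.1f} m")
    return lines
```

In a real project the same thresholds would more likely drive widget colors or audio cues than plain text, but the decision logic would have a similar shape.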

AS5. Precision Control

Certain tasks, such as infrastructure inspections or package deliveries, require pilots to operate drones with pinpoint accuracy. A lack of precision can lead to incomplete tasks, rework, or damaged assets.

Precision reduces operational costs by minimizing errors and ensuring timely task completion, which translates to improved customer satisfaction.

Unreal Engine’s accurate flight physics simulate real-world drone dynamics, allowing pilots to practice fine control in scenarios that demand extreme accuracy. For example, in a simulation for inspecting power lines, UE provides realistic drone responsiveness when pilots practice hovering steadily near a moving cable car. The Chaos physics engine helps the drone’s drift under simulated wind conditions feel authentic.
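To give a feel for why wind makes a steady hover hard, here is a deliberately simplified one-dimensional model: a drone held at a target position by a proportional-derivative (PD) controller while a constant wind force pushes on it. This is a toy sketch, not the Chaos physics engine; the gains, mass, time step, and the name `simulate_hover` are assumptions chosen for illustration.

```python
def simulate_hover(wind_force_n, kp=8.0, kd=4.0, mass_kg=1.5, dt=0.01, steps=2000):
    """Simulate a 1-D position hold at x = 0 under constant wind; return the final offset in metres."""
    x, v = 0.0, 0.0
    for _ in range(steps):
        control = -kp * x - kd * v  # PD controller pushes back toward x = 0
        accel = (wind_force_n + control) / mass_kg
        v += accel * dt  # semi-implicit Euler: update velocity first, then position
        x += v * dt
    return x
```

Because this controller has no integral term, the drone settles at a small offset of roughly `wind_force_n / kp` rather than exactly on target. That residual drift is what a pilot, or a better-tuned controller, has to correct for.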

AS6. Communication and Coordination

Pilots often need to work in teams or coordinate with ground staff, air traffic control, or other stakeholders. By enabling realistic teamwork through synchronized simulations, trainees can practice coordination and communication in shared scenarios like search and rescue missions. This fosters a shared situational awareness, ensuring all participants have a common understanding of the environment and can make effective decisions as a team. Furthermore, the ability to observe different approaches and strategies enhances the learning process, allowing trainees to learn from each other’s successes and challenges.

Improved coordination reduces the risk of miscommunication-related incidents, improving safety and efficiency while maintaining regulatory compliance.

Unreal Engine supports multi-user simulations, enabling pilots to train collaboratively, practice teamwork, and refine communication skills in realistic scenarios. For example – in a multi-operator simulation, one pilot navigates a drone while another monitors sensor data. UE allows real-time interaction between both roles in a shared environment, fostering effective teamwork.

Using UE’s Replication System, developers can ensure that all connected clients (trainees) see a consistent and synchronized version of the simulation. This system:

- Synchronizes drone positions and movements: Each trainee sees the other drones in the simulation moving in real-time, accurately reflecting their positions, orientations, and actions.

- Replicates environmental changes: If one trainee triggers an event (like a weather change or obstacle appearance), it’s replicated for all other trainees, ensuring a shared experience.

- Manages latency: Unreal Engine employs techniques to minimize latency, ensuring that actions and reactions appear smooth and responsive for all participants, even with varying network conditions.
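One widely used way to keep remote drones moving smoothly despite network jitter is snapshot interpolation: each client renders other drones slightly in the past and blends between the two most recent position updates that bracket that time. The sketch below shows the general technique in plain Python. It is not a description of Unreal’s internal implementation, and the function name and data layout are assumptions.

```python
def interpolate_position(snapshots, render_time):
    """Blend between received snapshots.

    snapshots: list of (timestamp, (x, y, z)) with strictly increasing timestamps.
    """
    if not snapshots:
        raise ValueError("no snapshots received yet")
    if render_time <= snapshots[0][0]:
        return snapshots[0][1]
    for (t0, p0), (t1, p1) in zip(snapshots, snapshots[1:]):
        if t0 <= render_time <= t1:
            alpha = (render_time - t0) / (t1 - t0)
            return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))
    # Render time is newer than anything received: hold the last known position.
    return snapshots[-1][1]
```

The trade-off is a small, constant visual delay in exchange for motion that does not stutter when updates arrive unevenly.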

AS7. Mission Planning and Execution

Successful missions require careful planning, from mapping routes to estimating fuel or battery consumption. Pilots must anticipate challenges and execute plans effectively.

Effective mission planning reduces the likelihood of mid-operation failures, saving time and resources.

Unreal Engine’s scenario customization allows pilots to simulate missions from start to finish, including route planning, pre-flight checks, and post-flight analysis. For example – in a long-range survey mission, UE streams a coastal terrain while dynamically adjusting waypoints based on battery consumption and wind resistance, giving pilots hands-on experience in managing mission parameters.

Unreal Engine allows for far more than just static mission planning. It can simulate dynamic factors that force pilots to adapt and make real-time decisions, mirroring the challenges of real-world missions. Here’s how:

- Environmental changes: Simulate unexpected weather changes (like the fog example mentioned earlier), wind gusts, or even temporary loss of GPS signal, forcing pilots to adjust their plans on the fly.

- Equipment limitations: Realistically simulate battery consumption, payload capacity, and flight range, requiring pilots to optimize routes and manage resources effectively.

- Unexpected events: Introduce unexpected obstacles or airspace intrusions, prompting pilots to reassess their plans and make quick decisions to ensure mission success and safety.
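The interaction between wind and battery budget can be made concrete with a small estimate. The sketch below computes the energy needed to fly a sequence of waypoints under a constant wind, then checks it against the battery capacity with a reserve. It is a simplified model (constant cruise power, wind applied only along the direction of travel, crosswind ignored), and every name and number in it is an illustrative assumption rather than part of any UE system.

```python
import math


def route_energy_wh(waypoints, airspeed_mps, wind_mps, cruise_power_w):
    """Estimate the energy in Wh needed to fly the legs between 2-D waypoints."""
    total_wh = 0.0
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        dx, dy = x1 - x0, y1 - y0
        dist = math.hypot(dx, dy)
        if dist == 0.0:
            continue
        # Wind component along the leg: positive is tailwind, negative is headwind.
        tailwind = (wind_mps[0] * dx + wind_mps[1] * dy) / dist
        ground_speed = airspeed_mps + tailwind
        if ground_speed <= 0.0:
            raise ValueError("headwind exceeds airspeed; leg cannot be flown")
        total_wh += cruise_power_w * (dist / ground_speed) / 3600.0
    return total_wh


def is_feasible(energy_wh, battery_wh, reserve_fraction=0.2):
    """True if the route fits in the battery while keeping the reserve untouched."""
    return energy_wh <= battery_wh * (1.0 - reserve_fraction)
```

For an out-and-back route, a steady wind costs more on the headwind leg than it saves on the tailwind leg, so the round trip always needs more energy than in still air. This is the kind of effect a trainee learns to budget for.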

AS8. Compliance and Risk Management

Drone operations are governed by strict regulations, and pilots must understand airspace restrictions, safety protocols, and risk mitigation strategies.

Ensuring compliance avoids hefty fines and legal issues, while robust risk management minimizes operational disruptions.

Unreal Engine’s simulation accuracy includes replicating restricted airspaces, regulatory conditions, and operational risks, helping pilots practice compliant and safe operations. For example – in a compliance-focused simulation, crossing a geofenced area triggers an alert and reduces drone functionality, forcing the pilot to retreat. This ensures trainees are aware of regulatory boundaries and the consequences of violations.

Unreal Engine’s trigger volumes are invisible 3D volumes that can be placed in the virtual environment to define specific areas or zones. When a drone enters or exits a trigger volume, it can activate various actions, making it ideal for simulating geofencing rules and restricted airspaces. For example:

- No-fly zones: Define trigger volumes around airports, military bases, or other sensitive areas. When a drone enters these zones, the simulation can:

  - Issue visual and auditory warnings: Alert the pilot of the violation.

  - Restrict drone functionality: Automatically limit altitude, speed, or even force the drone to land or return to home.

  - Log the violation: Record the incident for post-flight analysis and debriefing.

- Altitude restrictions: Create trigger volumes that enforce altitude limits in specific areas. When a drone flies above the defined altitude, the simulation can trigger similar warnings and restrictions.

- Temporary flight restrictions (TFRs): Simulate dynamic TFRs that might be imposed due to events like wildfires or sporting events. Trigger volumes can be activated or deactivated in real-time to reflect these changing restrictions.
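The enter/exit behavior of trigger volumes described above can be sketched independently of any engine. The Python example below models each restricted zone as an axis-aligned box with an `active` flag (to mimic a temporary restriction being switched on or off) and reports enter and exit events as the drone’s position updates. The class names, fields, and event format are hypothetical and are not UE’s trigger volume API.

```python
from dataclasses import dataclass


@dataclass
class GeofenceVolume:
    name: str
    min_corner: tuple  # (x, y, z)
    max_corner: tuple  # (x, y, z)
    active: bool = True  # toggled at runtime to model a temporary flight restriction

    def contains(self, pos):
        return self.active and all(
            lo <= p <= hi for lo, p, hi in zip(self.min_corner, pos, self.max_corner)
        )


class GeofenceMonitor:
    def __init__(self, volumes):
        self.volumes = volumes
        self.inside = set()  # names of volumes the drone is currently in
        self.violation_log = []  # (timestamp, volume name, position) for debriefing

    def update(self, timestamp, pos):
        """Return the list of ("enter" | "exit", volume name) events for this position."""
        events = []
        for vol in self.volumes:
            now_inside = vol.contains(pos)
            was_inside = vol.name in self.inside
            if now_inside and not was_inside:
                self.inside.add(vol.name)
                self.violation_log.append((timestamp, vol.name, pos))
                events.append(("enter", vol.name))
            elif was_inside and not now_inside:
                self.inside.discard(vol.name)
                events.append(("exit", vol.name))
        return events
```

An altitude ceiling fits the same pattern: it is a box whose lower face sits at the permitted maximum height. The returned events are where a simulation would hook in warnings or functional restrictions.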

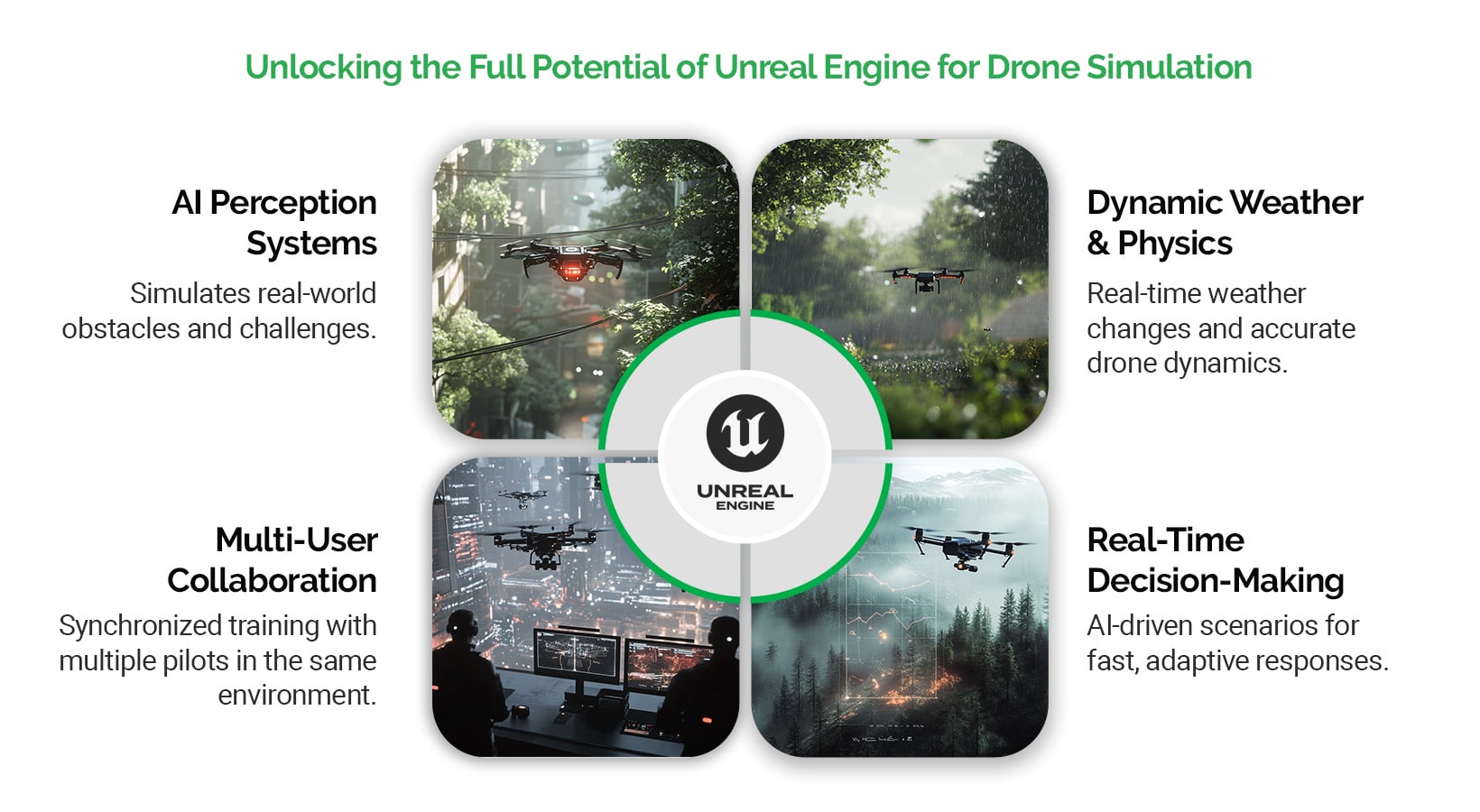

UE Capabilities that help with these Advanced Skills

- High-Fidelity Rendering: Unreal Engine’s Nanite Virtualized Geometry allows the creation of incredibly detailed terrains, buildings, and obstacles without performance loss.

- Dynamic Lighting and Shadows: Using Lumen, UE delivers realistic lighting conditions and reflections, improving depth perception and visual clarity.

- Blueprint Visual Scripting: Allows developers to create real-time decision-triggering events without needing extensive coding.

- AI Perception Systems: UE’s AI-Powered Scenarios simulate dynamic challenges like sudden obstacles or airspace intrusions. This system allows the drone in the simulation to perceive its environment through virtual sensors like cameras, lidar, and radar. It can detect and classify objects, such as obstacles, other aircraft, or even birds, and provide this information to the drone’s “brain” (the AI controller).

- Behavior Trees: These are visual scripting tools that define the drone’s decision-making logic. They allow developers to create branching decision trees with different actions and conditions. For example, if the AI Perception System detects a bird approaching, the Behavior Tree can trigger a series of actions: assess the threat (Is the bird on a collision course? How fast is it approaching?), prioritize actions (Should the drone attempt to evade, change altitude, or hover in place?), and execute the chosen maneuver (trigger the appropriate flight controls based on the chosen action). UE also allows for the creation of dynamic events, such as sudden wind gusts, GPS signal loss, or airspace intrusions. These events can trigger specific branches in the Behavior Tree, forcing trainees to react quickly and adapt to changing circumstances.

- Weighted Branches: Developers can give different branches in the Behavior Tree different priorities or probabilities, for example through branch ordering or custom nodes. This forces trainees to make difficult choices, prioritizing actions based on sensor data, environmental factors, and the severity of the threat. For example, evading an oncoming aircraft might have a higher priority than avoiding a bird strike.

- NavMesh: In simulation, NavMesh could be used to define 3D flight paths and waypoints for trainees to follow, helping them practice precision maneuvering and navigation within a virtual environment.

- Dynamic Weather Systems: UE supports weather changes using volumetric clouds, realistic rain physics, and wind simulations integrated through the Chaos Physics Engine.

- Sequencer Tool: Allows developers to program dynamic changes in the environment, such as evolving weather or moving obstacles.

- Custom Blueprint Systems: Developers can replicate drone sensors like lidar, GPS, and thermal cameras, including their response to environmental inputs. Furthermore, customizable systems can simulate pre-flight route planning and optimization.

- Post-Processing Effects: Simulates thermal or night-vision camera feeds with UE’s post-processing materials.

- Chaos Physics Engine: Provides high-precision physics calculations, accurately simulating drone dynamics such as thrust, drag, and inertia.

- Input Handling: UE supports customizable input mapping, allowing integration with real-world drone controllers for realistic handling.

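- Multi-User Editing and Networking: UE’s networking support enables collaborative training sessions where multiple pilots or ground crews operate in the same virtual environment. Pixel Streaming can deliver the running simulation to remote participants through a web browser, and Multi-User Editing lets developers build and adjust scenarios together.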

- In-World Messaging Systems: Using UE widgets, in-world communication cues or task notifications can be simulated. The simulation can provide immediate feedback on the trainee’s decisions, highlighting the consequences of their actions and reinforcing correct procedures.

- World Composition Tool (superseded by World Partition in UE5): Enables large-scale terrains for long-range mission simulations.

- Level Streaming: Allows seamless loading of new areas as the drone progresses, ensuring continuous and realistic mission execution.

- Geofencing Simulation: UE can replicate restricted airspaces using invisible boundary volumes and trigger warnings when a drone crosses them.

- Physics-Based Failures: Simulating risks like sensor failures or power depletion with UE’s physics engine enhances pilots’ ability to manage real-world risks.

- Replication System: Unreal Engine has a robust replication system that ensures all connected clients (trainees) see a consistent and synchronized version of the simulation.
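The threat-prioritization idea from the Behavior Trees and Weighted Branches items in the list above can be illustrated with a tiny priority selector: conditions are checked in a fixed order, and the first one that holds decides the maneuver. This is a conceptual Python sketch, not UE’s Behavior Tree API; the percept keys, thresholds, and action names are hypothetical.

```python
def choose_maneuver(percepts):
    """Return the action of the first branch whose condition holds, in priority order."""
    branches = [
        # Highest priority first: an oncoming aircraft outranks a bird, which outranks GPS loss.
        (lambda p: p.get("aircraft_distance_m", float("inf")) < 200.0, "descend_and_yield"),
        (lambda p: p.get("bird_closing_speed_mps", 0.0) > 5.0, "evade_laterally"),
        (lambda p: p.get("gps_lost", False), "hover_and_hold"),
    ]
    for condition, action in branches:
        if condition(percepts):
            return action
    return "continue_mission"
```

For instance, with both an aircraft at 150 m and a fast-approaching bird in the percepts, the selector picks the aircraft response because that branch is evaluated first. This mirrors the example of evading an aircraft taking precedence over avoiding a bird strike.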

Conclusion

The skills developed through Unreal Engine-based simulation are not just theoretical; they directly translate to improved operational outcomes, reduced costs, and increased safety. UE’s technical capabilities, from dynamic environments and AI systems to real-time physics and sensor simulation, make it a powerful tool for developing advanced drone pilot skills. These features let pilots train in a highly realistic and immersive environment, mastering the skills needed to excel in complex real-world missions while minimizing risks and costs.

WalkingTree’s expertise in leveraging these capabilities ensures organizations achieve unmatched training outcomes, preparing pilots to meet the demands of the evolving drone industry confidently. By investing in advanced simulation solutions, organizations can produce elite drone pilots equipped to handle the complexities of modern operations, ensuring mission success while maximizing safety and profitability.

At WalkingTree Technologies, we leverage Unreal Engine to create tailored training solutions that deliver the desired results. If you are ready to elevate your Drone Pilot Training program, let’s connect!