In this blog, we discuss some general challenges with Machine Learning models, why model monitoring is needed, and different ways of implementing it. We also look at different aspects of Amazon SageMaker Model Monitor, the AWS service for fully managed, automatic monitoring of Machine Learning models.

The blog is organized as follows:

- Introduction

- Nature of Real-life data

- Impact on Machine Learning Models

- Monitoring Machine Learning Models

- Amazon SageMaker Model Monitor

- Types of Model Monitoring using Model Monitor

- How to Use Model Monitor

- End-to-End Workflow of Model Monitoring

Introduction

If data is the new oil and machine learning models are the engine of the future, data availability and quality become supercritical for a successful journey.

ML models play a significant role in driving business decisions, and unless they are driven by good quality data, they can have a disastrous impact on business.

It is not uncommon to hear how machine learning models fail, even when they are developed and deployed after multiple rounds of training and testing.

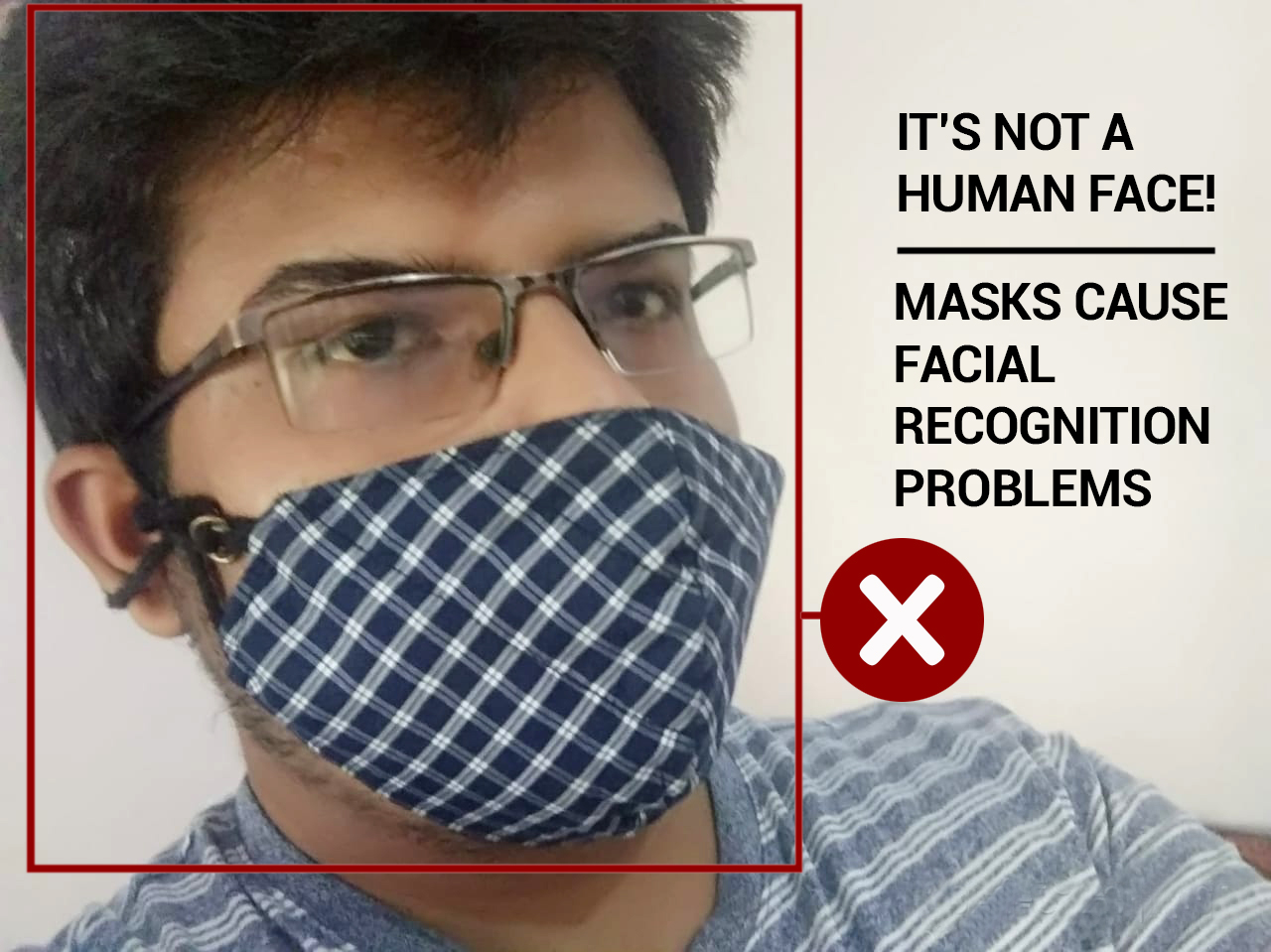

Ever had a case where a face detection system could not detect your face, or a traffic sign detection system misread a sign? Ever noticed your ML model throwing out weird results? Have you ever had problems caused by unexpected NULL values or by exotic character encodings?

In a COVID-affected world, and one with great political and market volatility, constant change is the norm, and not the exception, in most domains. Given this, ML models need to be constantly monitored and retrained.

Let us discuss why this happens in greater detail.

Nature of real-life data

Machine Learning models are generally developed using carefully curated datasets and tested on equally clean datasets, but the nature of data they get in production can be very different statistically and can lead to unexpected results/predictions.

This divergence in data can occur due to a wide variety of reasons:

1. The conditions in which the data is collected change, resulting in a change in the nature of the data itself.

For example, a face detection system may be trained on faces without masks and may end up not detecting people wearing masks.

Similarly, an object detection system may be trained on a dataset taken during summer, but it won’t perform as well in winter as the objects might be covered with snow.

2. A systematic flaw in data collection or labelling can lead to undesired results. Data is, after all, measured through instruments and sensors, and as these age, their readings can become inaccurate.

3. The statistical distribution of data used to train the model may change drastically over time, and this can later result in model bias.

This can happen, for example, with changes in the demographics of a region or in the mix of different kinds of samples. If we train a model for detecting chronic bronchitis mainly on non-smokers, the results may be very different when the model is applied to a population containing a significant share of smokers.

4. Some of the data may be new. For example, there will be a need to update the autonomous driving model for vehicles so that new objects on the road can be detected.

Impact on Machine Learning Models

All this can lead to deterioration of model predictions/accuracy over time. This is known as ‘Model drift’. More precisely, model drift is the deterioration in performance of a model caused by changes in data and relationships between input and output variables.

Model drift can be caused by:

1. Data drift

This happens when the model’s input distribution changes. This does not mean a change in the relationship between the input variables and the output. The degradation in performance happens because the data the model sees in real time is statistically different from what it was trained on.

The chronic bronchitis model mentioned above is an example of data drift.

2. Concept drift

Concept drift occurs when the relationship between the input variables and the output changes. This generally happens because of an external factor.

For example, a model built before COVID-19 to predict an organization’s profits would not hold during or after the pandemic.

Since different kinds of drift can degrade model performance, it becomes critical to monitor ML models.
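To make data drift more concrete, here is a minimal sketch that compares the training and production distributions of a single categorical feature using the Population Stability Index (PSI). PSI is one commonly used drift measure and is an assumption of this sketch; it is not necessarily what any particular monitoring service computes. The data, the function names and the 0.2 threshold are all hypothetical.

```python
import math
from collections import Counter


def category_shares(values, categories):
    """Return the share of each category, floored to avoid log(0)."""
    counts = Counter(values)
    total = len(values)
    return {c: max(counts[c] / total, 1e-6) for c in categories}


def population_stability_index(baseline, live):
    """PSI = sum over categories of (live - base) * ln(live / base)."""
    categories = sorted(set(baseline) | set(live))
    base = category_shares(baseline, categories)
    cur = category_shares(live, categories)
    return sum((cur[c] - base[c]) * math.log(cur[c] / base[c])
               for c in categories)


# Hypothetical data: the training set is mostly non-smokers,
# while production traffic contains many more smokers.
training = ["non_smoker"] * 90 + ["smoker"] * 10
production = ["non_smoker"] * 55 + ["smoker"] * 45

psi = population_stability_index(training, production)
drifted = psi > 0.2  # 0.2 is a frequently quoted rule of thumb, not a standard
print(f"PSI = {psi:.3f}, drift flagged: {drifted}")
```

A PSI of zero means the two distributions are identical; the larger the value, the further the production data has moved away from the training data. Note that a check like this only sees the inputs, so it can reveal data drift but not concept drift.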

Monitoring Machine Learning Models

There are two ways of monitoring Machine Learning models.

1. Manual Model Monitoring: In this, all the steps are implemented by the MLOps engineers themselves. These include:

(a) Capturing data received by models.

(b) Running statistical analyses to compare the data received by the model with the training set.

(c) Defining rules for drift detection.

(d) Sending alerts if the conditions are satisfied.

The main limitation of this approach is that it requires expert MLOps engineers and is time-consuming. Furthermore, the entire sequence of steps needs to be repeated every time the models get updated.

2. Managed Automatic Model Monitoring Service: These services do the heavy lifting for you, and enable you to focus on what matters the most – value creation for customers.
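To give a feel for the effort involved in the manual approach, steps (a) to (d) can be sketched in a few lines of plain Python. This is only an illustration: the feature, the values, the mean-shift rule and the `max_shift` threshold are assumptions, and a real setup would also need storage for captured data, scheduling and a proper alerting channel.

```python
import statistics


def mean_shift(training_values, captured_values):
    """Step (b): shift of the live mean, in training standard deviations."""
    mu = statistics.mean(training_values)
    sigma = statistics.stdev(training_values)
    return abs(statistics.mean(captured_values) - mu) / sigma


def check_feature(name, training_values, captured_values, max_shift, alert):
    """Steps (c) and (d): apply a drift rule and alert when it fires."""
    shift = mean_shift(training_values, captured_values)
    if shift > max_shift:
        alert(f"{name}: live mean shifted by {shift:.2f} training std devs")
        return True
    return False


# Step (a): in practice these would be request payloads logged by the
# serving layer; here they are hard-coded, hypothetical values.
training_ages = [34, 41, 29, 50, 38, 45, 31, 47]
captured_ages = [62, 70, 58, 66, 73, 61]

alerts = []  # stand-in for an email, pager or chat notification
fired = check_feature("age", training_ages, captured_ages,
                      max_shift=2.0, alert=alerts.append)
print(fired, alerts)
```

Every feature, rule and threshold in such a script has to be maintained by hand and revisited whenever the model changes, which is exactly the limitation described above.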

In this blog, we discuss the AWS managed monitoring service, Amazon SageMaker Model Monitor. Let us look at it in detail.

Amazon SageMaker Model Monitor

As mentioned above, SageMaker Model Monitor is AWS’s Fully Managed Automatic Monitoring Service for Machine Learning Models.

Model Monitor helps in continuous monitoring of Machine Learning models as it:

(a) detects data and concept drift in real time.

(b) sends alerts to take immediate action.

(c) monitors model performance characteristics such as accuracy so that action can be taken to address anomalies.

Model Monitor is also integrated with another AWS service, Amazon SageMaker Clarify, to:

(a) Help identify potential bias in ML models.

(b) Configure alerts through services such as Amazon CloudWatch, in case the model begins to develop bias.

The metrics generated by Model Monitor can also be viewed in Amazon SageMaker Studio. This makes it possible to visually analyze model performance without writing any additional code. Furthermore, it is also integrated with visualization tools like Tableau, Amazon QuickSight, and TensorBoard.

Types of Model Monitoring using Model Monitor

Amazon SageMaker Model Monitor provides the following types of monitoring:

(a) Monitor Data Quality: Monitor drift in data quality. The input is often expected to have certain statistical properties. For example, the model may expect the values of some features to be non-negative and within a specific range: in a model for predicting heart disease, age has to be non-negative and should not, generally speaking, exceed 100.

Similarly, the output values may also be restricted. For example, we may expect the day temperature to be between 30 degrees Celsius and 55 degrees Celsius in certain parts of India during the summer season, and if the model outputs a value of 5 degrees, it will be flagged as an anomaly.

(b) Monitor Model Quality: Monitor drift in model quality metrics, such as accuracy. A drop in model performance can have a significant business impact, and must be avoided. Model performance is generally calculated based on certain metrics.

The metrics chosen depend on the kind of ML problem. For example, if the model is for regression, mean square error is one of the metrics that are evaluated. For binary classification, precision, recall and F1 score are some of the evaluated metrics.

For example, in an email spam detection case, if the precision falls below a threshold, or in a fraud detection case, if the recall falls below a certain threshold, it indicates a serious degradation in model performance and appropriate alerts need to be issued for the same.

(c) Monitor Bias Drift for Models in Production: Monitor bias in the model’s predictions. A model with biased predictions can also have disastrous outcomes for the business. For example, a model for processing loan requests may reject a valid request and accept an invalid one, or a machine learning model for filtering resumes may exclude candidates based on race, color, gender, etc. It is critical that the bias is detected, so that corrective action can be taken.

(d) Monitor Feature Attribution Drift for Models in Production: Monitor drift in feature attribution. This happens when the feature attribution values change because of a drift in distribution of live data, say due to external factors. For example, in a student ranking/scoring system, if the attribution of different features like marks, attitude and attendance changes, it becomes a case of feature attribution drift. Feature attribution drift cases need to be looked at individually, but first of all, alerts should be raised for the same.
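The model quality check described in (b) can be illustrated with a small sketch. It computes precision, recall and F1 score for a binary classifier and reports which metrics fell below a minimum. The labels, predictions and thresholds are hypothetical, and the sketch assumes that ground-truth labels for the predictions become available after the fact, which any model quality check of this kind needs.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


def quality_violations(metrics, minimums):
    """Return the names of metrics that fell below their minimum."""
    return [name for name, floor in minimums.items() if metrics[name] < floor]


# Hypothetical fraud labels collected after the fact (1 = fraud).
labels      = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predictions = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]

metrics = precision_recall_f1(labels, predictions)
violations = quality_violations(metrics, {"precision": 0.6, "recall": 0.8})
print(metrics, violations)
```

In this made-up fraud example the model misses half of the fraudulent cases, so recall is the metric that breaches its minimum, matching the fraud detection scenario described above.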

How to Use Model Monitor

The following steps need to be taken for using Amazon SageMaker Model Monitor.

1. Data capture needs to be enabled on the model’s endpoint, so that both the incoming requests and the resulting model predictions are recorded.

2. A baseline, with metrics and suggested constraints, should be created from the training dataset. If the real-time predictions from the model are outside the constrained values, they should be reported as violations.

3. A monitoring schedule should be created specifying the data to be collected and the frequency of collection. The way of analysis and the reports to be produced should also be specified.
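The idea behind the baseline and constraints in step 2 can be sketched in plain Python. The sketch below does not call AWS; it only mimics the concept of deriving constraints from training data and reporting violations in captured data. The feature names, the rows and the two helper functions are hypothetical. In the SageMaker Python SDK, the three steps correspond roughly to `DataCaptureConfig`, `DefaultModelMonitor.suggest_baseline` and `DefaultModelMonitor.create_monitoring_schedule`.

```python
def suggest_constraints(rows):
    """Derive per-feature min/max and completeness from training rows."""
    constraints = {}
    for feature in rows[0]:
        present = [r[feature] for r in rows if r[feature] is not None]
        constraints[feature] = {
            "min": min(present),
            "max": max(present),
            "completeness": len(present) / len(rows),
        }
    return constraints


def find_violations(captured_rows, constraints):
    """Compare captured rows with the baseline and list each violation."""
    violations = []
    for index, row in enumerate(captured_rows):
        for feature, rule in constraints.items():
            value = row.get(feature)
            if value is None:
                # Only a violation if the baseline never had missing values.
                if rule["completeness"] == 1.0:
                    violations.append((index, feature, "missing value"))
            elif not rule["min"] <= value <= rule["max"]:
                violations.append((index, feature, f"out of range: {value}"))
    return violations


# Hypothetical training rows and captured requests for a heart disease model.
training_rows = [{"age": 29, "cholesterol": 180},
                 {"age": 54, "cholesterol": 240},
                 {"age": 71, "cholesterol": 210}]
captured_rows = [{"age": 45, "cholesterol": 200},
                 {"age": -3, "cholesterol": 220},
                 {"age": 60, "cholesterol": None}]

constraints = suggest_constraints(training_rows)
report = find_violations(captured_rows, constraints)
print(report)
```

A monitoring schedule, as in step 3, would simply run a comparison like `find_violations` at a fixed interval over the data captured since the previous run.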

End-to-End Workflow of Model Monitoring

To understand the end-to-end workflow of Model Monitoring, and to get an in-depth understanding of the different kinds of drift, join us in our upcoming webinar on ‘Monitoring Machine Learning Models using Amazon SageMaker Model Monitor’, where we illustrate the workflow using an industry use-case implementation.
